Computer science articles from across Nature Portfolio

Computer science is the study and development of the protocols required for automated processing and manipulation of data. This includes, for example, creating algorithms for efficiently searching large volumes of information or encrypting data so that it can be stored and transmitted securely.

Deep learning at the forefront of detecting tipping points

A deep learning-based method shows promise in issuing early warnings of rate-induced tipping, of particular interest in anticipating effects due to anthropogenic climate change.

- Partha Sharathi Dutta

Latest Research and Reviews

GrandQC: A comprehensive solution to quality control problem in digital pathology

Histological slides often contain artifacts that affect the performance of downstream image analysis. Here, the authors present GrandQC, a tool that enables high-precision tissue and artifact segmentation in histological slides. This tool can be used to monitor sample preparation and scanning quality across pathology departments.

- Zhilong Weng

- Alexander Seper

- Yuri Tolkach

An integrative data-driven model simulating C. elegans brain, body and environment interactions

BAAIWorm is an integrative data-driven model of C. elegans that simulates interactions between the brain, body and environment. The biophysically detailed neuronal model is capable of replicating the zigzag movement observed in this species.

- Mengdi Zhao

- Tiejun Huang

Kernel approximation using analogue in-memory computing

A kernel approximation method that enables linear-complexity attention computation via analogue in-memory computing (AIMC) to deliver superior energy efficiency is demonstrated on a multicore AIMC chip.

- Julian Büchel

- Giacomo Camposampiero

- Abu Sebastian
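
The general idea of kernel approximation mentioned in this summary can be illustrated in software. The sketch below uses random Fourier features, a standard technique swapped in here for illustration, to approximate a Gaussian kernel with an explicit feature map; it is not the method run on the AIMC chip, and the names `make_feature_map`, `gaussian_kernel`, and `dot` are hypothetical. Once a kernel is written as a dot product of feature vectors, attention-style sums can be regrouped so that cost grows linearly with sequence length.

```python
import math
import random


def make_feature_map(dim, num_features, seed=0):
    """Build a random Fourier feature map for the kernel exp(-||x - y||^2 / 2)."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    weights = [[rng.gauss(0.0, 1.0) for _ in range(dim)]
               for _ in range(num_features)]
    offsets = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(num_features)]
    scale = math.sqrt(2.0 / num_features)

    def phi(x):
        # Each feature is a randomly projected, randomly shifted cosine.
        return [scale * math.cos(sum(w_i * x_i for w_i, x_i in zip(w, x)) + b)
                for w, b in zip(weights, offsets)]

    return phi


def gaussian_kernel(x, y):
    """Exact Gaussian kernel with unit bandwidth."""
    squared_distance = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-squared_distance / 2.0)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


phi = make_feature_map(dim=3, num_features=4000)
x = [0.2, -0.1, 0.4]
y = [0.0, 0.3, 0.1]

exact = gaussian_kernel(x, y)
approx = dot(phi(x), phi(y))  # inner product of features approximates the kernel
print(f"exact={exact:.3f} approx={approx:.3f}")
```

The approximation error shrinks as `num_features` grows, at the cost of longer feature vectors.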

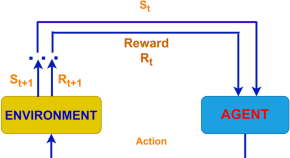

A reinforcement learning approach for reducing traffic congestion using deep Q learning

- S M Masfequier Rahman Swapno

- SM Nuruzzaman Nobel

- Mohamed Tounsi

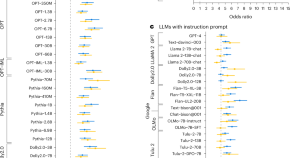

Generative language models exhibit social identity biases

Researchers show that large language models exhibit social identity biases similar to those of humans, favoring ingroups and showing hostility toward outgroups. These biases persist across models, training data and real-world human–LLM conversations.

- Tiancheng Hu

- Yara Kyrychenko

- Jon Roozenbeek

Quantum error correction below the surface code threshold

- Rajeev Acharya

- Dmitry A. Abanin

- Nicholas Zobrist

News and Comment

Better data sets won’t solve the problem — we need AI for Africa to be developed in Africa

Language models developed by big technology companies consistently underperform in African languages. It’s time to focus on local solutions.

- Nyalleng Moorosi

The AI revolution is running out of data. What can researchers do?

AI developers are rapidly picking the Internet clean to train large language models such as those behind ChatGPT. Here’s how they are trying to get around the problem.

- Nicola Jones

What should we do if AI becomes conscious? These scientists say it’s time for a plan

Researchers call on technology companies to test their systems for consciousness and create AI welfare policies.

- Mariana Lenharo

Can AI make scientific data more equitable?

Biased and unrepresentative scientific data can lead to misleading conclusions and potentially harm patients. Artificial intelligence (AI) might be able to help make data more representative, but only if a standardized approach to assessing the quality of AI-generated data is established.

More-powerful AI is coming. Academia and industry must oversee it — together

AI companies want to give machines human-level intelligence, or AGI. The safest and best results will come when academic and industry scientists collaborate to guide its development.

Computer Science Research Topics

Computer science research involves investigating theoretical foundations, methodologies, and applications of computing and information processing. It encompasses a broad range of topics, from designing algorithms to developing hardware systems and exploring human-computer interaction. This research aims to solve computational problems, improve system efficiencies, and contribute to technological innovations that benefit society.

1. Artificial Intelligence and Machine Learning

Artificial Intelligence (AI) and Machine Learning (ML) are among the most prominent areas in computer science. Research in this domain focuses on building intelligent systems that can perform tasks autonomously.

- Deep learning architectures and optimization techniques.

- Explainable AI for transparent decision-making.

- Applications of AI in healthcare, such as diagnostic tools.

- Natural language processing (NLP) and sentiment analysis.

- Relevance: These technologies power innovations like autonomous vehicles, personalized recommendations, and smart assistants.

2. Data Science and Big Data

Data science research explores techniques for analyzing and deriving insights from vast datasets. Big data research addresses challenges in storing, processing, and managing data at scale.

- Data mining algorithms and their applications.

- Real-time big data analytics for IoT systems.

- Visualization techniques for complex datasets.

- Ethical issues in big data, including privacy concerns.

- Relevance: This area impacts decision-making in sectors like business intelligence, public health, and urban planning.

3. Cybersecurity and Cryptography

Cybersecurity research aims to protect systems, networks, and data from unauthorized access, while cryptography focuses on secure communication techniques.

- Blockchain technology for secure data transactions.

- Quantum cryptography and its future applications.

- Malware detection using machine learning.

- Security protocols for IoT devices.

- Relevance: Research in this area safeguards critical infrastructure, financial systems, and personal data.

4. Robotics and Automation

Robotics research involves designing autonomous systems capable of performing tasks in various environments, from factories to outer space.

- Collaborative robots (cobots) for industrial automation.

- Swarm robotics for distributed problem-solving.

- Ethical considerations in robotics deployment.

- Advancements in robotic vision and perception.

- Relevance: Robotics is transforming industries like manufacturing, logistics, and healthcare through automation.

5. Human-Computer Interaction (HCI)

HCI research examines how humans interact with computers and designs user-friendly systems that enhance user experiences.

- Usability testing methodologies for software applications.

- Augmented and virtual reality (AR/VR) interfaces.

- Adaptive systems for accessibility in computing.

- Gamification techniques in education and training.

- Relevance: HCI research improves the usability of products ranging from websites to virtual reality applications.

6. Software Engineering

Software engineering research focuses on methodologies, tools, and best practices for software development.

- Agile and DevOps methodologies for scalable projects.

- Bug detection and automated debugging tools.

- Software architecture for distributed systems.

- Ethical considerations in software development.

- Relevance: This area ensures the creation of reliable, efficient, and maintainable software systems.

7. Quantum Computing

Quantum computing explores computational systems that use quantum-mechanical phenomena, promising revolutionary capabilities in processing power.

- Quantum algorithms for optimization and cryptography.

- Error correction techniques in quantum computing.

- Applications in material science and drug discovery.

- Quantum machine learning for advanced data analysis.

- Relevance: Quantum computing holds the potential to solve problems intractable for classical computers, impacting fields like logistics and artificial intelligence.

8. Cloud Computing and Edge Computing

Research in cloud and edge computing addresses scalable, distributed, and decentralized systems to meet modern computational demands.

- Security and privacy in cloud-based systems.

- Resource allocation strategies for edge devices.

- Serverless computing and its implications for development.

- IoT integration with edge and cloud platforms.

- Relevance: These technologies drive innovations in smart cities, e-commerce, and real-time applications.

9. Networking and Communication

Networking research investigates protocols, architectures, and security mechanisms for efficient data communication.

- 5G and beyond: challenges and opportunities.

- Network security and intrusion detection systems.

- Software-defined networking (SDN) for scalable infrastructures.

- Delay-tolerant networking for space communications.

- Relevance: Research in networking supports advancements in telecommunications, IoT, and cloud computing.

10. Bioinformatics and Computational Biology

Bioinformatics research applies computational techniques to biological data, addressing challenges in genomics, proteomics, and drug discovery.

- Sequence alignment algorithms for genome analysis.

- AI-driven drug discovery and development.

- Modeling of biological networks and systems.

- Personalized medicine through computational approaches.

- Relevance: This area bridges computer science and biology, offering solutions in healthcare and biotechnology.

Emerging Research Topics in Computer Science

- Green Computing: Optimizing energy use in data centers and devices to reduce environmental impact.

- AI Ethics: Addressing bias, fairness, and accountability in AI systems.

- Digital Twin Technology: Simulating physical systems for real-time analysis and optimization.

- Metaverse Development: Exploring the integration of virtual and physical realities.

- Post-Quantum Cryptography: Developing encryption systems resistant to quantum attacks.

Computer science research is at the forefront of technological advancement, addressing challenges that shape our digital future. By exploring areas such as artificial intelligence, cybersecurity, and quantum computing, researchers contribute to innovations that drive progress in various sectors. Whether focusing on theoretical foundations or practical applications, computer science offers a wealth of opportunities for impactful research.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

Computer Science News

Latest Headlines

- Rethinking the Quantum Chip

- Multitasking in Quantum Machine Learning

- Cracking the Code for Materials That Can Learn

- AI Cracks Complex Engineering Problems Fast

- Computer Memory That Keeps Working Above 1000°F

- Training AI: Human Interactions Not Datasets

- Innovative Robot Navigation Inspired by Brain

- Accelerating Climate Modeling With Generative AI

- Next Gen High-Performance Flexible Electronics

- To Build Better Fiber Optic Cables, Ask a Clam

Earlier Headlines

Monday, December 2, 2024

- Researchers Demonstrate Self-Assembling Electronics

- Photonic Processor Could Enable Ultrafast AI Computations With Extreme Energy Efficiency

Friday, November 29, 2024

- Inside the 'Swat Team' -- How Insects React to Virtual Reality Gaming

Tuesday, November 26, 2024

- Researchers Highlight Nobel-Winning AI Breakthroughs and Call for Interdisciplinary Innovation

Monday, November 25, 2024

- The Future of Edge AI: Dye-Sensitized Solar Cell-Based Synaptic Device

Thursday, November 21, 2024

- Scientists Develop Novel High-Fidelity Quantum Computing Gate

- Quantum-Inspired Design Boosts Efficiency of Heat-to-Electricity Conversion

Tuesday, November 19, 2024

- New Ion Speed Record Holds Potential for Faster Battery Charging, Biosensing

- Next Step in Light Microscopy Image Improvement

- New Method of Generating Eco-Friendly Energy

- Incorrect AI Advice Influences Diagnostic Decisions, Study Finds

- AI Needs to Work on Its Conversation Game

Monday, November 18, 2024

- Leaner Large Language Models Could Enable Efficient Local Use on Phones and Laptops

Thursday, November 14, 2024

- AI Headphones Create a 'Sound Bubble,' Quieting All Sounds More Than a Few Feet Away

- Scientists Use Math to Predict Crystal Structure in Hours Instead of Months

- AI Method Can Spot Potential Disease Faster, Better Than Humans, Study Finds

Wednesday, November 13, 2024

- How 'Clean' Does a Quantum Computing Test Facility Need to Be?

Tuesday, November 12, 2024

- In Step Forward for Quantum Computing Hardware, Physicist Uncovers Novel Behavior in Quantum-Driven Superconductors

- Giving Robots Superhuman Vision Using Radio Signals

Monday, November 11, 2024

- Compact Error Correction: Towards a More Efficient Quantum 'Hard Drive'

Friday, November 8, 2024

- New Material to Make Next Generation of Electronics Faster and More Efficient

Thursday, November 7, 2024

- Up to 30% of the Power Used to Train AI Is Wasted: Here's How to Fix It

- Could Crowdsourcing Hold the Key to Early Wildfire Detection?

Tuesday, November 5, 2024

- Despite Its Impressive Output, Generative AI Doesn't Have a Coherent Understanding of the World

- Ensuring a Bright Future for Diamond Electronics and Sensors

- AI-Powered System Detects Toxic Gases With Speed and Precision

Monday, November 4, 2024

- AI for Real-Time, Patient-Focused Insight

- Nanoscale Transistors Could Enable More Efficient Electronics

Thursday, October 31, 2024

- Autistic Traits Shape How We Explore

Wednesday, October 30, 2024

- Smart Sensor Patch Detects Health Symptoms Through Edge Computing

- Quantum Simulator Could Help Uncover Materials for High-Performance Electronics

Monday, October 28, 2024

- Major Development Successes in Diamond Spin Photon Quantum Computers

Thursday, October 24, 2024

- Quantum Experiments and High-Performance Computing: New Methods Enable Complex Calculations to Be Completed Extremely Quickly

Wednesday, October 23, 2024

- Data Security: Breakthrough in Research With Personalized Health Data

- A Multi-Level Breakthrough in Optical Computing

- Listening Skills Bring Human-Like Touch to Robots

Tuesday, October 22, 2024

- Soft Microelectronics Technologies Enabling Wearable AI for Digital Health

Monday, October 21, 2024

- People Hate Stories They Think Were Written by AI: Even If They Were Written by People

- Cloud Computing Captures Chemistry Code

Thursday, October 17, 2024

- New Benchmark Helps Solve the Hardest Quantum Problems

- Controlling Prosthetic Hands More Precisely by the Power of Thought

- Quantum Research Breakthrough Uses Synthetic Dimensions to Efficiently Process Quantum Information

- Material Stimulated by Light Pulses Could Be Leap Toward More Energy-Efficient Supercomputing

Wednesday, October 16, 2024

- New Diamond Bonding Technique a Breakthrough for Quantum Devices

Tuesday, October 15, 2024

- New App Performs Real-Time, Full-Body Motion Capture With a Smartphone

- New Research Reveals How Large-Scale Adoption of Electric Vehicles Can Improve Air Quality and Human Health

- Researchers Develop Cat's Eye-Inspired Vision System for Autonomous Robotics

Thursday, October 10, 2024

- Simulation Mimics How the Brain Grows Neurons, Paving the Way for Future Disease Treatments

Wednesday, October 9, 2024

- Can Advanced AI Solve Visual Puzzles and Perform Abstract Reasoning?

- New Technique Could Unlock Potential of Quantum Materials

- New AI Models of Plasma Heating Lead to Important Corrections in Computer Code Used for Fusion Research

- AI-Trained CCTV in Rivers Can Spot Blockages and Reduce Floods

- Nature and Plastics Inspire Breakthrough in Soft Sustainable Materials

- New Breakthrough Helps Free Up Space for Robots to 'Think', Say Scientists

Tuesday, October 8, 2024

- New Apps Will Enable Safer Indoor Navigation for Visually Impaired

Monday, October 7, 2024

- AI and Quantum Mechanics Team Up to Accelerate Drug Discovery

- Stopping Off-the-Wall Behavior in Fusion Reactors

Thursday, October 3, 2024

- Quantum Researchers Come Up With a Recipe That Could Accelerate Drug Development

- Logic With Light: Introducing Diffraction Casting, Optical-Based Parallel Computing

- Strong Coupling Between Andreev Qubits Mediated by a Microwave Resonator

- Engineers Create a Chip-Based Tractor Beam for Biological Particles

Monday, September 30, 2024

- Helping Robots Zero in on the Objects That Matter

Thursday, September 26, 2024

- AI Trained on Evolution's Playbook Develops Proteins That Spur Drug and Scientific Discovery

Wednesday, September 25, 2024

- Shrinking AR Displays Into Eyeglasses to Expand Their Use

Tuesday, September 24, 2024

- Language Agents Help Large Language Models 'Think' Better and Cheaper

Monday, September 23, 2024

- Paving the Way for New Treatments

Wednesday, September 18, 2024

- Constriction Junction, Do You Function?

Monday, September 16, 2024

- Deep-Learning Innovation Secures Semiconductors Against Counterfeit Chips

Friday, September 13, 2024

- New Discovery Aims to Improve the Design of Microelectronic Devices

- Unveiling the Math Behind Your Calendar

Thursday, September 12, 2024

- Tailored Microbe Communities

- Researchers Discover Building Blocks That Could 'Revolutionize Computing'

Wednesday, September 11, 2024

- Smartphone-Based Microscope Rapidly Reconstructs 3D Holograms

Tuesday, September 10, 2024

- Solving a Memristor Mystery to Develop Efficient, Long-Lasting Memory Devices

Monday, September 9, 2024

- New AI Can ID Brain Patterns Related to Specific Behavior

- Electrically Modulated Light Antenna Points the Way to Faster Computer Chips

Tuesday, September 3, 2024

- Keep Devices out of Bed for Better Sleep

Friday, August 30, 2024

- Topological Quantum Simulation Unlocks New Potential in Quantum Computers

Wednesday, August 28, 2024

- Research Cracks the Autism Code, Making the Neurodivergent Brain Visible

Tuesday, August 27, 2024

- A Human-Centered AI Tool to Improve Sepsis Management

Friday, August 23, 2024

- Unconventional Interface Superconductor Could Benefit Quantum Computing

- Artificial Intelligence Improves Lung Cancer Diagnosis

- Toward a Code-Breaking Quantum Computer

Thursday, August 22, 2024

- DNA Tech Offers Both Data Storage and Computing Functions

- Self-Improving AI Method Increases 3D-Printing Efficiency

Wednesday, August 21, 2024

- Beetle That Pushes Dung With the Help of 100 Billion Stars Unlocks the Key to Better Navigation Systems in Drones and Satellites

- Quenching the Intense Heat of a Fusion Plasma May Require a Well-Placed Liquid Metal Evaporator

Tuesday, August 20, 2024

- Adaptive 3D Printing System to Pick and Place Bugs and Other Organisms

- Scientists Harness Quantum Microprocessor Chips for Revolutionary Molecular Spectroscopy Simulation

Monday, August 19, 2024

- Peering Into the Mind of Artificial Intelligence to Make Better Antibiotics

Friday, August 16, 2024

- Detecting Machine-Generated Text: An Arms Race With the Advancements of Large Language Models

Thursday, August 15, 2024

- Robot Planning Tool Accounts for Human Carelessness

- Advancing Modular Quantum Information Processing

Wednesday, August 14, 2024

- New Brain-Computer Interface Allows Man With ALS to 'Speak' Again

- Smart Fabric Converts Body Heat Into Electricity

- How Air-Powered Computers Can Prevent Blood Clots

- In Subdivided Communities Cooperative Norms Evolve More Easily

Tuesday, August 13, 2024

- Say 'Aah' and Get a Diagnosis on the Spot: Is This the Future of Health?

Monday, August 12, 2024

- New Method for Orchestrating Successful Collaboration Among Robots

- Engineers Make Tunable, Shape-Changing Metamaterial Inspired by Vintage Toys

Computer Science: Recently Published Documents

Hiring CS Graduates: What We Learned from Employers

Computer science (CS) majors are in high demand and account for a large share of applicants in the national computer and information technology job market. Employment in this sector is projected to grow 12% between 2018 and 2028, which is faster than the average for all other occupations. Published data are available on traditional hiring processes that are not specific to computer science. However, the hiring process for CS majors may be different. It is critical to have up-to-date information on questions such as “what positions are in high demand for CS majors?”, “what is a typical hiring process?”, and “what do employers say they look for when hiring CS graduates?” This article discusses the analysis of a survey of 218 recruiters hiring CS graduates in the United States. We used Atlas.ti to analyze qualitative survey data and report the results on what positions are in the highest demand, the hiring process, and the resume review process. Our study revealed that software developer was the most common job the recruiters were looking to fill. We found that the hiring process steps for CS graduates are generally aligned with traditional hiring steps, with an additional emphasis on technical and coding tests. Recruiters reported that their hiring choices were based on reviewing the experience, GPA, and projects sections of resumes. The results provide insights into the hiring process, decision making, resume analysis, and some discrepancies between current undergraduate CS program outcomes and employers’ expectations.

A Systematic Literature Review of Empiricism and Norms of Reporting in Computing Education Research Literature

Context. Computing Education Research (CER) is critical to help the computing education community and policy makers support the increasing population of students who need to learn computing skills for future careers. For a community to systematically advance knowledge about a topic, the members must be able to understand published work thoroughly enough to perform replications, conduct meta-analyses, and build theories. There is a need to understand whether published research allows the CER community to systematically advance knowledge and build theories. Objectives. The goal of this study is to characterize the reporting of empiricism in Computing Education Research literature by identifying whether publications include content necessary for researchers to perform replications, meta-analyses, and theory building. We answer three research questions related to this goal: (RQ1) What percentage of papers in CER venues have some form of empirical evaluation? (RQ2) Of the papers that have empirical evaluation, what are the characteristics of the empirical evaluation? (RQ3) Of the papers that have empirical evaluation, do they follow norms (both for inclusion and for labeling of information needed for replication, meta-analysis, and, eventually, theory-building) for reporting empirical work? Methods. We conducted a systematic literature review of the 2014 and 2015 proceedings or issues of five CER venues: Technical Symposium on Computer Science Education (SIGCSE TS), International Symposium on Computing Education Research (ICER), Conference on Innovation and Technology in Computer Science Education (ITiCSE), ACM Transactions on Computing Education (TOCE), and Computer Science Education (CSE). We developed and applied the CER Empiricism Assessment Rubric to the 427 papers accepted and published at these venues over 2014 and 2015. Two people evaluated each paper using the Base Rubric for characterizing the paper. 
An individual person applied the other rubrics to characterize the norms of reporting, as appropriate for the paper type. Any discrepancies or questions were discussed between multiple reviewers to resolve. Results. We found that over 80% of papers accepted across all five venues had some form of empirical evaluation. Quantitative evaluation methods were the most frequently reported. Papers most frequently reported results on interventions around pedagogical techniques, curriculum, community, or tools. There was a split in papers that had some type of comparison between an intervention and some other dataset or baseline. Most papers reported related work, following the expectations for doing so in the SIGCSE and CER community. However, many papers were lacking properly reported research objectives, goals, research questions, or hypotheses; description of participants; study design; data collection; and threats to validity. These results align with prior surveys of the CER literature. Conclusions. CER authors are contributing empirical results to the literature; however, not all norms for reporting are met. We encourage authors to provide clear, labeled details about their work so readers can use the study methodologies and results for replications and meta-analyses. As our community grows, our reporting of CER should mature to help establish computing education theory to support the next generation of computing learners.

Light Diacritic Restoration to Disambiguate Homographs in Modern Arabic Texts

Diacritic restoration (also known as diacritization or vowelization) is the process of inserting the correct diacritical markings into a text. Modern Arabic is typically written without diacritics, for example in newspapers. This lack of diacritical markings often causes ambiguity, and although native speakers are adept at resolving it, they sometimes fail. Diacritic restoration is a classical problem in computer science. Most existing work tackles the full (heavy) diacritization of text; we are instead interested in diacritizing text using fewer diacritics. Studies have shown that a fully diacritized text is visually displeasing and slows down reading. This article proposes a system to diacritize homographs using the least number of diacritics, thus the name “light.” There is a large class of words that fall under the homograph category, and we deal with the class of words that share a spelling but not a meaning. With fewer diacritics, we do not expect any effect on reading speed, while eye strain is reduced. The system consists of a morphological analyzer and context similarities. The morphological analyzer generates all diacritized candidates for a word. Then, through a statistical approach and context similarities, we resolve the homographs. Experimentally, the system shows very promising results, and our best accuracy is 85.6%.
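
The two-stage pipeline described in this abstract (generate candidates, then pick one by context) can be sketched as a toy example. Everything here is an assumption for illustration: the `CANDIDATES` table stands in for a real morphological analyzer, the transliterated forms and English context words are placeholders, and simple word overlap replaces the paper's statistical context similarity, whose details the abstract does not give.

```python
# Hypothetical candidate table standing in for a morphological analyzer.
# Keys are undiacritized (transliterated) spellings; each candidate reading
# maps to a set of context words it tends to appear with (made-up data).
CANDIDATES = {
    "ktb": {
        "kataba": {"he", "letter", "yesterday", "author"},  # "he wrote"
        "kutub": {"library", "shelf", "many", "read"},      # "books"
    },
}


def disambiguate(word, context):
    """Return the candidate reading whose context profile best overlaps `context`.

    Words that are not known homographs are returned unchanged, which mirrors
    the "light" idea of adding diacritics only where they resolve ambiguity.
    """
    candidates = CANDIDATES.get(word)
    if not candidates:
        return word
    context_words = set(context)
    # Score each candidate by the number of shared context words.
    return max(candidates, key=lambda c: len(candidates[c] & context_words))


print(disambiguate("ktb", ["many", "old", "library"]))
print(disambiguate("ktb", ["the", "author", "yesterday"]))
```

A real system would replace the overlap count with a similarity measure learned from a corpus and would need a tie-breaking rule for contexts that match no candidate.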

A genre-based analysis of questions and comments in Q&A sessions after conference paper presentations in computer science

Gender Diversity in Computer Science at a Large Public R1 Research University: Reporting on a Self-Study

With the number of jobs in computer occupations on the rise, there is a greater need for computer science (CS) graduates than ever. At the same time, most CS departments across the country are only seeing 25–30% of women students in their classes, meaning that we are failing to draw interest from a large portion of the population. In this work, we explore the gender gap in CS at Rutgers University–New Brunswick, a large public R1 research university, using three data sets that span thousands of students across six academic years. Specifically, we combine these data sets to study the gender gaps in four core CS courses and explore the correlation of several factors with retention and the impact of these factors on changes to the gender gap as students proceed through the CS courses toward completing the CS major. For example, we find that a significant percentage of women students taking the introductory CS1 course for majors do not intend to major in CS, which may be a contributing factor to a large increase in the gender gap immediately after CS1. This finding implies that part of the retention task is attracting these women students to further explore the major. Results from our study include both novel findings and findings that are consistent with known challenges for increasing gender diversity in CS. In both cases, we provide extensive quantitative data in support of the findings.

Designing for Student-Directedness: How K–12 Teachers Utilize Peers to Support Projects

Student-directed projects—projects in which students have individual control over what they create and how to create it—are a promising practice for supporting the development of conceptual understanding and personal interest in K–12 computer science classrooms. In this article, we explore a central (and perhaps counterintuitive) design principle identified by a group of K–12 computer science teachers who support student-directed projects in their classrooms: in order for students to develop their own ideas and determine how to pursue them, students must have opportunities to engage with other students’ work. In this qualitative study, we investigated the instructional practices of 25 K–12 teachers using a series of in-depth, semi-structured interviews to develop understandings of how they used peer work to support student-directed projects in their classrooms. Teachers described supporting their students in navigating three stages of project development: generating ideas, pursuing ideas, and presenting ideas. For each of these three stages, teachers considered multiple factors to encourage engagement with peer work in their classrooms, including the quality and completeness of shared work and the modes of interaction with the work. We discuss how this pedagogical approach offers students new relationships to their own learning, to their peers, and to their teachers and communicates important messages to students about their own competence and agency, potentially contributing to aims within computer science for broadening participation.

Creativity in CS1: A Literature Review

Computer science is a fast-growing field in today’s digitized age, and working in this industry often requires creativity and innovative thought. An issue within computer science education, however, is that large introductory programming courses often offer little opportunity for creative thinking within coursework. The undergraduate introductory programming course (CS1) is notorious for its poor student performance and retention rates across multiple institutions. Integrating opportunities for creative thinking may help combat this issue by adding a personal touch to course content, which could allow beginner CS students to better relate to the abstract world of programming. Research on the role of creativity in computer science education (CSE) leaves considerable room for exploration, both because creativity is a complex phenomenon and because CSE research is fairly new compared with other education fields where this topic has been explored more closely. To contribute to this area of research, this article provides a literature review exploring the concept of creativity as relevant to computer science education and CS1 in particular. Based on the review of the literature, we conclude that creativity is an essential component of computer science and that the type of creativity computer science requires is, in fact, a teachable skill through the use of various tools and strategies. These strategies include the integration of open-ended assignments, large collaborative projects, learning by teaching, multimedia projects, small creative computational exercises, game development projects, digitally produced art, robotics, digital storytelling, music manipulation, and project-based learning. Research on each of these strategies and their effects on student experiences within CS1 is discussed in this review. Last, six main components of creativity-enhancing activities are identified based on the studies about incorporating creativity into CS1. These components are as follows: Collaboration, Relevance, Autonomy, Ownership, Hands-On Learning, and Visual Feedback. The purpose of this article is to contribute to computer science educators’ understanding of how creativity is best understood in the context of computer science education and to explore practical applications of creativity theory in CS1 classrooms. This collection of information can inform the restructuring of future introductory programming courses in creative, innovative ways that benefit student learning.

CATS: Customizable Abstractive Topic-based Summarization

Neural sequence-to-sequence models are the state-of-the-art approach used in abstractive summarization of textual documents, useful for producing condensed versions of source text narratives without being restricted to using only words from the original text. Despite the advances in abstractive summarization, custom generation of summaries (e.g., towards a user’s preference) remains unexplored. In this article, we present CATS, an abstractive neural summarization model that summarizes content in a sequence-to-sequence fashion while also introducing a new mechanism to control the underlying latent topic distribution of the produced summaries. We empirically illustrate the efficacy of our model in producing customized summaries and present findings that facilitate the design of such systems. We use the well-known CNN/DailyMail dataset to evaluate our model. Furthermore, we present a transfer-learning method and demonstrate the effectiveness of our approach in a low-resource setting, i.e., abstractive summarization of meeting minutes, where combining the main available meeting transcript datasets, AMI and International Computer Science Institute (ICSI), results in merely a few hundred training documents.

Exploring students’ and lecturers’ views on collaboration and cooperation in computer science courses - a qualitative analysis

Factors affecting student educational choices regarding OER material in Computer Science


MIT News | Massachusetts Institute of Technology


Computer science and technology


Teaching a robot its limits, to complete open-ended tasks safely

The “PRoC3S” method helps an LLM create a viable action plan by testing each step in a simulation. This strategy could eventually help in-home robots complete more ambiguous chore requests.

December 12, 2024


AI in health should be regulated, but don’t forget about the algorithms, researchers say

In a recent commentary, a team from MIT, Equality AI, and Boston University highlights the gaps in regulation for AI models and non-AI algorithms in health care.

Researchers reduce bias in AI models while preserving or improving accuracy

A new technique identifies and removes the training examples that contribute most to a machine-learning model’s failures.

December 11, 2024

Study: Some language reward models exhibit political bias

Research from the MIT Center for Constructive Communication finds this effect occurs even when reward models are trained on factual data.

December 10, 2024

Enabling AI to explain its predictions in plain language

Using LLMs to convert machine-learning explanations into readable narratives could help users make better decisions about when to trust a model.

Daniela Rus wins John Scott Award

MIT CSAIL director and EECS professor named a co-recipient of the honor for her robotics research, which has expanded our understanding of what a robot can be.

December 9, 2024

Citation tool offers a new approach to trustworthy AI-generated content

Researchers develop “ContextCite,” an innovative method to track AI’s source attribution and detect potential misinformation.

A new way to create realistic 3D shapes using generative AI

Researchers propose a simple fix to an existing technique that could help artists, designers, and engineers create better 3D models.

December 4, 2024

Photonic processor could enable ultrafast AI computations with extreme energy efficiency

This new device uses light to perform the key operations of a deep neural network on a chip, opening the door to high-speed processors that can learn in real-time.

December 2, 2024

Improving health, one machine learning system at a time

Marzyeh Ghassemi works to ensure health-care models are trained to be robust and fair.

November 25, 2024

MIT researchers develop an efficient way to train more reliable AI agents

The technique could make AI systems better at complex tasks that involve variability.

November 22, 2024

Advancing urban tree monitoring with AI-powered digital twins

The Tree-D Fusion system integrates generative AI and genus-conditioned algorithms to create precise simulation-ready models of 600,000 existing urban trees across North America.

November 21, 2024

Can robots learn from machine dreams?

MIT CSAIL researchers used AI-generated images to train a robot dog in parkour, without real-world data. Their LucidSim system demonstrates generative AI's potential for creating robotics training data.

November 19, 2024

Four from MIT named 2025 Rhodes Scholars

Yiming Chen ’24, Wilhem Hector, Anushka Nair, and David Oluigbo will start postgraduate studies at Oxford next fall.

November 16, 2024

Graph-based AI model maps the future of innovation

An AI method developed by Professor Markus Buehler finds hidden links between science and art to suggest novel materials.

November 12, 2024

Massachusetts Institute of Technology 77 Massachusetts Avenue, Cambridge, MA, USA



Trending Research

MossFormer2: Combining Transformer and RNN-Free Recurrent Network for Enhanced Time-Domain Monaural Speech Separation

Instead of applying recurrent neural networks (RNNs) that use traditional recurrent connections, we present a recurrent module based on a feedforward sequential memory network (FSMN), which is considered an "RNN-free" recurrent network because it can capture recurrent patterns without using recurrent connections.
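To make the "RNN-free" idea concrete, here is a minimal sketch of an FSMN-style memory block in plain Python. It assumes a simple scalar-tap formulation in which each output frame is the current frame plus a weighted sum of a fixed number of current and past frames. The function name `fsmn_memory`, the tap weights, and the toy input are illustrative assumptions and are not taken from MossFormer2.

```python
from typing import List, Sequence


def fsmn_memory(frames: Sequence[Sequence[float]],
                taps: Sequence[float]) -> List[List[float]]:
    """Feedforward memory: out[t] = frames[t] + sum_i taps[i] * frames[t - i].

    taps[0] weights the current frame, taps[1] the previous frame, and so on.
    Frames before the start of the sequence are treated as zeros.
    No output is fed back as input, so each time step depends only on the
    input frames (assumed formulation, for illustration only).
    """
    out = []
    for t, frame in enumerate(frames):
        mem = list(frame)  # residual connection to the current frame
        for i, weight in enumerate(taps):
            if t - i < 0:
                break  # ran off the start of the sequence
            past = frames[t - i]
            for d in range(len(mem)):
                mem[d] += weight * past[d]
        out.append(mem)
    return out


# Toy input: three 2-dimensional frames and two hypothetical tap weights.
frames = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
taps = [0.5, 0.25]
result = fsmn_memory(frames, taps)
```

Because every output depends only on a fixed window of inputs, the time steps could be computed in parallel, which is the practical difference from a conventional recurrent connection.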

RoboMM: All-in-One Multimodal Large Model for Robotic Manipulation

In recent years, robotics has advanced significantly through the integration of larger models and large-scale datasets.

Robotics, Multimedia

Parsing Millions of DNS Records per Second

nlnetlabs/simdzone • 18 Nov 2024

The Domain Name System (DNS) plays a critical role in the functioning of the Internet.

Data Structures and Algorithms

Fish-Speech: Leveraging Large Language Models for Advanced Multilingual Text-to-Speech Synthesis

Text-to-Speech (TTS) systems face ongoing challenges in processing complex linguistic features, handling polyphonic expressions, and producing natural-sounding multilingual speech - capabilities that are crucial for future AI applications.

Sound, Audio and Speech Processing

NormalFlow: Fast, Robust, and Accurate Contact-based Object 6DoF Pose Tracking with Vision-based Tactile Sensors

rpl-cmu/normalflow • 12 Dec 2024

Tactile sensing is crucial for robots aiming to achieve human-level dexterity.

HLSPilot: LLM-based High-Level Synthesis

xcw-1010/hlspilot • 13 Aug 2024

Large language models (LLMs) have catalyzed an upsurge in automatic code generation, garnering significant attention for register transfer level (RTL) code generation.

Hardware Architecture

InterHub: A Naturalistic Trajectory Dataset with Dense Interaction for Autonomous Driving

zxc-tju/InterHub • 27 Nov 2024

The driving interaction, a critical yet complex aspect of daily driving, lies at the core of autonomous driving research.

Empowering Robot Path Planning with Large Language Models: osmAG Map Topology & Hierarchy Comprehension with LLMs

Large Language Models (LLMs) have demonstrated great potential in robotic applications by providing essential general knowledge.

Collision-Affording Point Trees: SIMD-Amenable Nearest Neighbors for Fast Collision Checking

KavrakiLab/vamp • 4 Jun 2024

Motion planning against sensor data is often a critical bottleneck in real-time robot control.

Preliminary Investigation into Data Scaling Laws for Imitation Learning-Based End-to-End Autonomous Driving

ucaszyp/driving-scaling-law • 3 Dec 2024

Through experimental analysis, we discovered that (1) the performance of the driving model exhibits a power-law relationship with the amount of training data; (2) a small increase in the quantity of long-tailed data can significantly improve the performance for the corresponding scenarios; (3) appropriate scaling of data enables the model to achieve combinatorial generalization in novel scenes and actions.
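A power-law relationship of the kind described in finding (1) is usually checked by fitting a straight line in log-log space. The sketch below shows that step under stated assumptions: the model form `y = a * x**b`, the function name `fit_power_law`, and the synthetic data points are all illustrative and do not come from the paper.

```python
import math
from typing import Sequence, Tuple


def fit_power_law(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float]:
    """Fit y = a * x**b by ordinary least squares on (log x, log y)."""
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    sxx = sum((u - mean_x) ** 2 for u in log_x)
    sxy = sum((u - mean_x) * (v - mean_y) for u, v in zip(log_x, log_y))
    b = sxy / sxx                       # slope in log-log space = exponent
    a = math.exp(mean_y - b * mean_x)   # intercept in log-log space = log(a)
    return a, b


# Synthetic, made-up data generated from a known power law (not real results).
data_sizes = [1e3, 1e4, 1e5, 1e6]
errors = [2.0 * n ** -0.3 for n in data_sizes]
a, b = fit_power_law(data_sizes, errors)
```

On real measurements the points will not lie exactly on a line, so the fitted exponent `b` should be read together with the residuals rather than on its own.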

Center for Security and Emerging Technology

Staying Current with Emerging Technology Trends: Using Big Data to Inform Planning

Emelia Probasco and Christian Schoeberl

This report proposes an approach to systematically identify promising research using big data and analyze that research’s potential impact through structured engagements with subject-matter experts. The methodology offers a structured way to proactively monitor the research landscape and inform strategic R&D priorities.

Introduction

Decision-makers today are pressed to stay ahead of the tsunami of new science and technology research. Many hope that big data and artificial intelligence (AI) will help identify research evolutions and revolutions in real time, or even before they happen. As we will discuss below, data alone cannot predict scientific revolutions. Examining data to stay current with, or slightly ahead of, new technologies, however, is still valuable.

This paper proposes a human-machine teaming approach to systematically identify research developments for an organization. First, our approach starts by identifying papers that the organization has authored. Second, we use those papers to find research clusters in the Center for Security and Emerging Technology (CSET) Map of Science, which displays global academic literature clustered according to citation patterns. Third, we select a subset of clusters based on metadata that we believe indicates important research activity. Fourth, we share the selected clusters with subject matter experts (SMEs) and facilitate a discussion about the research and its potential impact for the organization.
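The first three steps above can be sketched as a small filtering pipeline. This is an illustration only: the `Cluster` type, the `growth_rate` metadata field, the threshold, and the sample records are hypothetical, since the summary does not say which metadata the authors use or how the Map of Science data is accessed.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Cluster:
    cluster_id: int
    growth_rate: float          # hypothetical metadata signal
    paper_ids: FrozenSet[str]   # papers assigned to this cluster


def clusters_for_org(org_papers: Set[str], clusters: List[Cluster]) -> List[Cluster]:
    """Steps 1-2: keep clusters containing at least one paper by the organization."""
    return [c for c in clusters if c.paper_ids & org_papers]


def select_clusters(candidates: List[Cluster], min_growth: float, top_k: int) -> List[Cluster]:
    """Step 3: shortlist by metadata (here an assumed growth-rate threshold)."""
    kept = [c for c in candidates if c.growth_rate >= min_growth]
    kept.sort(key=lambda c: c.growth_rate, reverse=True)
    return kept[:top_k]


# Made-up example records.
org_papers = {"p1", "p4"}
clusters = [
    Cluster(1, 0.4, frozenset({"p1", "p2"})),
    Cluster(2, 0.1, frozenset({"p4"})),
    Cluster(3, 0.9, frozenset({"p9"})),
]
candidates = clusters_for_org(org_papers, clusters)
shortlist = select_clusters(candidates, min_growth=0.2, top_k=5)
shortlist_ids = [c.cluster_id for c in shortlist]
```

The fourth step, discussion with subject matter experts, is a human activity; the shortlist is simply what would be handed to them.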

We describe each of these steps in detail in the sections that follow and use a proof-of-concept experiment to evaluate our approach.

This paper is intended for individuals developing research or investment portfolios and priorities within their organizations. It should also be useful to SMEs interested in exploring or revealing research to which they may not otherwise be exposed as a consequence of increasing specialization.


Related Content

CSET Map of Science

The Emerging Technology Observatory’s Map of Science collects and organizes the world’s research literature, revealing key trends, hotspots, and concepts in global science and technology.

Identifying AI Research

The choice of method for surfacing AI-relevant publications impacts the ultimate research findings. This report provides a quantitative analysis of various methods available to researchers for identifying AI-relevant research within CSET’s merged corpus, and showcases…

Analyzing the Directionality of Citations in the Map of Science

Data Snapshots are informative descriptions and quick analyses that dig into CSET’s unique data resources. This three-part series presents a method to explore and visualize connections across CSET’s research clusters and enable identification of research…

Locating AI Research in the Map of Science

Data Snapshots are informative descriptions and quick analyses that dig into CSET’s unique data resources. Our first series of Snapshots introduced CSET’s Map of Science and explored the underlying data and analytic utility of this…



Relevance: This area bridges computer science and biology, offering solutions in healthcare and biotechnology. Emerging Research Topics in Computer Science. Green Computing: Optimizing energy use in data centers and devices to reduce environmental impact. AI Ethics: Addressing bias, fairness, and accountability in AI systems.

Computer Science. Read all the latest developments in the computer sciences including articles on new software, hardware and systems.

Browse the latest research papers on computer science topics from various journals and sources. Find out the hiring process, empiricism, and diacritic restoration for CS graduates and learners.


Papers With Code highlights trending Computer Science research and the code to implement it. ... (HDP), a new imitation learning method of using objective contacts to guide the generation of robot trajectories.

New computer science technologies include innovations in artificial intelligence, data analytics, machine learning, virtual and augmented reality, UI/UX design, and quantum computing. ... and computer and information research scientist jobs will each grow more than 20% between 2022 and 2032 — much faster than the national projected growth for ...

All the latest science news on computer science from Phys.org. Find the latest news, advancements, and breakthroughs. ... Related topics: ... A research team has made a breakthrough in ...

Computer Science; Topics; Archive; Saved Articles. Create a reading list by clicking the Read Later icon next to the articles you wish to save. See all saved articles. Log out ... Researchers are exploring new ways that quantum computers will be able to reveal the secrets of complex quantum systems.
