5 Quasi-Experimental Design Examples

Dave Cornell (PhD)

Dr. Cornell has worked in education for more than 20 years. His work has involved designing teacher certification for Trinity College in London and in-service training for state governments in the United States. He has trained kindergarten teachers in 8 countries and helped businessmen and women open baby centers and kindergartens in 3 countries.


Chris Drew (PhD)

This article was peer-reviewed and edited by Chris Drew (PhD). The review process on Helpful Professor involves having a PhD level expert fact check, edit, and contribute to articles. Reviewers ensure all content reflects expert academic consensus and is backed up with reference to academic studies. Dr. Drew has published over 20 academic articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education and holds a PhD in Education from ACU.

Quasi-experimental design refers to a type of experimental design that uses pre-existing groups of people rather than randomly assigned groups.

Because the groups of research participants already exist, participants cannot be randomly assigned to a group. This makes it difficult to infer a causal relationship between the treatment and the observed/criterion variable.

Quasi-experimental designs are generally considered inferior to true experimental designs because they offer weaker grounds for causal conclusions.

Limitations of Quasi-Experimental Design

Since participants cannot be randomly assigned to the grouping variable (male/female; high education/low education), the internal validity of the study is questionable.

Extraneous variables may explain the results. For example, in quasi-experimental studies involving gender, males and females differ in numerous cultural and biological ways besides gender itself.

Each one of those variables may be able to explain the results without the need to refer to gender.


Quasi-Experimental Design Examples

1. Smartboard Apps and Math

A school has decided to supplement its math resources with smartboard applications. The math teachers research the available apps and then choose two apps for each grade level. Before purchasing the licenses, the school contacts the seller and asks for permission to demo/test the apps.

The study involves having different teachers use the apps with their classes. Since there are two math teachers at each grade level, each teacher will use one of the apps in their classroom for three months. At the end of three months, all students will take the same math exams. Then the school can simply compare which app improved the students’ math scores the most.

This is called a quasi-experiment because the school did not randomly assign students to one app or the other. The students were already in pre-existing groups/classes.

Although randomly assigning students to one app or the other was impractical, the lack of random assignment makes the results difficult to interpret.

For instance, if students in teacher A’s class did better than the students in teacher B’s class, then can we really say the difference was due to the app? There may be other differences between the two teachers that account for the results. This poses a serious threat to the study’s internal validity.
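To see why the app and the teacher cannot be separated, here is a minimal Python simulation under an assumed, purely hypothetical scoring model (a baseline of 70 points, plus an app effect, plus a teacher effect, plus normal noise). The function `simulate_classes` and all of its numbers are illustrative assumptions, not data from the school. Because each app is used by only one teacher, a 5-point app effect and a 5-point teacher effect produce exactly the same gap between the two classes.

```python
import random
import statistics


def simulate_classes(app_effect, teacher_effect, n_per_class=25, noise_sd=8.0, seed=1):
    """Return (mean score of class A, mean score of class B) for two intact classes.

    Hypothetical model: score = 70 + effects + normal noise.
    Class A uses app 1 with teacher A; class B uses app 2 with teacher B,
    so the app and the teacher are perfectly confounded.
    """
    rng = random.Random(seed)
    class_a = [70 + app_effect + teacher_effect + rng.gauss(0, noise_sd)
               for _ in range(n_per_class)]
    class_b = [70 + rng.gauss(0, noise_sd) for _ in range(n_per_class)]
    return statistics.mean(class_a), statistics.mean(class_b)


# Scenario 1: the app does nothing, but teacher A adds 5 points.
a1, b1 = simulate_classes(app_effect=0.0, teacher_effect=5.0)
# Scenario 2: the app adds 5 points, and the two teachers are identical.
a2, b2 = simulate_classes(app_effect=5.0, teacher_effect=0.0)

print(f"Teacher effect only: gap = {a1 - b1:.2f}")
print(f"App effect only:     gap = {a2 - b2:.2f}")
```

Two different causal stories yield identical data, so the class comparison alone cannot tell the school which story is true.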

2. Leadership Training

There is reason to believe that teaching entrepreneurs modern leadership techniques will improve their performance and shorten how long it takes for them to reach profitability. Team members will feel better appreciated and work harder, which should translate to increased productivity and innovation.

This hypothetical study took place in a third-world country in a mid-sized city. The researchers marketed the training throughout the city and received interest from 5 start-ups in the tech sector and 5 in the textile industry. The leaders of each company then attended six weeks of workshops on employee motivation, leadership styles, and effective team management.

At the end of one year, the researchers returned. They conducted a standard assessment of each start-up’s growth trajectory and administered various surveys to employees.

The results indicated that tech start-ups were further along in their growth paths than textile start-ups. The data also showed that tech work teams reported greater job satisfaction and company loyalty than textile work teams.

Although the results appear straightforward, because the researchers used a quasi-experimental design, they cannot say that the training caused the results.

The two groups may differ in ways that could explain the results. For instance, perhaps there is less growth potential in the textile industry in that city, or perhaps tech leaders are more progressive and willing to accept new leadership strategies.

3. Parenting Styles and Academic Performance

Psychologists are very interested in factors that affect children’s academic performance. Since parenting styles affect a variety of children’s social and emotional profiles, it stands to reason that they may affect academic performance as well. The four parenting styles under study are: authoritarian, authoritative, permissive, and neglectful/uninvolved.

To examine this possible relationship, researchers assessed the parenting styles of 120 families with third graders in a large metropolitan city. Trained raters made two-hour home visits to observe parent/child interactions. These data were later compared with the children’s grades.

The results revealed that children raised in authoritative households had the highest grades of all the groups.

However, because the researchers were not able to randomly assign children to one of the four parenting styles, the internal validity is called into question.

There may be explanations for the results other than parenting style. For instance, perhaps parents who practice authoritative parenting also come from a higher SES demographic than the other parents.

Because they have higher income and education levels, they may put more emphasis on their child’s academic performance. Or, because they have greater financial resources, their children may attend STEM camps and other co-curricular or extracurricular academic classes.
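One common way to probe a suspected confounder such as SES is to compare the groups within each level of that variable (stratification). The sketch below uses a tiny invented data set, built so that authoritative families are mostly high-SES; the record layout and the helper `mean_grade` are hypothetical. The raw comparison shows a gap, but within each SES level the gap disappears, which is the pattern we would expect if SES, not parenting style, drove the result.

```python
from statistics import mean

# Hypothetical records: (parenting_style, ses_level, grade). Invented, not real data.
records = (
    [("authoritative", "high", 90)] * 4 + [("authoritative", "low", 75)]
    + [("other", "high", 90)] + [("other", "low", 75)] * 4
)


def mean_grade(rows, style, ses=None):
    """Mean grade for one parenting style, optionally restricted to one SES level."""
    return mean(g for s, lvl, g in rows if s == style and (ses is None or lvl == ses))


# Raw comparison ignores SES entirely.
raw_gap = mean_grade(records, "authoritative") - mean_grade(records, "other")

# Stratified comparison: compare styles only among families with the same SES.
within_gaps = {
    lvl: mean_grade(records, "authoritative", lvl) - mean_grade(records, "other", lvl)
    for lvl in ("high", "low")
}

print(raw_gap, within_gaps)  # raw gap 9, within-stratum gaps 0
```

Stratification can only adjust for confounders that were actually measured; any unmeasured difference between the groups still threatens internal validity.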

4. Government Reforms and Economic Impact

Government policies can have a tremendous impact on economic development. Making it easier for small businesses to open and reducing bank loans are examples of policies that can have immediate results. So, a third-world country decided to test policy reforms in two mid-sized cities. One city received reforms directed at small businesses, while the other received reforms directed at luring foreign investment.

The government was careful to choose two cities that were similar in terms of size and population demographics.

Over the next five years, economic growth data were collected at the end of each fiscal year. The measures consisted of housing sales, local GDP, and unemployment rates.

At the end of five years, the results indicated that small business reforms had a much larger impact on economic growth than foreign investment reforms. The city that received small business reforms saw an increase in housing sales and GDP and a drop in unemployment. The other city saw stagnant sales and GDP and a slight increase in unemployment.

On the surface, it appears that small business reform is the better way to go. However, a more careful analysis revealed that the economic improvement observed in the one city was actually the result of two multinational real estate firms entering the market. The two firms specialize in converting dilapidated warehouses into shopping centers and residential properties.

5. Gender and Meditation

Meditation can help relieve stress and reduce symptoms of depression and anxiety. It is a simple, easy-to-use technique that just about anyone can try. However, are the benefits real, or do people just believe it helps? To find out, a team of counselors designed a study to put it to the test.

Because they believed that women are more likely to benefit than men, they recruited both males and females for their study.

Both groups were trained in meditation by a licensed professional over three weekends. Participants were instructed to practice at home at least four times a week for the next three months and to write in their journal each time they meditated.

At the end of the three months, physical and psychological health data were collected from all participants. For physical health, participants’ blood pressure was measured. For psychological health, participants filled out a happiness scale, and the emotional tone of their diaries was examined.

The results showed that meditation worked better for women than men. Women had lower blood pressure, scored higher on the happiness scale, and wrote more positive statements in their diaries.

Unfortunately, the researchers noticed that the men apparently did not practice meditation as often as instructed. They had very few journal entries, and in post-study interviews, a vast majority of the men admitted that they practiced meditation only about half the time.

The lack of practice is an extraneous variable. Perhaps if the men had followed the study instructions, their scores on the physical and psychological measures would have matched or even exceeded the women’s.

The quasi-experiment is used when researchers want to study the effects of a variable/treatment on different groups of people. Groups can be defined based on gender, parenting style, SES demographics, or any number of other variables.

The problem is that when interpreting the results, even clear differences between the groups cannot be confidently attributed to the treatment.

The groups may differ in ways other than the grouping variable. For example, the leadership training in the study above might have improved the textile start-ups’ performance if their leaders had been more willing to apply the techniques. Similarly, men may have benefited from meditation as much as women if they had actually practiced.

Baumrind, D. (1991). Parenting styles and adolescent development. In R. M. Lerner, A. C. Petersen, & J. Brooks-Gunn (Eds.), Encyclopedia of adolescence (pp. 746–758). New York: Garland Publishing.

Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design & analysis issues in field settings. Boston, MA: Houghton Mifflin.

Maciejewski, M. L. (2020). Quasi-experimental design. Biostatistics & Epidemiology, 4(1), 38–47. https://doi.org/10.1080/24709360.2018.1477468

Thyer, B. A. (2012). Quasi-experimental research designs. Oxford University Press. https://doi.org/10.1093/acprof:oso/9780195387384.001.0001


7.3 Quasi-Experimental Research

Learning Objectives

- Explain what quasi-experimental research is and distinguish it clearly from both experimental and correlational research.

- Describe three different types of quasi-experimental research designs (nonequivalent groups, pretest-posttest, and interrupted time series) and identify examples of each one.

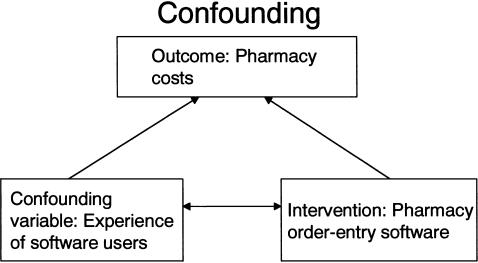

The prefix quasi means “resembling.” Thus quasi-experimental research is research that resembles experimental research but is not true experimental research. Although the independent variable is manipulated, participants are not randomly assigned to conditions or orders of conditions (Cook & Campbell, 1979). Because the independent variable is manipulated before the dependent variable is measured, quasi-experimental research eliminates the directionality problem. But because participants are not randomly assigned—making it likely that there are other differences between conditions—quasi-experimental research does not eliminate the problem of confounding variables. In terms of internal validity, therefore, quasi-experiments are generally somewhere between correlational studies and true experiments.

Quasi-experiments are most likely to be conducted in field settings in which random assignment is difficult or impossible. They are often conducted to evaluate the effectiveness of a treatment—perhaps a type of psychotherapy or an educational intervention. There are many different kinds of quasi-experiments, but we will discuss just a few of the most common ones here.

Nonequivalent Groups Design

Recall that when participants in a between-subjects experiment are randomly assigned to conditions, the resulting groups are likely to be quite similar. In fact, researchers consider them to be equivalent. When participants are not randomly assigned to conditions, however, the resulting groups are likely to be dissimilar in some ways. For this reason, researchers consider them to be nonequivalent. A nonequivalent groups design, then, is a between-subjects design in which participants have not been randomly assigned to conditions.

Imagine, for example, a researcher who wants to evaluate a new method of teaching fractions to third graders. One way would be to conduct a study with a treatment group consisting of one class of third-grade students and a control group consisting of another class of third-grade students. This would be a nonequivalent groups design because the students are not randomly assigned to classes by the researcher, which means there could be important differences between them. For example, the parents of higher achieving or more motivated students might have been more likely to request that their children be assigned to Ms. Williams’s class. Or the principal might have assigned the “troublemakers” to Mr. Jones’s class because he is a stronger disciplinarian. Of course, the teachers’ styles, and even the classroom environments, might be very different and might cause different levels of achievement or motivation among the students. If at the end of the study there was a difference in the two classes’ knowledge of fractions, it might have been caused by the difference between the teaching methods—but it might have been caused by any of these confounding variables.

Of course, researchers using a nonequivalent groups design can take steps to ensure that their groups are as similar as possible. In the present example, the researcher could try to select two classes at the same school, where the students in the two classes have similar scores on a standardized math test and the teachers are the same sex, are close in age, and have similar teaching styles. Taking such steps would increase the internal validity of the study because it would eliminate some of the most important confounding variables. But without true random assignment of the students to conditions, there remains the possibility of other important confounding variables that the researcher was not able to control.
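Checking that the two classes start out similar on the standardized math test is one concrete way to carry out the matching described above. A common summary is the standardized mean difference, here Cohen's d with a pooled standard deviation. The sketch below is a minimal illustration; the function name and the class scores are hypothetical. A value near zero suggests the classes are comparable on that one measured variable, but it says nothing about unmeasured differences such as motivation.

```python
from math import sqrt
from statistics import mean, stdev


def standardized_mean_difference(group_a, group_b):
    """Cohen's d: difference in means divided by the pooled standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * stdev(group_a) ** 2
                  + (n_b - 1) * stdev(group_b) ** 2) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical standardized-math-test scores for two intact third-grade classes.
williams_class = [72, 85, 78, 90, 66, 81, 74, 88]
jones_class = [70, 83, 75, 88, 69, 79, 71, 84]

d = standardized_mean_difference(williams_class, jones_class)
print(f"Pretest standardized mean difference: {d:.2f}")
```

The same check can be repeated for any other measured characteristic (age, prior grades) before the study begins.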

Pretest-Posttest Design

In a pretest-posttest design, the dependent variable is measured once before the treatment is implemented and once after it is implemented. Imagine, for example, a researcher who is interested in the effectiveness of an antidrug education program on elementary school students’ attitudes toward illegal drugs. The researcher could measure the attitudes of students at a particular elementary school during one week, implement the antidrug program during the next week, and finally, measure their attitudes again the following week. The pretest-posttest design is much like a within-subjects experiment in which each participant is tested first under the control condition and then under the treatment condition. It is unlike a within-subjects experiment, however, in that the order of conditions is not counterbalanced because it typically is not possible for a participant to be tested in the treatment condition first and then in an “untreated” control condition.

If the average posttest score is better than the average pretest score, then it makes sense to conclude that the treatment might be responsible for the improvement. Unfortunately, one often cannot conclude this with a high degree of certainty because there may be other explanations for why the posttest scores are better. One category of alternative explanations goes under the name of history. Other things might have happened between the pretest and the posttest. Perhaps an antidrug program aired on television and many of the students watched it, or perhaps a celebrity died of a drug overdose and many of the students heard about it. Another category of alternative explanations goes under the name of maturation. Participants might have changed between the pretest and the posttest in ways that they were going to anyway because they are growing and learning. If it were a yearlong program, participants might become less impulsive or better reasoners, and this might be responsible for the change.

Another alternative explanation for a change in the dependent variable in a pretest-posttest design is regression to the mean. This refers to the statistical fact that an individual who scores extremely on a variable on one occasion will tend to score less extremely on the next occasion. For example, a bowler with a long-term average of 150 who suddenly bowls a 220 will almost certainly score lower in the next game. Her score will “regress” toward her mean score of 150. Regression to the mean can be a problem when participants are selected for further study because of their extreme scores. Imagine, for example, that only students who scored especially low on a test of fractions are given a special training program and then retested. Regression to the mean all but guarantees that their scores will be higher even if the training program has no effect. A closely related concept, and an extremely important one in psychological research, is spontaneous remission. This is the tendency for many medical and psychological problems to improve over time without any form of treatment. The common cold is a good example. If one were to measure symptom severity in 100 common cold sufferers today, give them a bowl of chicken soup every day, and then measure their symptom severity again in a week, they would probably be much improved. This does not mean that the chicken soup was responsible for the improvement, however, because they would have been much improved without any treatment at all. The same is true of many psychological problems. A group of severely depressed people today is likely to be less depressed on average in 6 months. In reviewing the results of several studies of treatments for depression, researchers Michael Posternak and Ivan Miller found that participants in waitlist control conditions improved an average of 10 to 15% before they received any treatment at all (Posternak & Miller, 2001). Thus one must generally be very cautious about inferring causality from pretest-posttest designs.
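Regression to the mean is easy to reproduce in a simulation. The sketch below assumes, purely for illustration, that each observed test score equals a stable true ability plus independent random error, and that there is no training program at all. Students are selected because they scored in the bottom 10% on the first test, as in the fractions example; the function name and all parameters are hypothetical.

```python
import random
from statistics import mean


def regression_to_mean_demo(n=1000, cutoff=0.10, seed=42):
    """Simulate two tests of a stable ability with NO intervention in between.

    Hypothetical model: observed score = true ability + independent random error.
    The lowest-scoring `cutoff` fraction on test 1 is selected, and that group's
    mean on test 1 is returned alongside its mean on test 2.
    """
    rng = random.Random(seed)
    ability = [rng.gauss(50, 10) for _ in range(n)]
    test1 = [a + rng.gauss(0, 10) for a in ability]
    test2 = [a + rng.gauss(0, 10) for a in ability]

    # Select students purely because of their extreme (low) first score.
    k = int(n * cutoff)
    selected = sorted(range(n), key=lambda i: test1[i])[:k]

    return mean(test1[i] for i in selected), mean(test2[i] for i in selected)


before, after = regression_to_mean_demo()
print(f"Selected students: test 1 mean = {before:.1f}, test 2 mean = {after:.1f}")
```

The selected group's second-test mean is noticeably higher than its first-test mean even though nothing was done to the students. It still stays below the overall average of 50, because part of their low first score reflected genuinely lower ability rather than bad luck.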

Does Psychotherapy Work?

Early studies on the effectiveness of psychotherapy tended to use pretest-posttest designs. In a classic 1952 article, researcher Hans Eysenck summarized the results of 24 such studies showing that about two thirds of patients improved between the pretest and the posttest (Eysenck, 1952). But Eysenck also compared these results with archival data from state hospital and insurance company records showing that similar patients recovered at about the same rate without receiving psychotherapy. This suggested to Eysenck that the improvement that patients showed in the pretest-posttest studies might be no more than spontaneous remission. Note that Eysenck did not conclude that psychotherapy was ineffective. He merely concluded that there was no evidence that it was, and he wrote of “the necessity of properly planned and executed experimental studies into this important field” (p. 323). You can read the entire article here:

http://psychclassics.yorku.ca/Eysenck/psychotherapy.htm

Fortunately, many other researchers took up Eysenck’s challenge, and by 1980 hundreds of experiments had been conducted in which participants were randomly assigned to treatment and control conditions, and the results were summarized in a classic book by Mary Lee Smith, Gene Glass, and Thomas Miller (Smith, Glass, & Miller, 1980). They found that overall psychotherapy was quite effective, with about 80% of treatment participants improving more than the average control participant. Subsequent research has focused more on the conditions under which different types of psychotherapy are more or less effective.
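The “80%” figure can be translated into a standardized effect size under an assumption the passage does not state: that outcomes are normally distributed with equal variance in both groups and that treatment shifts the mean by d standard deviations. Under that assumption, the proportion of treatment participants scoring above the average control participant is Φ(d), the standard normal cumulative distribution function evaluated at d. A short check with Python's standard-library `statistics.NormalDist` (the helper names are our own):

```python
from statistics import NormalDist


def share_above_control_mean(d):
    """Share of treated participants above the control mean, assuming normal,
    equal-variance outcomes and a mean shift of d standard deviations."""
    return NormalDist().cdf(d)


def effect_size_for_share(share):
    """Inverse of share_above_control_mean: the d that produces a given share."""
    return NormalDist().inv_cdf(share)


d = effect_size_for_share(0.80)
print(f"An 80% share corresponds to d = {d:.2f}")  # prints d = 0.84
```

If the normality assumption holds, the summary in the text is consistent with an average treatment effect of a little more than eight-tenths of a standard deviation.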

In a classic 1952 article, researcher Hans Eysenck pointed out the shortcomings of the simple pretest-posttest design for evaluating the effectiveness of psychotherapy.

Wikimedia Commons – CC BY-SA 3.0.

Interrupted Time Series Design

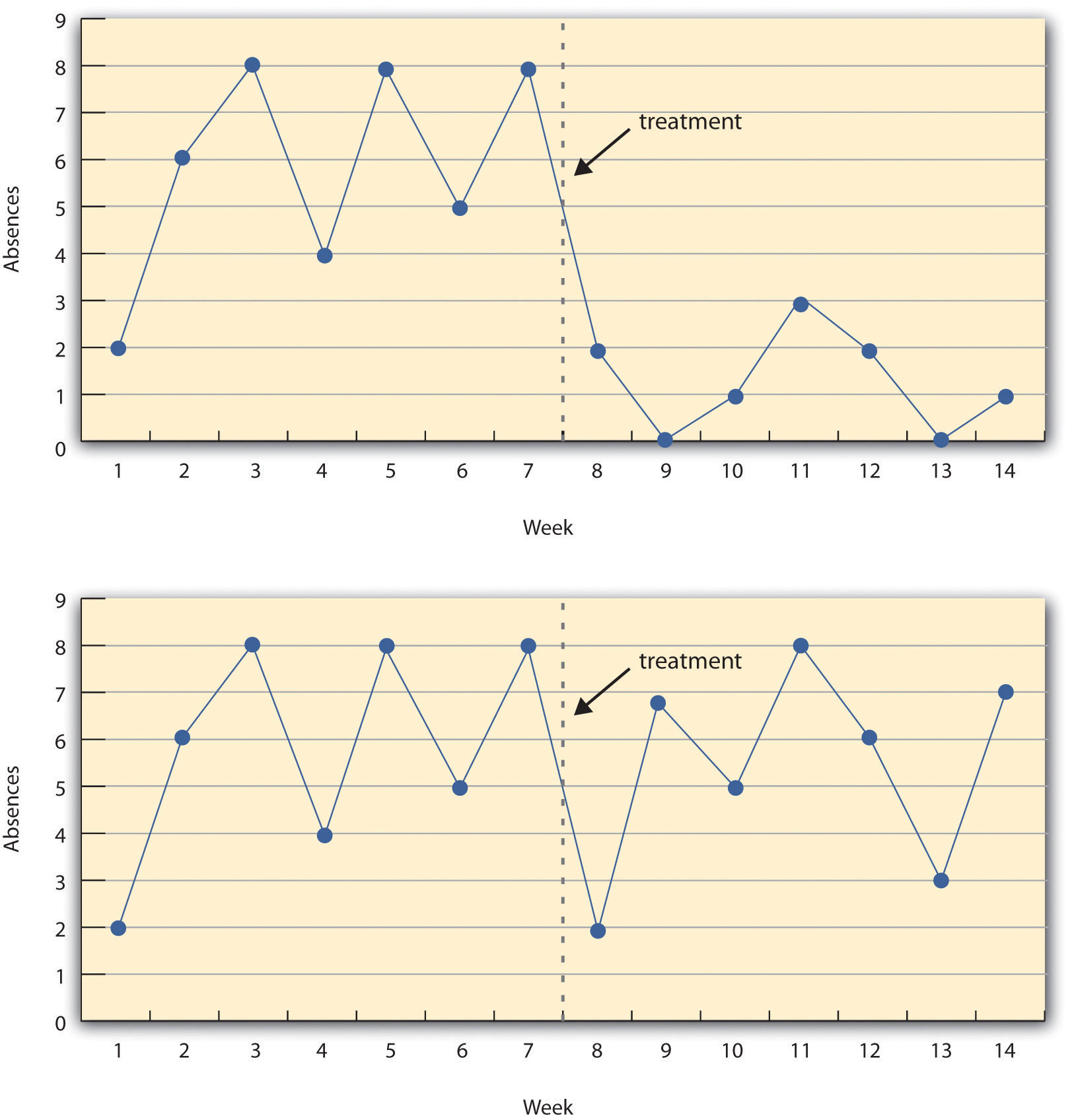

A variant of the pretest-posttest design is the interrupted time-series design. A time series is a set of measurements taken at intervals over a period of time. For example, a manufacturing company might measure its workers’ productivity each week for a year. In an interrupted time-series design, a time series like this is “interrupted” by a treatment. In one classic example, the treatment was the reduction of the work shifts in a factory from 10 hours to 8 hours (Cook & Campbell, 1979). Because productivity increased rather quickly after the shortening of the work shifts, and because it remained elevated for many months afterward, the researcher concluded that the shortening of the shifts caused the increase in productivity. Notice that the interrupted time-series design is like a pretest-posttest design in that it includes measurements of the dependent variable both before and after the treatment. It is unlike the pretest-posttest design, however, in that it includes multiple pretest and posttest measurements.

Figure 7.5 “A Hypothetical Interrupted Time-Series Design” shows data from a hypothetical interrupted time-series study. The dependent variable is the number of student absences per week in a research methods course. The treatment is that the instructor begins publicly taking attendance each day so that students know that the instructor is aware of who is present and who is absent. The top panel of Figure 7.5 “A Hypothetical Interrupted Time-Series Design” shows how the data might look if this treatment worked. There is a consistently high number of absences before the treatment, and there is an immediate and sustained drop in absences after the treatment. The bottom panel of Figure 7.5 “A Hypothetical Interrupted Time-Series Design” shows how the data might look if this treatment did not work. On average, the number of absences after the treatment is about the same as the number before. This figure also illustrates an advantage of the interrupted time-series design over a simpler pretest-posttest design. If there had been only one measurement of absences before the treatment at Week 7 and one afterward at Week 8, then it would have looked as though the treatment were responsible for the reduction. The multiple measurements both before and after the treatment suggest that the reduction between Weeks 7 and 8 is nothing more than normal week-to-week variation.
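The contrast between a single before-and-after pair and the full series can be made concrete. Figure 7.5’s actual values are not given in the text, so the weekly counts below are invented to resemble the bottom panel (no real effect), with the attendance policy starting at Week 8. In this sketch the Week 7 to Week 8 comparison shows a drop of 4 absences, while the average level barely changes relative to ordinary week-to-week variation.

```python
from statistics import mean, stdev

# Hypothetical weekly absence counts for Weeks 1-14 (invented for illustration).
# The treatment (publicly taking attendance) begins at Week 8.
absences = [4, 7, 5, 8, 4, 6, 8, 4, 7, 5, 6, 8, 5, 6]
treatment_start = 7  # 0-based index of Week 8

pre, post = absences[:treatment_start], absences[treatment_start:]

# What a simple pretest-posttest design would see: Week 7 vs. Week 8 only.
single_pair_change = absences[treatment_start] - absences[treatment_start - 1]

# What the full series shows: the change in average level, judged against
# the ordinary spread of the pre-treatment weeks.
level_change = mean(post) - mean(pre)
typical_weekly_spread = stdev(pre)

print(f"Week 7 -> Week 8 change: {single_pair_change}")
print(f"Mean change, all weeks:  {level_change:.2f}")
print(f"Pre-treatment SD:        {typical_weekly_spread:.2f}")
```

A fuller analysis would typically fit a segmented regression that estimates changes in both level and trend at the interruption; comparing means is only the simplest version of the same idea.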

Figure 7.5 A Hypothetical Interrupted Time-Series Design

The top panel shows data that suggest that the treatment caused a reduction in absences. The bottom panel shows data that suggest that it did not.

Combination Designs

A type of quasi-experimental design that is generally better than either the nonequivalent groups design or the pretest-posttest design is one that combines elements of both. There is a treatment group that is given a pretest, receives a treatment, and then is given a posttest. But at the same time there is a control group that is given a pretest, does not receive the treatment, and then is given a posttest. The question, then, is not simply whether participants who receive the treatment improve but whether they improve more than participants who do not receive the treatment.

Imagine, for example, that students in one school are given a pretest on their attitudes toward drugs, then are exposed to an antidrug program, and finally are given a posttest. Students in a similar school are given the pretest, not exposed to an antidrug program, and finally are given a posttest. Again, if students in the treatment condition become more negative toward drugs, this could be an effect of the treatment, but it could also be a matter of history or maturation. If it really is an effect of the treatment, then students in the treatment condition should become more negative than students in the control condition. But if it is a matter of history (e.g., news of a celebrity drug overdose) or maturation (e.g., improved reasoning), then students in the two conditions would be likely to show similar amounts of change. This type of design does not completely eliminate the possibility of confounding variables, however. Something could occur at one of the schools but not the other (e.g., a student drug overdose), so students at the first school would be affected by it while students at the other school would not.
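The logic of this combination design is often summarized as a difference in differences: the treatment group’s pretest-to-posttest change minus the control group’s change. The sketch below computes it for invented attitude scores (higher means more negative toward drugs); the function name and the data are hypothetical.

```python
from statistics import mean


def difference_in_differences(treat_pre, treat_post, control_pre, control_post):
    """Treatment group's mean change minus the control group's mean change.

    Any influence that shifts both groups by the same amount (for example,
    shared maturation) cancels out; an event at only one school does not.
    """
    treatment_change = mean(treat_post) - mean(treat_pre)
    control_change = mean(control_post) - mean(control_pre)
    return treatment_change - control_change


# Hypothetical attitude scores (higher = more negative toward drugs).
treatment_pre = [3.0, 3.2, 2.8, 3.0]
treatment_post = [4.0, 4.1, 3.9, 4.0]
control_pre = [3.1, 2.9, 3.0, 3.0]
control_post = [3.5, 3.4, 3.6, 3.5]

did = difference_in_differences(treatment_pre, treatment_post, control_pre, control_post)
print(f"Estimated treatment effect: {did:.2f}")
```

Here the treatment school’s mean rises by 1.0 point and the control school’s by 0.5, so only half of the raw improvement is attributed to the program. The estimate rests on the assumption that, without the program, both schools would have changed by the same amount.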

Finally, if participants in this kind of design are randomly assigned to conditions, it becomes a true experiment rather than a quasi-experiment. In fact, it is the kind of experiment that Eysenck called for, and that has now been conducted many times, to demonstrate the effectiveness of psychotherapy.

Key Takeaways

- Quasi-experimental research involves the manipulation of an independent variable without the random assignment of participants to conditions or orders of conditions. Among the important types are nonequivalent groups designs, pretest-posttest designs, and interrupted time-series designs.

- Quasi-experimental research eliminates the directionality problem because it involves the manipulation of the independent variable. It does not eliminate the problem of confounding variables, however, because it does not involve random assignment to conditions. For these reasons, quasi-experimental research is generally higher in internal validity than correlational studies but lower than true experiments.

- Practice: Imagine that two college professors decide to test the effect of giving daily quizzes on student performance in a statistics course. They decide that Professor A will give quizzes but Professor B will not. They will then compare the performance of students in their two sections on a common final exam. List five other variables that might differ between the two sections that could affect the results.

Discussion: Imagine that a group of obese children is recruited for a study in which their weight is measured, then they participate for 3 months in a program that encourages them to be more active, and finally their weight is measured again. Explain how each of the following might affect the results:

- regression to the mean

- spontaneous remission

Cook, T. D., & Campbell, D. T. (1979). Quasi-experimentation: Design & analysis issues in field settings. Boston, MA: Houghton Mifflin.

Eysenck, H. J. (1952). The effects of psychotherapy: An evaluation. Journal of Consulting Psychology, 16, 319–324.

Posternak, M. A., & Miller, I. (2001). Untreated short-term course of major depression: A meta-analysis of outcomes from studies using wait-list control groups. Journal of Affective Disorders, 66, 139–146.

Smith, M. L., Glass, G. V., & Miller, T. I. (1980). The benefits of psychotherapy. Baltimore, MD: Johns Hopkins University Press.

Research Methods in Psychology Copyright © 2016 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

Quasi-Experimental Design: Definition, Types, Examples

Appinio Research · 19.12.2023 · 37min read

Ever wondered how researchers uncover cause-and-effect relationships in the real world, where controlled experiments are often impractical? Quasi-experimental design is one of the main tools they use. In this guide, we'll cover the definition, purpose, and applications of quasi-experimental design across various domains. Whether you're a student, a professional, or simply curious about the methods behind meaningful research, we aim to make its complex concepts simple.

What is Quasi-Experimental Design?

Quasi-experimental design is a research methodology used to study the effects of independent variables on dependent variables when full experimental control is not possible or ethical. It falls between controlled experiments, where variables are tightly controlled, and purely observational studies, where researchers have little control over variables. Quasi-experimental design mimics some aspects of experimental research but lacks randomization.

The primary purpose of quasi-experimental design is to investigate cause-and-effect relationships between variables in real-world settings. Researchers use this approach to answer research questions, test hypotheses, and explore the impact of interventions or treatments when they cannot employ traditional experimental methods. Quasi-experimental studies aim to maximize internal validity and make meaningful inferences while acknowledging practical constraints and ethical considerations.

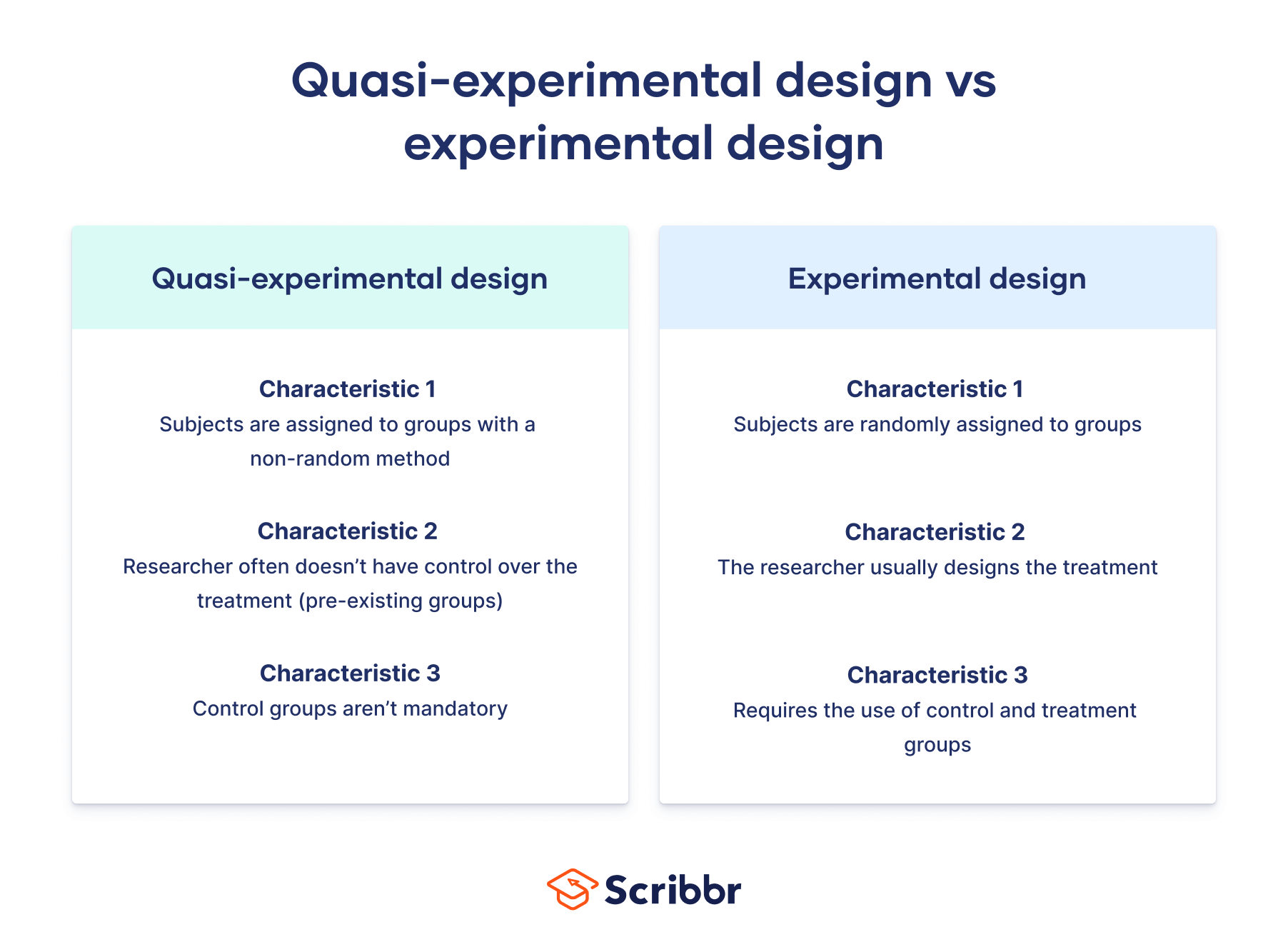

Quasi-Experimental vs. Experimental Design

It's essential to understand the distinctions between quasi-experimental and experimental design to appreciate the unique characteristics of each approach:

- Randomization: In experimental design, random assignment of participants to groups is a defining feature. Quasi-experimental design, on the other hand, lacks randomization due to practical constraints or ethical considerations.

- Control Groups: Experimental design typically includes control groups that receive no treatment or a placebo. Quasi-experimental design may include comparison groups, but they lack the same level of control.

- Manipulation of IV: Experimental design involves the intentional manipulation of the independent variable. Quasi-experimental design often deals with naturally occurring independent variables.

- Causal Inference: Experimental design allows for stronger causal inferences due to randomization and control. Quasi-experimental design permits causal inferences, but with some limitations.

When to Use Quasi-Experimental Design?

A quasi-experimental design is particularly valuable in several situations:

- Ethical Constraints: When manipulating the independent variable is ethically unacceptable or impractical, quasi-experimental design offers an alternative: studying variables as they occur naturally.

- Real-World Settings: When researchers want to study phenomena in real-world contexts, quasi-experimental design allows them to do so without artificial laboratory settings.

- Limited Resources: In cases where resources are limited and conducting a controlled experiment is cost-prohibitive, quasi-experimental design can provide valuable insights.

- Policy and Program Evaluation: Quasi-experimental design is commonly used in evaluating the effectiveness of policies, interventions, or programs that cannot be randomly assigned to participants.

Importance of Quasi-Experimental Design in Research

Quasi-experimental design plays a vital role in research for several reasons:

- Addressing Real-World Complexities: It allows researchers to tackle complex real-world issues where controlled experiments are not feasible. This bridges the gap between controlled experiments and purely observational studies.

- Ethical Research: It provides an ethical approach when manipulating variables or assigning treatments could harm participants or violate ethical standards.

- Policy and Practice Implications: Quasi-experimental studies generate findings with direct applications in policy-making and practical solutions in fields such as education, healthcare, and social sciences.

- Enhanced External Validity: Because quasi-experimental research is usually conducted in natural settings, its findings can have higher external validity than laboratory experiments, making them more applicable to broader populations and contexts.

By embracing the challenges and opportunities of quasi-experimental design, researchers can contribute valuable insights to their respective fields and drive positive changes in the real world.

Key Concepts in Quasi-Experimental Design

In quasi-experimental design, it's essential to grasp the fundamental concepts underpinning this research methodology. Let's explore these key concepts in detail.

Independent Variable

The independent variable (IV) is the factor you aim to study or manipulate in your research. Unlike controlled experiments, where you can directly manipulate the IV, quasi-experimental design often deals with naturally occurring variables. For example, if you're investigating the impact of a new teaching method on student performance, the teaching method is your independent variable.

Dependent Variable

The dependent variable (DV) is the outcome or response you measure to assess the effects of changes in the independent variable. Continuing with the teaching method example, the dependent variable would be the students' academic performance, typically measured using test scores, grades, or other relevant metrics.

Control Groups vs. Comparison Groups

While quasi-experimental design lacks the luxury of randomly assigning participants to control and experimental groups, you can still establish comparison groups to make meaningful inferences. In a true experiment, the control group is formed by random assignment and does not receive the treatment. In a quasi-experiment, its counterpart is a comparison group: a pre-existing group that does not receive the treatment (or receives a different level or variation of it) and was not randomly assigned. Comparing the treatment group with such a group helps researchers gauge the effect of the independent variable.

Pre-Test and Post-Test Measures

In quasi-experimental design, it's common practice to collect data both before and after implementing the independent variable. The initial data (pre-test) serves as a baseline, allowing you to measure changes over time (post-test). This approach helps assess the impact of the independent variable more accurately. For instance, if you're studying the effectiveness of a new drug, you'd measure patients' health before administering the drug (pre-test) and afterward (post-test).

Threats to Internal Validity

Internal validity is crucial for establishing a cause-and-effect relationship between the independent and dependent variables. However, in a quasi-experimental design, several threats can compromise internal validity. These threats include:

- Selection Bias: When non-randomized groups differ systematically in ways that affect the study's outcome.

- History Effects: External events or changes over time that influence the results.

- Maturation Effects: Natural changes or developments that occur within participants during the study.

- Regression to the Mean: The tendency for extreme scores on a variable to move closer to the mean upon retesting.

- Attrition and Mortality: The loss of participants over time, potentially skewing the results.

- Testing Effects: The mere act of testing or assessing participants can impact their subsequent performance.

Understanding these threats is essential for designing and conducting quasi-experimental studies that yield valid and reliable results.

Randomization and Non-Randomization

In traditional experimental designs, randomization is a powerful tool for ensuring that groups are equivalent at the outset of a study. However, quasi-experimental design often involves non-randomization due to the nature of the research. This means that participants are not randomly assigned to treatment and control groups. Instead, researchers must employ various techniques to minimize biases and ensure that the groups are as similar as possible.

For example, if you are conducting a study on the effects of a new teaching method in a real classroom setting, you cannot randomly assign students to the treatment and control groups. Instead, you might use statistical methods to match students based on relevant characteristics such as prior academic performance or socioeconomic status. This matching helps control for potential confounding variables and strengthens internal validity, although it can only balance characteristics that were actually measured.
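As a rough illustration of the matching step, the Python sketch below pairs each treated student with the comparison student whose prior test score is closest, using greedy one-to-one matching without replacement. The student IDs, scores, and the 5-point caliper (the largest score difference allowed for a match) are hypothetical assumptions, not values from any real study.

```python
# Hypothetical data: (student_id, prior_test_score)
treated = [("t1", 72), ("t2", 85), ("t3", 64)]
comparison = [("c1", 70), ("c2", 90), ("c3", 66), ("c4", 84), ("c5", 55)]


def nearest_neighbor_match(treated, comparison, caliper=5.0):
    """Greedy 1:1 matching without replacement on a single covariate.

    Each treated unit is paired with the closest still-unmatched comparison
    unit, provided the difference does not exceed the caliper.
    """
    available = list(comparison)
    pairs = []
    for t_id, t_score in treated:
        if not available:
            break
        best = min(available, key=lambda c: abs(c[1] - t_score))
        if abs(best[1] - t_score) <= caliper:
            pairs.append((t_id, best[0]))
            available.remove(best)  # without replacement: each comparison unit used once
    return pairs


pairs = nearest_neighbor_match(treated, comparison)
print(pairs)  # [('t1', 'c1'), ('t2', 'c4'), ('t3', 'c3')]
```

Greedy matching depends on the order in which treated units are processed; optimal matching and matching on several covariates at once are common refinements.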

Types of Quasi-Experimental Designs

In quasi-experimental design, researchers employ various approaches to investigate causal relationships and study the effects of independent variables when complete experimental control is challenging. Let's explore these types of quasi-experimental designs.

One-Group Posttest-Only Design

The One-Group Posttest-Only Design is one of the simplest forms of quasi-experimental design. In this design, a single group is exposed to the independent variable, and data is collected only after the intervention has taken place. There is no pre-test and no comparison group, so this design offers the weakest basis for causal inference. It is used mainly when administering a pre-test is impossible or logistically difficult.

Example: Suppose you want to assess the effectiveness of a new time management seminar. You offer the seminar to a group of employees and measure their productivity immediately afterward. Without a baseline or comparison group, you can describe post-seminar productivity but cannot confidently attribute it to the seminar.

One-Group Pretest-Posttest Design

This design extends the One-Group Posttest-Only Design by adding a pre-test: researchers collect data from the same group both before and after the intervention. Comparing the pre-test and post-test results shows how much the group changed, although without a comparison group the change could also reflect history, maturation, testing effects, or regression to the mean.

Example: If you're studying the impact of a stress management program on participants' stress levels, you would measure their stress levels before the program (pre-test) and after completing the program (post-test) to assess any changes.
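A common way to analyze a one-group pretest-posttest design is a paired t-test on each participant's change score. The minimal Python sketch below computes the mean change and the paired t statistic using only the standard library; the stress scores are hypothetical, and converting t into a p-value would require a t-distribution function from a statistics package.

```python
import math
import statistics

# Hypothetical stress scores for the same six participants (lower = less stress)
pre = [62, 70, 58, 75, 66, 71]
post = [55, 64, 57, 68, 60, 65]


def paired_t(pre, post):
    """Return (mean change, t statistic, degrees of freedom) for paired data."""
    diffs = [after - before for before, after in zip(pre, post)]
    mean_diff = statistics.mean(diffs)
    se = statistics.stdev(diffs) / math.sqrt(len(diffs))  # standard error of the mean change
    return mean_diff, mean_diff / se, len(diffs) - 1


mean_diff, t, df = paired_t(pre, post)
print(f"mean change = {mean_diff:.2f}, t({df}) = {t:.2f}")  # mean change = -5.50, t(5) = -5.97
```

Even a large t only shows that scores changed; without a comparison group, the change cannot be attributed to the program alone.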

Non-Equivalent Groups Design

The Non-Equivalent Groups Design involves multiple groups that are not randomly assigned. Instead, researchers match the groups on, or statistically control for, relevant variables to minimize biases. This design is particularly useful when random assignment is not possible or ethical.

Example: Imagine you're examining the effectiveness of two teaching methods in two different schools. You can't randomly assign students to the schools, but you can match them on factors like age, prior academic performance, and socioeconomic status so that the groups are as comparable as possible.

Time Series Design

Time Series Design is an approach where data is collected at multiple time points before and after the intervention. This design allows researchers to analyze trends and patterns over time, providing valuable insights into the sustained effects of the independent variable.

Example: If you're studying the impact of a new marketing campaign on product sales, you would collect sales data at regular intervals (e.g., monthly) before and after the campaign's launch to observe any long-term trends.

Regression Discontinuity Design

Regression Discontinuity Design is employed when participants are assigned to different groups based on whether they fall above or below a specific cutoff score or threshold. Because participants just above and just below the cutoff are likely to be similar in other respects, comparing their outcomes estimates the intervention's effect for people near the cutoff. This design is often used in educational and policy research.

Example: Suppose you're evaluating the impact of a scholarship program on students' academic performance, and students with a GPA at or above a set threshold receive the scholarship while those below it do not. Comparing the later performance of students just above and just below the threshold estimates the program's effect at the cutoff point.
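To make the cutoff logic concrete, here is a minimal local-linear sketch in Python: it fits a separate straight line to participants within a bandwidth on each side of the cutoff and reads off the jump between the two lines at the cutoff. The eligibility score (used instead of GPA for simplicity), the cutoff of 70, the bandwidth of 5, and the noise-free outcomes built with a true jump of 6 points are all hypothetical. Real analyses use noisy data, kernel weights, data-driven bandwidth selection, and robust standard errors.

```python
def ols(xs, ys):
    """Simple least-squares line; returns (intercept, slope)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def rdd_estimate(running, outcome, cutoff, bandwidth):
    """Difference between the right- and left-side fitted values at the cutoff."""
    # Center the running variable so each line's intercept is its value at the cutoff.
    left = [(r - cutoff, y) for r, y in zip(running, outcome)
            if cutoff - bandwidth <= r < cutoff]
    right = [(r - cutoff, y) for r, y in zip(running, outcome)
             if cutoff <= r <= cutoff + bandwidth]
    left_at_cutoff, _ = ols(*zip(*left))
    right_at_cutoff, _ = ols(*zip(*right))
    return right_at_cutoff - left_at_cutoff


CUTOFF, BANDWIDTH = 70, 5
scores = list(range(55, 86))  # hypothetical eligibility scores
# Noise-free outcomes: a smooth trend plus a 6-point jump at or above the cutoff.
outcomes = [40 + 0.5 * s + (6 if s >= CUTOFF else 0) for s in scores]

print(rdd_estimate(scores, outcomes, CUTOFF, BANDWIDTH))  # 6.0
```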

Propensity Score Matching

Propensity Score Matching is a technique used to create comparable treatment and control groups in non-randomized studies. A propensity score is a participant's estimated probability of receiving the treatment given their observed characteristics, typically estimated with logistic regression. Researchers then match individuals in the treatment group to those in the control group with similar scores.

Example: If you're studying the effects of a new medication on patient outcomes, you would use propensity scores to match patients who received the medication with patients who did not but have similar health profiles.
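The Python sketch below illustrates both steps under simplifying assumptions: it estimates propensity scores with a logistic regression fitted by plain gradient descent on two hypothetical covariates (age and a severity score), then performs greedy one-to-one nearest-neighbor matching within a caliper. The patient records, learning rate, number of iterations, and caliper of 0.1 are illustrative choices; in practice you would fit the model with an established statistics package and check covariate balance after matching.

```python
import math

# Hypothetical records: (patient_id, received_medication, age, severity_score)
patients = [
    ("p1", 1, 64, 3), ("p2", 1, 71, 4), ("p3", 1, 58, 2), ("p4", 1, 69, 5),
    ("p5", 0, 60, 2), ("p6", 0, 73, 4), ("p7", 0, 55, 1), ("p8", 0, 66, 3),
    ("p9", 0, 70, 5), ("p10", 0, 50, 1),
]


def standardize(rows):
    """Rescale each covariate to mean 0 and SD 1 so gradient descent behaves well."""
    scaled_cols = []
    for col in zip(*rows):
        mean = sum(col) / len(col)
        sd = math.sqrt(sum((v - mean) ** 2 for v in col) / len(col))
        scaled_cols.append([(v - mean) / sd for v in col])
    return [list(row) for row in zip(*scaled_cols)]


def predict(w, x):
    """Logistic model: estimated probability of treatment given covariates x."""
    z = w[0] + sum(wj * xj for wj, xj in zip(w[1:], x))
    return 1 / (1 + math.exp(-z))


def fit_logistic(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on the logistic log-loss; w[0] is the intercept."""
    w = [0.0] * (len(X[0]) + 1)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, target in zip(X, y):
            err = predict(w, x) - target
            grad[0] += err
            for j, xj in enumerate(x, start=1):
                grad[j] += err * xj
        w = [wj - lr * g / len(X) for wj, g in zip(w, grad)]
    return w


X = standardize([(age, sev) for _, _, age, sev in patients])
y = [treated for _, treated, _, _ in patients]
w = fit_logistic(X, y)
scores = {p[0]: predict(w, x) for p, x in zip(patients, X)}

# Greedy 1:1 nearest-neighbor matching on the propensity score, without replacement.
CALIPER = 0.1  # illustrative maximum allowed score difference
available = [p[0] for p in patients if p[1] == 0]
pairs = []
for pid, treated, _, _ in patients:
    if not treated or not available:
        continue
    best = min(available, key=lambda c: abs(scores[c] - scores[pid]))
    if abs(scores[best] - scores[pid]) <= CALIPER:
        pairs.append((pid, best))
        available.remove(best)

print(pairs)
```

Treated patients with no control inside the caliper are left unmatched, which protects comparability but shrinks the sample the estimate is based on.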

Interrupted Time Series Design

The Interrupted Time Series Design involves collecting data at multiple time points before and after the introduction of an intervention. However, in this design, the intervention occurs at a specific point in time, allowing researchers to assess its immediate impact.

Example: Let's say you're analyzing the effects of a new traffic management system on traffic accidents. You collect accident data before and after the system's implementation to observe any abrupt changes right after its introduction.
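An interrupted time series is often analyzed with segmented regression. The minimal Python sketch below fits one trend line to the months before the intervention and another to the months after, which gives the same point estimates as a segmented regression with level-change and slope-change terms. It reports the immediate level change (the gap between the new line and the projected old trend at the intervention month) and the change in slope. The monthly accident counts are hypothetical and noise-free, built with a true drop of 15 accidents and no change in trend; a real analysis would also need to handle autocorrelation and seasonality.

```python
def ols(xs, ys):
    """Simple least-squares line; returns (intercept, slope)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return my - slope * mx, slope


def interrupted_time_series(times, values, start):
    """Estimate (level change, slope change) at the intervention time `start`."""
    pre = [(t, v) for t, v in zip(times, values) if t < start]
    post = [(t, v) for t, v in zip(times, values) if t >= start]
    a_pre, b_pre = ols(*zip(*pre))
    a_post, b_post = ols(*zip(*post))
    projected = a_pre + b_pre * start   # where the old trend would have been
    observed = a_post + b_post * start  # where the new trend starts
    return observed - projected, b_post - b_pre


months = list(range(1, 25))  # hypothetical months 1-24; system introduced in month 13
accidents = [100 - 0.5 * m - (15 if m >= 13 else 0) for m in months]

level_change, slope_change = interrupted_time_series(months, accidents, start=13)
print(level_change, slope_change)  # -15.0 0.0
```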

Each of these quasi-experimental designs offers unique advantages and is best suited to specific research questions and scenarios. Choosing the right design is crucial for conducting robust and informative studies.

Advantages and Disadvantages of Quasi-Experimental Design

Quasi-experimental design offers a valuable research approach, but like any methodology, it comes with its own set of advantages and disadvantages. Let's explore these in detail.

Quasi-Experimental Design Advantages

Quasi-experimental design presents several advantages that make it a valuable tool in research:

- Real-World Applicability: Quasi-experimental studies often take place in real-world settings, making the findings more applicable to practical situations. Researchers can examine the effects of interventions or variables in the context where they naturally occur.

- Ethical Considerations: In situations where manipulating the independent variable in a controlled experiment would be unethical, quasi-experimental design provides an ethical alternative. For example, it would be unethical to assign participants to smoke for a study on the health effects of smoking, but you can study naturally occurring groups of smokers and non-smokers.

- Cost-Efficiency: Conducting quasi-experimental research is often more cost-effective than conducting controlled experiments. The absence of controlled environments and extensive manipulations can save both time and resources.

These advantages make quasi-experimental design an attractive choice for researchers facing practical or ethical constraints in their studies.

Quasi-Experimental Design Disadvantages

However, quasi-experimental design also comes with its share of challenges and disadvantages:

- Limited Control: Unlike controlled experiments, where researchers have full control over variables, quasi-experimental design lacks the same level of control. This limited control can result in confounding variables that make it difficult to establish causality.

- Threats to Internal Validity: Various threats to internal validity, such as selection bias, history effects, and maturation effects, can compromise the accuracy of causal inferences. Researchers must carefully address these threats to ensure the validity of their findings.

- Causality Inference Challenges: Establishing causality can be challenging in quasi-experimental design due to the absence of randomization and control. While you can make strong arguments for causality, it may not be as conclusive as in controlled experiments.

- Potential Confounding Variables: In a quasi-experimental design, it's often challenging to control for all possible confounding variables that may affect the dependent variable. This can lead to uncertainty in attributing changes solely to the independent variable.

Despite these disadvantages, quasi-experimental design remains a valuable research tool when used judiciously and with a keen awareness of its limitations. Researchers should carefully consider their research questions and the practical constraints they face before choosing this approach.

How to Conduct a Quasi-Experimental Study?

Conducting a quasi-experimental study requires careful planning and execution to ensure the validity of your research. Let's dive into the essential steps you need to follow when conducting such a study.

1. Define Research Questions and Objectives

The first step in any research endeavor is clearly defining your research questions and objectives. This involves identifying the independent variable (IV) and the dependent variable (DV) you want to study. What is the specific relationship you want to explore, and what do you aim to achieve with your research?

- Specify Your Research Questions: Start by formulating precise research questions that your study aims to answer. These questions should be clear, focused, and relevant to your field of study.

- Identify the Independent Variable: Define the variable you intend to manipulate or study in your research. Understand its significance in your study's context.

- Determine the Dependent Variable: Identify the outcome or response variable that will be affected by changes in the independent variable.

- Establish Hypotheses (If Applicable): If you have specific hypotheses about the relationship between the IV and DV, state them clearly. Hypotheses provide a framework for testing your research questions.

2. Select the Appropriate Quasi-Experimental Design

Choosing the right quasi-experimental design is crucial for achieving your research objectives. Select a design that aligns with your research questions and the available data. Consider factors such as the feasibility of implementing the design and the ethical considerations involved.

- Evaluate Your Research Goals: Assess your research questions and objectives to determine which type of quasi-experimental design is most suitable. Each design has its strengths and limitations, so choose one that aligns with your goals.

- Consider Ethical Constraints: Take into account any ethical concerns related to your research. Depending on your study's context, some designs may be more ethically sound than others.

- Assess Data Availability: Ensure you have access to the necessary data for your chosen design. Some designs may require extensive historical data, while others may rely on data collected during the study.

3. Identify and Recruit Participants

Selecting the right participants is a critical aspect of quasi-experimental research. The participants should represent the population you want to make inferences about, and you must address ethical considerations, including informed consent.

- Define Your Target Population: Determine the population that your study aims to generalize to. Your sample should be representative of this population.

- Recruitment Process: Develop a plan for recruiting participants. Depending on your design, you may need to reach out to specific groups or institutions.

- Informed Consent: Ensure that you obtain informed consent from participants. Clearly explain the nature of the study, potential risks, and their rights as participants.

4. Collect Data

Data collection is a crucial step in quasi-experimental research. You must adhere to a consistent and systematic process to gather relevant information before and after the intervention or treatment.

- Pre-Test Measures: If applicable, collect data before introducing the independent variable. Ensure that the pre-test measures are standardized and reliable.

- Post-Test Measures: After the intervention, collect post-test data using the same measures as the pre-test. This allows you to assess changes over time.

- Maintain Data Consistency: Ensure that data collection procedures are consistent across all participants and time points to minimize biases.

5. Analyze Data

Once you've collected your data, it's time to analyze it using appropriate statistical techniques. The choice of analysis depends on your research questions and the type of data you've gathered.

- Statistical Analysis: Use statistical software to analyze your data. Common techniques include t-tests, analysis of variance (ANOVA), regression analysis, and more, depending on the design and variables.

- Control for Confounding Variables: Identify potential confounding variables and include them in your analysis as covariates. This reduces bias from the confounders you measured, but it cannot adjust for ones you did not measure.
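For a pre-test/post-test design with a comparison group, one common option is analysis of covariance (ANCOVA) with the pre-test as the covariate. The Python sketch below computes the ANCOVA-adjusted group difference by hand for two groups and one covariate: the raw difference in post-test means minus the pooled within-group slope times the difference in pre-test means. The scores are hypothetical and constructed so the true adjusted effect is 5 points; in practice you would fit this as a regression in a statistics package to also obtain standard errors.

```python
from statistics import mean


def centered_sums(xs, ys):
    """Return (Sxx, Sxy): sums of squares and cross-products around the group means."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxx, sxy


def ancova_adjusted_difference(pre_t, post_t, pre_c, post_c):
    """Treatment-minus-comparison difference in post-test means, adjusted for pre-test."""
    sxx_t, sxy_t = centered_sums(pre_t, post_t)
    sxx_c, sxy_c = centered_sums(pre_c, post_c)
    pooled_slope = (sxy_t + sxy_c) / (sxx_t + sxx_c)  # common within-group slope
    raw_diff = mean(post_t) - mean(post_c)
    return raw_diff - pooled_slope * (mean(pre_t) - mean(pre_c))


# Hypothetical test scores: the treatment group started out 10 points higher.
pre_t, post_t = [60, 65, 70, 75], [63, 67, 71, 75]
pre_c, post_c = [50, 55, 60, 65], [50, 54, 58, 62]

print(ancova_adjusted_difference(pre_t, post_t, pre_c, post_c))  # 5.0
```

The raw post-test gap is 13 points, but 8 of those points are explained by the treatment group's higher starting scores. This adjustment assumes the pre-test relates to the post-test with the same slope in both groups.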


6. Interpret Results

With the analysis complete, you can interpret the results to draw meaningful conclusions about the relationship between the independent and dependent variables.

- Examine Effect Sizes: Assess the magnitude of the observed effects to determine their practical significance.

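- Consider Significance Levels: Determine whether the observed results are statistically significant, and interpret p-values carefully: a p-value is the probability of obtaining results at least as extreme as yours if the null hypothesis were true, not the probability that your hypothesis is correct.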

- Compare Findings to Hypotheses: Evaluate whether your findings support your hypotheses and answer your research questions.
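Cohen's d is one widely used effect size for the difference between two group means: the difference divided by the pooled standard deviation. The Python sketch below computes it for two small sets of hypothetical post-test scores. Cohen's conventional benchmarks (about 0.2 small, 0.5 medium, 0.8 large) are rough guides; practical significance should be judged in the context of your field.

```python
import math
import statistics


def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * statistics.variance(group_a)
                  + (n_b - 1) * statistics.variance(group_b)) / (n_a + n_b - 2)
    return (statistics.mean(group_a) - statistics.mean(group_b)) / math.sqrt(pooled_var)


treatment_scores = [78, 82, 85, 88, 90]   # hypothetical post-test scores
comparison_scores = [70, 75, 78, 80, 84]

d = cohens_d(treatment_scores, comparison_scores)
print(round(d, 2))  # 1.43
```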

7. Draw Conclusions

Based on your analysis and interpretation of the results, draw conclusions about the research questions and objectives you set out to address.

- Causal Inferences: Discuss the extent to which your study allows for causal inferences. Be transparent about the limitations and potential alternative explanations for your findings.

- Implications and Applications: Consider the practical implications of your research. How do your findings contribute to existing knowledge, and how can they be applied in real-world contexts?

- Future Research: Identify areas for future research and potential improvements in study design. Highlight any limitations or constraints that may have affected your study's outcomes.

By following these steps meticulously, you can conduct a rigorous and informative quasi-experimental study that advances knowledge in your field of research.

Quasi-Experimental Design Examples

Quasi-experimental design finds applications in a wide range of research domains, including business-related and market research scenarios. Below, we delve into some detailed examples of how this research methodology is employed in practice:

Example 1: Assessing the Impact of a New Marketing Strategy

Suppose a company wants to evaluate the effectiveness of a new marketing strategy aimed at boosting sales. Conducting a controlled experiment may not be feasible due to the company's existing customer base and the challenge of randomly assigning customers to different marketing approaches. In this scenario, a quasi-experimental design can be employed.

- Independent Variable: The new marketing strategy.

- Dependent Variable: Sales revenue.

- Design: The company could implement the new strategy for one group of customers while maintaining the existing strategy for another group. Both groups are selected based on similar demographics and purchase history, reducing selection bias. Pre-implementation data (sales records) can serve as the baseline, and post-implementation data can be collected to assess the strategy's impact.

Example 2: Evaluating the Effectiveness of Employee Training Programs

In the context of human resources and employee development, organizations often seek to evaluate the impact of training programs. A randomized controlled trial (RCT) with random assignment may not be practical or ethical, as some employees may need specific training more than others. Instead, a quasi-experimental design can be employed.

- Independent Variable: Employee training programs.

- Dependent Variable: Employee performance metrics, such as productivity or quality of work.

- Design: The organization can offer training programs to employees who express interest or demonstrate specific needs, creating a self-selected treatment group. A comparable control group can consist of employees with similar job roles and qualifications who did not receive the training. Because employees chose to take part, they may differ from the control group in motivation or ability, which makes pre-training performance metrics an essential baseline; post-training data can then be collected to assess the impact of the training programs.

Example 3: Analyzing the Effects of a Tax Policy Change

In economics and public policy, researchers often examine the effects of tax policy changes on economic behavior. Conducting a controlled experiment in such cases is practically impossible. Therefore, a quasi-experimental design is commonly employed.

- Independent Variable: Tax policy changes (e.g., tax rate adjustments).

- Dependent Variable: Economic indicators, such as consumer spending or business investments.

- Design: Researchers can analyze data from different regions or jurisdictions where tax policy changes have been implemented. One region could represent the treatment group (with tax policy changes), while a similar region with no tax policy changes serves as the control group. By comparing economic data before and after the policy change in both groups, researchers can assess the impact of the tax policy changes.
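Comparing the before-and-after change across both regions is usually called a difference-in-differences (DiD) estimate: the change in the treatment region minus the change in the comparison region. The same arithmetic applies to the marketing example above, with customer groups in place of regions. The Python sketch below uses a hypothetical consumer-spending index; the estimate is only credible under the parallel-trends assumption, that is, if both regions would have followed the same trend without the policy change.

```python
from statistics import mean

# Hypothetical consumer-spending index, three quarters before and after the tax change
spending = {
    ("treated", "before"): [100, 102, 98],
    ("treated", "after"): [110, 113, 113],
    ("comparison", "before"): [95, 96, 94],
    ("comparison", "after"): [100, 102, 101],
}


def diff_in_diff(data):
    """(after - before) in the treated group minus (after - before) in the comparison group."""
    treated_change = mean(data[("treated", "after")]) - mean(data[("treated", "before")])
    comparison_change = mean(data[("comparison", "after")]) - mean(data[("comparison", "before")])
    return treated_change - comparison_change


print(diff_in_diff(spending))  # 6
```

Spending rose by 12 points in the treated region but also by 6 points in the comparison region, so only the remaining 6 points are attributed to the policy.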

These examples illustrate how quasi-experimental design can be applied in various research contexts, providing valuable insights into the effects of independent variables in real-world scenarios where controlled experiments are not feasible or ethical. By carefully selecting comparison groups and controlling for potential biases, researchers can draw meaningful conclusions and inform decision-making processes.

How to Publish Quasi-Experimental Research?

Publishing your quasi-experimental research findings is a crucial step in contributing to the academic community's knowledge. We'll explore the essential aspects of reporting and publishing your quasi-experimental research effectively.

Structuring Your Research Paper

When preparing your research paper, it's essential to adhere to a well-structured format to ensure clarity and comprehensibility. Here are key elements to include:

Title and Abstract

- Title: Craft a concise and informative title that reflects the essence of your study. It should capture the main research question or hypothesis.

- Abstract: Summarize your research in a structured abstract, including the purpose, methods, results, and conclusions. Ensure it provides a clear overview of your study.

Introduction

- Background and Rationale: Provide context for your study by discussing the research gap or problem your study addresses. Explain why your research is relevant and essential.

- Research Questions or Hypotheses: Clearly state your research questions or hypotheses and their significance.

Literature Review

- Review of Related Work: Discuss relevant literature that supports your research. Highlight studies with similar methodologies or findings and explain how your research fits within this context.

Methodology

- Participants: Describe your study's participants, including their characteristics and how you recruited them.

- Quasi-Experimental Design: Explain your chosen design in detail, including the independent and dependent variables, procedures, and any control measures taken.

- Data Collection: Detail the data collection methods, instruments used, and any pre-test or post-test measures.

- Data Analysis: Describe the statistical techniques employed, including any control for confounding variables.

Results

- Presentation of Findings: Present your results clearly, using tables, graphs, and descriptive statistics where appropriate. Include p-values and effect sizes, if applicable.

- Interpretation of Results: Discuss the implications of your findings and how they relate to your research questions or hypotheses.

Discussion

- Interpretation and Implications: Analyze your results in the context of existing literature and theories. Discuss the practical implications of your findings.

- Limitations: Address the limitations of your study, including potential biases or threats to internal validity.

- Future Research: Suggest areas for future research and how your study contributes to the field.

Ethical Considerations in Reporting

Ethical reporting is paramount in quasi-experimental research. Ensure that you adhere to ethical standards, including:

- Informed Consent: Clearly state that informed consent was obtained from all participants, and describe the informed consent process.

- Protection of Participants: Explain how you protected the rights and well-being of your participants throughout the study.

- Confidentiality: Detail how you maintained privacy and anonymity, especially when presenting individual data.

- Disclosure of Conflicts of Interest: Declare any potential conflicts of interest that could influence the interpretation of your findings.

Common Pitfalls to Avoid

When reporting your quasi-experimental research, watch out for common pitfalls that can diminish the quality and impact of your work:

- Overgeneralization: Be cautious not to overgeneralize your findings. Clearly state the limits of your study and the populations to which your results can be applied.

- Misinterpretation of Causality: Clearly articulate the limitations in inferring causality in quasi-experimental research. Avoid making strong causal claims unless supported by solid evidence.

- Ignoring Ethical Concerns: Ethical considerations are paramount. Failing to report on informed consent, ethical oversight, and participant protection can undermine the credibility of your study.

Guidelines for Transparent Reporting

To enhance the transparency and reproducibility of your quasi-experimental research, consider adhering to established reporting guidelines, such as:

- CONSORT Statement: CONSORT is designed for randomized controlled trials, so it applies mainly if part of your study uses random assignment; its items on describing interventions can still serve as a useful checklist.

- STROBE Statement: For observational studies, the STROBE statement provides guidance on reporting essential elements.

- PRISMA Statement: If your research involves systematic reviews or meta-analyses, adhere to the PRISMA guidelines.

- Transparent Reporting of Evaluations with Non-Randomized Designs (TREND): TREND guidelines offer specific recommendations for transparently reporting non-randomized designs, including quasi-experimental research.

By following these reporting guidelines and maintaining the highest ethical standards, you can contribute to the advancement of knowledge in your field and ensure the credibility and impact of your quasi-experimental research findings.

Quasi-Experimental Design Challenges

Conducting a quasi-experimental study can be fraught with challenges that may impact the validity and reliability of your findings. We'll take a look at some common challenges and strategies for addressing them effectively.

Selection Bias

Challenge: Selection bias occurs when non-randomized groups differ systematically in ways that affect the study's outcome. This bias can undermine the validity of your research, as it implies that the groups are not equivalent at the outset of the study.

Addressing Selection Bias:

- Matching: Employ matching techniques to create comparable treatment and control groups. Match participants based on relevant characteristics, such as age, gender, or prior performance, to balance the groups.

- Statistical Controls: Use statistical controls to account for differences between groups. Include covariates in your analysis to adjust for potential biases.

- Sensitivity Analysis: Conduct sensitivity analyses to assess how vulnerable your results are to selection bias. Explore different scenarios to understand the impact of potential bias on your conclusions.
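A simple way to check whether matching or statistical controls have reduced selection bias is to compare covariate balance with the standardized mean difference (SMD): the difference in group means divided by the square root of the average of the two group variances. An absolute SMD below about 0.1 is a commonly cited rule of thumb for adequate balance. The ages in the Python sketch below are hypothetical.

```python
import math
import statistics


def standardized_mean_difference(treated, comparison):
    """SMD for one covariate: mean difference scaled by the pooled SD of the two groups."""
    pooled_sd = math.sqrt((statistics.variance(treated) + statistics.variance(comparison)) / 2)
    return (statistics.mean(treated) - statistics.mean(comparison)) / pooled_sd


age_treated = [34, 41, 29, 45, 38]     # hypothetical participant ages
age_comparison = [52, 47, 39, 58, 44]

smd = standardized_mean_difference(age_treated, age_comparison)
print(f"SMD = {smd:.2f}; balanced: {abs(smd) < 0.1}")  # SMD = -1.56; balanced: False
```

A value this large indicates the groups differ substantially in age, so matching on age or adjusting for it would be needed before comparing outcomes.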

History Effects

Challenge: History effects refer to external events or changes over time that influence the study's results. These external factors can confound your research by introducing variables you did not account for.

Addressing History Effects:

- Collect Historical Data: Gather extensive historical data to understand trends and patterns that might affect your study. By having a comprehensive historical context, you can better identify and account for historical effects.

- Control Groups: Include control groups whenever possible. By comparing the treatment group's results to those of a control group, you can account for external influences that affect both groups equally.

- Time Series Analysis: If applicable, use time series analysis to detect and account for temporal trends. This method helps differentiate between the effects of the independent variable and external events.

Maturation Effects

Challenge: Maturation effects occur when participants naturally change or develop throughout the study, independent of the intervention. These changes can confound your results, making it challenging to attribute observed effects solely to the independent variable.

Addressing Maturation Effects:

- Randomization: If possible, use randomization to distribute maturation effects evenly across treatment and control groups. Random assignment minimizes the impact of maturation as a confounding variable.

- Matched Pairs: If randomization is not feasible, employ matched pairs or statistical controls to ensure that both groups experience similar maturation effects.

- Shorter Time Frames: Limit the duration of your study to reduce the likelihood of significant maturation effects. Shorter studies are less susceptible to long-term maturation.

Regression to the Mean

Challenge: Regression to the mean is the tendency for extreme scores on a variable to move closer to the mean upon retesting. This can create the illusion of an intervention's effectiveness when, in reality, it's a natural statistical phenomenon.

Addressing Regression to the Mean:

- Use Control Groups: Include control groups in your study to provide a baseline for comparison. This helps differentiate genuine intervention effects from regression to the mean.

- Multiple Data Points: Collect several measurements, including more than one before the intervention. If extreme scores already drift back toward the mean across the pre-intervention measurements, a later improvement may reflect regression to the mean rather than a true intervention effect.

- Statistical Analysis: Employ statistical techniques that account for regression to the mean when analyzing your data. Techniques like analysis of covariance (ANCOVA) can help control for baseline differences.
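A short simulation shows why regression to the mean can mimic an intervention effect. The Python sketch below assumes hypothetical parameters (true scores with mean 100 and SD 10, plus independent measurement error with SD 10 on each test), selects the top 10% of scorers on a first test, and retests them with no intervention at all. Their average falls back toward the population mean on the second test, which is the pattern an untreated comparison group selected the same way would reveal.

```python
import random
import statistics

random.seed(42)  # fixed seed so the simulation is reproducible
N = 10_000

true_score = [random.gauss(100, 10) for _ in range(N)]
test1 = [t + random.gauss(0, 10) for t in true_score]  # observed = true score + error
test2 = [t + random.gauss(0, 10) for t in true_score]  # fresh error, no intervention

# Select the top 10% on the first test, as a program targeting extreme scorers might.
threshold = sorted(test1)[int(0.9 * N)]
selected = [i for i in range(N) if test1[i] >= threshold]

mean_test1 = statistics.mean(test1[i] for i in selected)
mean_test2 = statistics.mean(test2[i] for i in selected)
print(f"Selected group: test 1 = {mean_test1:.1f}, test 2 = {mean_test2:.1f}")
```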

Attrition and Mortality

Challenge: Attrition (called "experimental mortality" in older methods literature) is the loss of participants over the course of your study, whether they drop out, cannot be contacted, or die. High attrition rates, especially when they differ between groups, can introduce biases and affect the representativeness of your sample.

Addressing Attrition and Mortality:

- Careful Participant Selection: Select participants who are likely to remain engaged throughout the study. Consider factors that may lead to attrition, such as participant motivation and commitment.

- Incentives: Provide incentives or compensation to participants to encourage their continued participation.

- Follow-Up Strategies: Implement effective follow-up strategies to reduce attrition. Regular communication and reminders can help keep participants engaged.

- Sensitivity Analysis: Conduct sensitivity analyses to assess the impact of attrition and mortality on your results. Compare the characteristics of participants who dropped out with those who completed the study.
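As a first, descriptive step toward such a sensitivity analysis, the Python sketch below computes the attrition rate in each group and compares the baseline scores of participants who dropped out with those who completed the study. The records are hypothetical. A large gap in either comparison suggests attrition may bias the results and warrants formal sensitivity analyses, such as re-estimating effects under different assumptions about the missing outcomes.

```python
from statistics import mean

# Hypothetical records: (group, baseline_score, completed_study)
records = [
    ("treatment", 62, True), ("treatment", 55, False),
    ("treatment", 70, True), ("treatment", 48, False),
    ("comparison", 60, True), ("comparison", 58, True),
    ("comparison", 65, True), ("comparison", 52, False),
]


def attrition_rate(records, group):
    """Share of a group's participants who did not complete the study."""
    rows = [r for r in records if r[0] == group]
    return sum(1 for _, _, completed in rows if not completed) / len(rows)


for group in ("treatment", "comparison"):
    print(group, attrition_rate(records, group))

dropout_baseline = mean(score for _, score, completed in records if not completed)
completer_baseline = mean(score for _, score, completed in records if completed)
print(f"baseline: dropouts {dropout_baseline:.1f} vs completers {completer_baseline:.1f}")
```

In this hypothetical data the treatment group loses twice as many participants, and dropouts start with lower scores, so a comparison of completers alone may overstate how well the treatment group performs.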

Testing Effects

Challenge: Testing effects occur when the mere act of testing or assessing participants affects their subsequent performance. This phenomenon can lead to changes in the dependent variable that are unrelated to the independent variable.

Addressing Testing Effects:

- Counterbalance Testing: If you use more than one test or alternate test forms, counterbalance their order so that each order is used equally often in the treatment and control groups. This distributes testing effects evenly across groups.

- Control Groups: Include control groups subjected to the same testing or assessment procedures as the treatment group. By comparing the two groups, you can determine whether testing effects have influenced the results.

- Minimize Testing Frequency: Limit the frequency of testing or assessments to reduce the likelihood of testing effects. Conducting fewer assessments can mitigate the impact of repeated testing on participants.
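Counterbalancing can be sketched as follows, assuming two hypothetical alternate test forms and participant IDs invented for illustration. The function cycles through every possible order of forms separately within each group, so each order appears equally often in the treatment and control groups.

```python
from collections import Counter
from itertools import cycle, permutations


def counterbalanced_schedule(groups, forms):
    """Assign each participant an order of test forms, rotating through all
    possible orders separately within each group so every order is used
    (as nearly as possible) equally often in each group."""
    all_orders = list(permutations(forms))
    schedule = {}
    for group_name, members in groups.items():
        rotation = cycle(all_orders)
        for person in members:
            schedule[person] = (group_name, next(rotation))
    return schedule


# Hypothetical participants and test forms.
groups = {
    "treatment": ["t1", "t2", "t3", "t4"],
    "control": ["c1", "c2", "c3", "c4"],
}
schedule = counterbalanced_schedule(groups, forms=("Form A", "Form B"))
order_counts = Counter(schedule.values())
for (group_name, order), count in sorted(order_counts.items()):
    print(group_name, " -> ".join(order), count)
```

Full permutation counterbalancing grows factorially with the number of forms; with many forms, a Latin square is the usual lighter-weight alternative.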

By proactively addressing these common challenges, you can enhance the validity and reliability of your quasi-experimental study and make your findings more robust and trustworthy.

Conclusion for Quasi-Experimental Design

Quasi-experimental design is a powerful tool for investigating cause-and-effect relationships in real-world situations where strict control is not always possible. By understanding its key concepts, the main types of design, and how to address common challenges, you can conduct robust research and contribute valuable insights to your field. Quasi-experimental design bridges the gap between controlled experiments and purely observational studies, which makes it an essential approach in fields ranging from business and market research to public policy. Whether you're a researcher, student, or decision-maker, understanding quasi-experimental design helps you make better-informed choices.

How to Supercharge Quasi-Experimental Design with Real-Time Insights?

Introducing Appinio, the real-time market research platform that transforms the world of quasi-experimental design. Imagine having the power to conduct your own market research in minutes and obtain actionable insights that fuel your data-driven decisions. Appinio takes care of the research and tech complexities, freeing you to focus on what truly matters for your business.

Here's why Appinio stands out:

- Lightning-Fast Insights: From formulating questions to uncovering insights, Appinio delivers results in minutes, ensuring you get the answers you need when you need them.

- No Research Degree Required: Our intuitive platform is designed for everyone, eliminating the need for a PhD in research. Anyone can dive in and start harnessing the power of real-time consumer insights.

- Global Reach, Local Expertise: With access to over 90 countries and the ability to define precise target groups based on 1200+ characteristics, you can conduct quasi-experimental research on a global scale while maintaining a local touch.



Quasi-experimental Research: What It Is, Types & Examples

Much like a true experiment, quasi-experimental research tries to demonstrate a cause-and-effect link between an independent and a dependent variable. Unlike a true experiment, however, a quasi-experiment does not rely on random assignment: subjects are sorted into groups based on non-random criteria.

What is Quasi-Experimental Research?

“Quasi” means “resembling.” Quasi-experimental research therefore resembles experimental research but is not a true experiment: although the independent variable is manipulated, individuals are not randomly assigned to conditions or orders of conditions.

Because the independent variable is manipulated before the dependent variable is measured, quasi-experimental research avoids the directionality problem. However, because individuals are not randomly assigned, there are likely to be other differences between conditions, so confounding variables remain a problem.

As a result, in terms of internal validity, quasi-experiments fall somewhere between correlational research and true experiments.

The key component of a true experiment is random assignment to groups. This means that each participant has an equal chance of being assigned to the experimental group, which receives the manipulation, or to the control group, which does not.
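For contrast with the pre-existing groups used in quasi-experiments, simple random assignment can be sketched in a few lines of Python. The participant labels and the seed are hypothetical; the seed only makes the shuffle reproducible. In a quasi-experiment, this step is replaced by whatever grouping already exists.

```python
import random


def randomly_assign(participants, seed=None):
    """Shuffle participants and split them in half, so each person has an
    equal chance of landing in the experimental or the control group
    (with an odd count, the control group gets the extra person)."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical participant labels.
patients = [f"patient_{i}" for i in range(1, 11)]
experimental, control = randomly_assign(patients, seed=7)
print("Experimental:", experimental)
print("Control:", control)
```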

Simply put, a quasi-experiment is not a true experiment, because it lacks randomly assigned groups. Why is random assignment so crucial, given that it is the only distinction between quasi-experimental and true experimental research?

Let’s use an example to illustrate the point. Suppose we want to discover how a new psychological therapy affects patients with depression. In a true experiment, you would randomly divide the patients in a psychiatric ward into two groups, with half receiving the new psychotherapy and the other half receiving standard depression treatment.

The physicians would then compare the outcomes of the new therapy with those of standard treatment to see whether it is more effective. Doctors, however, may object to this design because they consider it unethical to withhold a potentially better treatment from one group of patients.

A quasi-experimental study is useful in this case. Instead of assigning patients at random, you identify pre-existing groups of patients treated by different therapists in the hospital. Some therapists will likely be eager to try the new therapy, while others will prefer to stick with standard treatment.

These pre-existing groups can be used to compare the symptom development of individuals who received the novel therapy with those who received the normal course of treatment, even though the groups weren’t chosen at random.

If you can account for any other substantial differences between the groups, you can be reasonably confident that differences in outcomes are attributable to the treatment rather than to extraneous variables.

As mentioned before, quasi-experimental research involves manipulating an independent variable without randomly assigning people to conditions or sequences of conditions. Non-equivalent groups designs, pretest-posttest designs, and regression discontinuity designs are a few of the essential types.

What are quasi-experimental research designs?

Quasi-experimental research designs are a type of research design that is similar to experimental designs but doesn’t give full control over the independent variable(s) like true experimental designs do.

In a quasi-experimental design, the researcher manipulates or observes an independent variable, but participants are not assigned to groups at random. Instead, they are grouped by characteristics they already share, such as age, gender, or how many times they have been exposed to a certain stimulus.

Because assignment is not random, it is harder to draw conclusions about cause and effect than in a true experiment. However, quasi-experimental designs are still useful when randomization is not possible or ethical.

The true experimental design may be impossible to accomplish or just too expensive, especially for researchers with few resources. Quasi-experimental designs enable you to investigate an issue by utilizing data that has already been paid for or gathered by others (often the government).

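Because they often study real-world interventions rather than artificial laboratory settings, quasi-experiments tend to have higher external validity than most true experiments. And because they allow better control of confounding variables than other non-experimental studies, they have higher internal validity than those studies, though still lower than true experiments.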

Is quasi-experimental research quantitative or qualitative?

Quasi-experimental research is a quantitative research method. It involves numerical data collection and statistical analysis. Quasi-experimental research compares groups with different circumstances or treatments to find cause-and-effect links.

It draws statistical conclusions from quantitative data. Qualitative data can enhance quasi-experimental research by revealing participants’ experiences and opinions, but quantitative data is the method’s foundation.

Quasi-experimental research types

There are many different types of quasi-experimental designs. Three of the most popular are described below: the non-equivalent groups design, regression discontinuity, and natural experiments.

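Non-equivalent Groups Design

In a non-equivalent groups design, the researcher compares a treatment group with a comparison group that was not formed by random assignment, such as two existing classes or two hospital wards.

Regression Discontinuity

In a regression discontinuity design, participants receive the treatment depending on whether they score above or below a cutoff on some measure, and outcomes are compared for people just on either side of that cutoff.

Natural Experiments

In a natural experiment, assignment to treatment is determined by an external process rather than by the researcher. In one example, a program could not afford to cover everyone who qualified, so a random lottery was used to distribute slots.

Researchers were able to investigate the program’s impact by using the enrolled people as a treatment group and those who qualified but were not selected in the lottery as a control group.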

How QuestionPro helps in quasi-experimental research?

QuestionPro can be a useful tool in quasi-experimental research because it includes features that can assist you in designing and analyzing your research study. Here are some ways in which QuestionPro can help in quasi-experimental research:

- Design surveys

- Randomize participants

- Collect data over time

- Analyze data

- Collaborate with your team

With QuestionPro, you have access to the most mature market research platform and tool for collecting and analyzing the insights that matter most. By leveraging InsightsHub, the unified hub for data management, you can organize, explore, search, and discover your research data in one repository.


Quasi-Experimental Research Design – Types, Methods


Quasi-experimental research design is a widely used methodology in social sciences, education, healthcare, and other fields to evaluate the impact of an intervention or treatment. Unlike true experimental designs, quasi-experiments lack random assignment, which can limit control over external factors but still offer valuable insights into cause-and-effect relationships.

This article delves into the concept of quasi-experimental research, explores its types, methods, and applications, and discusses its strengths and limitations.

Quasi-Experimental Design

Quasi-experimental research design is a type of empirical study used to estimate the causal relationship between an intervention and its outcomes. It resembles an experimental design but does not involve random assignment of participants to groups. Instead, groups are pre-existing or assigned based on non-random criteria, such as location, demographic characteristics, or convenience.

For example, a school might implement a new teaching method in one class while another class continues with the traditional approach. Researchers can then compare the outcomes to assess the effectiveness of the new method.

Key Characteristics of Quasi-Experimental Research

- No Random Assignment: Participants are not randomly assigned to experimental or control groups.

- Comparison Groups: Often involves comparing a treatment group to a non-equivalent control group.

- Real-World Settings: Frequently conducted in natural environments, such as schools, hospitals, or workplaces.

- Causal Inference: Aims to identify causal relationships, though less robustly than true experiments.

Purpose of Quasi-Experimental Research

- To evaluate interventions or treatments when randomization is impractical or unethical.