
8.2 Multiple Independent Variables

Learning Objectives

- Explain why researchers often include multiple independent variables in their studies.

- Define factorial design, and use a factorial design table to represent and interpret simple factorial designs.

- Distinguish between main effects and interactions, and recognize and give examples of each.

- Sketch and interpret bar graphs and line graphs showing the results of studies with simple factorial designs.

Just as it is common for studies in psychology to include multiple dependent variables, it is also common for them to include multiple independent variables. Schnall and her colleagues studied the effect of both disgust and private body consciousness in the same study. Researchers’ inclusion of multiple independent variables in one experiment is further illustrated by the following actual titles from various professional journals:

- The Effects of Temporal Delay and Orientation on Haptic Object Recognition

- Opening Closed Minds: The Combined Effects of Intergroup Contact and Need for Closure on Prejudice

- Effects of Expectancies and Coping on Pain-Induced Intentions to Smoke

- The Effect of Age and Divided Attention on Spontaneous Recognition

- The Effects of Reduced Food Size and Package Size on the Consumption Behavior of Restrained and Unrestrained Eaters

Just as including multiple dependent variables in the same experiment allows one to answer more research questions, so too does including multiple independent variables in the same experiment. For example, instead of conducting one study on the effect of disgust on moral judgment and another on the effect of private body consciousness on moral judgment, Schnall and colleagues were able to conduct one study that addressed both questions. But including multiple independent variables also allows the researcher to answer questions about whether the effect of one independent variable depends on the level of another. This is referred to as an interaction between the independent variables. Schnall and her colleagues, for example, observed an interaction between disgust and private body consciousness because the effect of disgust depended on whether participants were high or low in private body consciousness. As we will see, interactions are often among the most interesting results in psychological research.

Factorial Designs

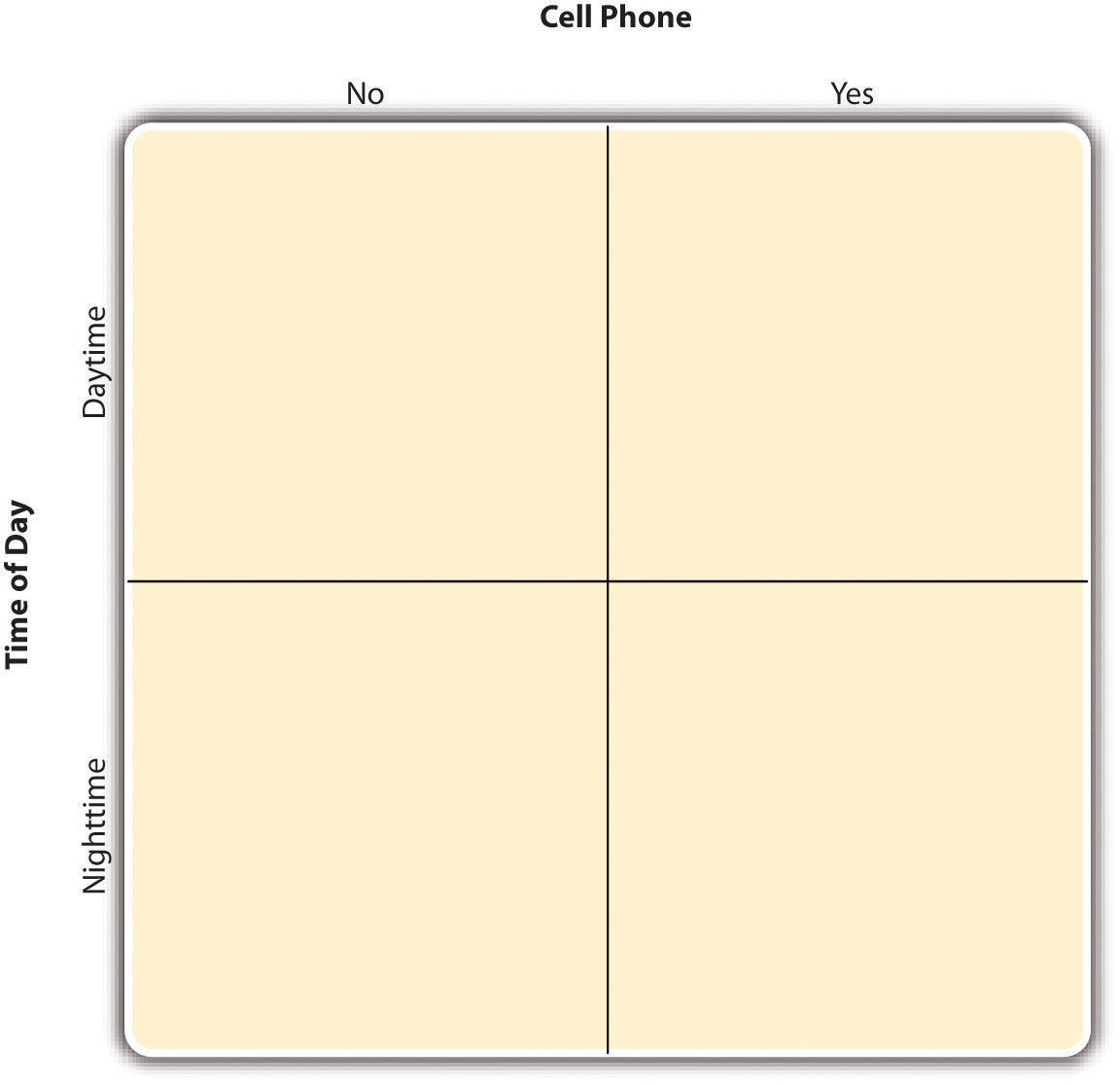

By far the most common approach to including multiple independent variables in an experiment is the factorial design. In a factorial design, each level of one independent variable (which can also be called a factor) is combined with each level of the others to produce all possible combinations. Each combination, then, becomes a condition in the experiment. Imagine, for example, an experiment on the effect of cell phone use (yes vs. no) and time of day (day vs. night) on driving ability. This is shown in the factorial design table in Figure 8.2 “Factorial Design Table Representing a 2 × 2 Factorial Design”. The columns of the table represent cell phone use, and the rows represent time of day. The four cells of the table represent the four possible combinations or conditions: using a cell phone during the day, not using a cell phone during the day, using a cell phone at night, and not using a cell phone at night. This particular design is a 2 × 2 (read “two-by-two”) factorial design because it combines two variables, each of which has two levels. If one of the independent variables had a third level (e.g., using a handheld cell phone, using a hands-free cell phone, and not using a cell phone), then it would be a 3 × 2 factorial design, and there would be six distinct conditions. Notice that the number of possible conditions is the product of the numbers of levels. A 2 × 2 factorial design has four conditions, a 3 × 2 factorial design has six conditions, a 4 × 5 factorial design would have 20 conditions, and so on.
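The rule that the number of conditions is the product of the numbers of levels can be checked by listing the conditions directly. The following is a minimal sketch in Python; the `factors` dictionary and the `conditions` helper are hypothetical names introduced here for illustration, using the cell phone and time-of-day example from the text.

```python
from itertools import product

# Hypothetical factors and levels, taken from the driving example in the text.
factors = {
    "cell_phone": ["yes", "no"],
    "time_of_day": ["day", "night"],
}


def conditions(factors):
    """Return every combination of one level per factor, one dict per condition."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]


design = conditions(factors)
for condition in design:
    print(condition)
print(len(design), "conditions")
```

Replacing the cell phone levels with three levels (handheld, hands-free, none) and calling `conditions` again would list the six conditions of the 3 × 2 design.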

Figure 8.2 Factorial Design Table Representing a 2 × 2 Factorial Design

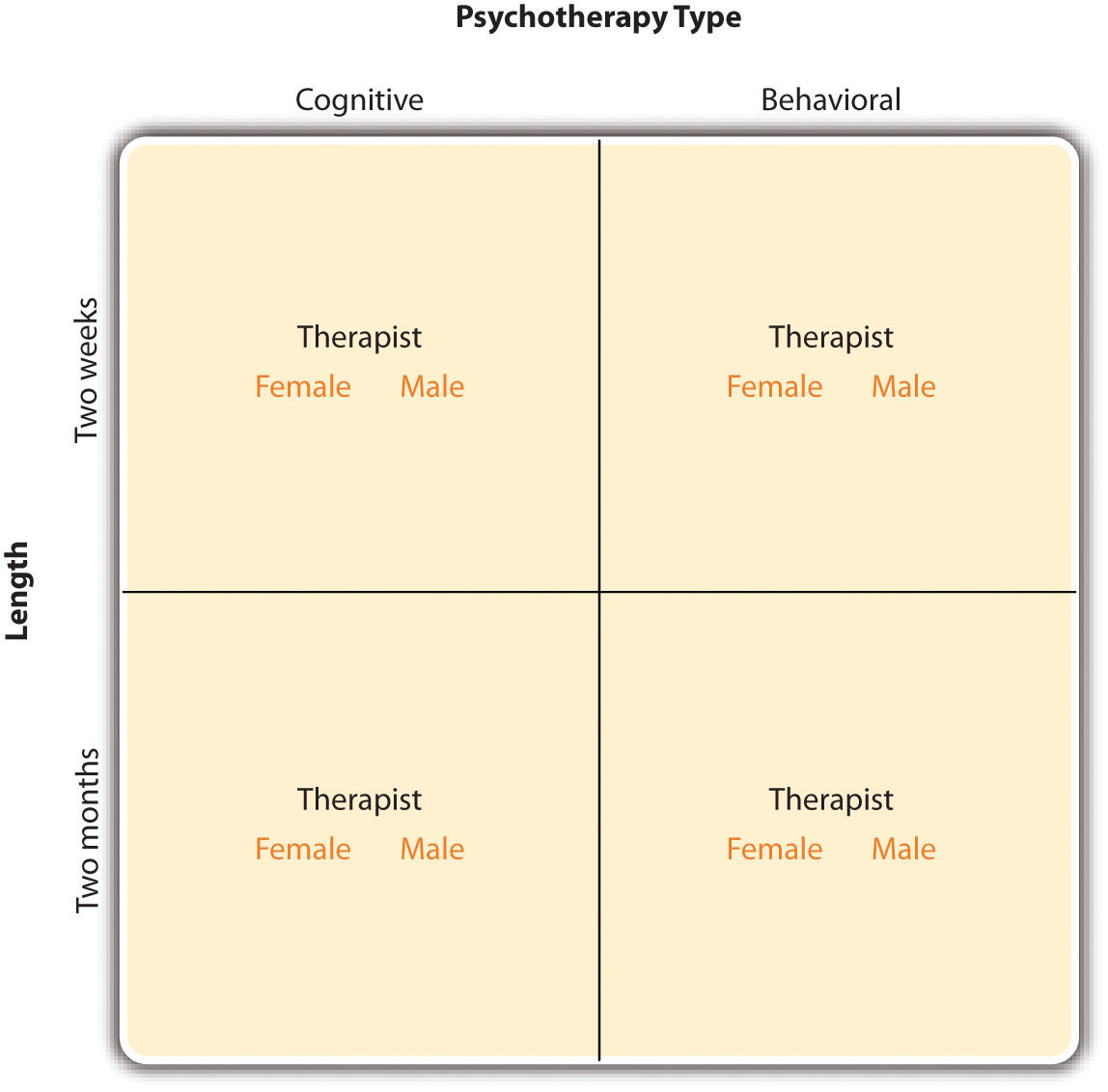

In principle, factorial designs can include any number of independent variables with any number of levels. For example, an experiment could include the type of psychotherapy (cognitive vs. behavioral), the length of the psychotherapy (2 weeks vs. 2 months), and the sex of the psychotherapist (female vs. male). This would be a 2 × 2 × 2 factorial design and would have eight conditions. Figure 8.3 “Factorial Design Table Representing a 2 × 2 × 2 Factorial Design” shows one way to represent this design. In practice, it is unusual for there to be more than three independent variables with more than two or three levels each because the number of conditions can quickly become unmanageable. For example, adding a fourth independent variable with three levels (e.g., therapist experience: low vs. medium vs. high) to the current example would make it a 2 × 2 × 2 × 3 factorial design with 24 distinct conditions. In the rest of this section, we will focus on designs with two independent variables. The general principles discussed here extend in a straightforward way to more complex factorial designs.

Figure 8.3 Factorial Design Table Representing a 2 × 2 × 2 Factorial Design

Assigning Participants to Conditions

Recall that in a simple between-subjects design, each participant is tested in only one condition. In a simple within-subjects design, each participant is tested in all conditions. In a factorial experiment, the decision to take the between-subjects or within-subjects approach must be made separately for each independent variable. In a between-subjects factorial design, all of the independent variables are manipulated between subjects. For example, all participants could be tested either while using a cell phone or while not using a cell phone and either during the day or during the night. This would mean that each participant was tested in one and only one condition. In a within-subjects factorial design, all of the independent variables are manipulated within subjects. All participants could be tested both while using a cell phone and while not using a cell phone and both during the day and during the night. This would mean that each participant was tested in all conditions. The advantages and disadvantages of these two approaches are the same as those discussed in Chapter 6 “Experimental Research”. The between-subjects design is conceptually simpler, avoids carryover effects, and minimizes the time and effort of each participant. The within-subjects design is more efficient for the researcher and controls extraneous participant variables.

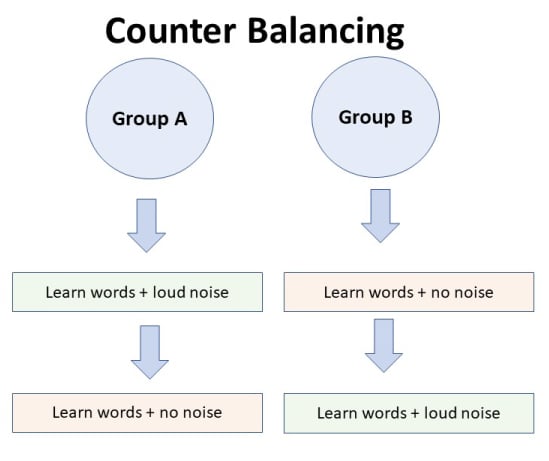

It is also possible to manipulate one independent variable between subjects and another within subjects. This is called a mixed factorial design. For example, a researcher might choose to treat cell phone use as a within-subjects factor by testing the same participants both while using a cell phone and while not using a cell phone (while counterbalancing the order of these two conditions). But the researcher might choose to treat time of day as a between-subjects factor by testing each participant either during the day or during the night (perhaps because this requires participants to come in for testing only once). Thus each participant in this mixed design would be tested in two of the four conditions.

Regardless of whether the design is between subjects, within subjects, or mixed, the actual assignment of participants to conditions or orders of conditions is typically done randomly.
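One way random assignment might be carried out for a between-subjects 2 × 2 design is sketched below in Python. The function name `assign_between_subjects`, the participant labels, and the choice to shuffle and then deal participants into conditions in turn (which keeps group sizes equal) are assumptions made for illustration, not a procedure described in the text.

```python
import random
from itertools import product


def assign_between_subjects(participants, conditions, seed=None):
    """Randomly assign each participant to exactly one condition.

    Participants are shuffled and then dealt into the conditions in turn,
    so group sizes differ by at most one.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    return {p: conditions[i % len(conditions)] for i, p in enumerate(shuffled)}


# The four conditions of the hypothetical driving experiment.
conditions = list(product(["phone", "no phone"], ["day", "night"]))
# Twenty hypothetical participant labels, P01 to P20.
participants = [f"P{i:02d}" for i in range(1, 21)]

assignment = assign_between_subjects(participants, conditions, seed=1)
for participant in participants[:3]:
    print(participant, assignment[participant])
```

In a mixed design, the same idea would apply only to the between-subjects factor, while the order of the within-subjects conditions would be randomized or counterbalanced separately.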

Nonmanipulated Independent Variables

In many factorial designs, one of the independent variables is a nonmanipulated independent variable. The researcher measures it but does not manipulate it. The study by Schnall and colleagues is a good example. One independent variable was disgust, which the researchers manipulated by testing participants in a clean room or a messy room. The other was private body consciousness, which the researchers simply measured. Another example is a study by Halle Brown and colleagues in which participants were exposed to several words that they were later asked to recall (Brown, Kosslyn, Delamater, Fama, & Barsky, 1999). The manipulated independent variable was the type of word. Some were negative health-related words (e.g., tumor, coronary), and others were not health related (e.g., election, geometry). The nonmanipulated independent variable was whether participants were high or low in hypochondriasis (excessive concern with ordinary bodily symptoms). The result of this study was that the participants high in hypochondriasis were better than those low in hypochondriasis at recalling the health-related words, but they were no better at recalling the non-health-related words.

Such studies are extremely common, and there are several points worth making about them. First, nonmanipulated independent variables are usually participant variables (private body consciousness, hypochondriasis, self-esteem, and so on), and as such they are by definition between-subjects factors. For example, people are either low in hypochondriasis or high in hypochondriasis; they cannot be tested in both of these conditions. Second, such studies are generally considered to be experiments as long as at least one independent variable is manipulated, regardless of how many nonmanipulated independent variables are included. Third, it is important to remember that causal conclusions can only be drawn about the manipulated independent variable. For example, Schnall and her colleagues were justified in concluding that disgust affected the harshness of their participants’ moral judgments because they manipulated that variable and randomly assigned participants to the clean or messy room. But they would not have been justified in concluding that participants’ private body consciousness affected the harshness of their participants’ moral judgments because they did not manipulate that variable. It could be, for example, that having a strict moral code and a heightened awareness of one’s body are both caused by some third variable (e.g., neuroticism). Thus it is important to be aware of which variables in a study are manipulated and which are not.

Graphing the Results of Factorial Experiments

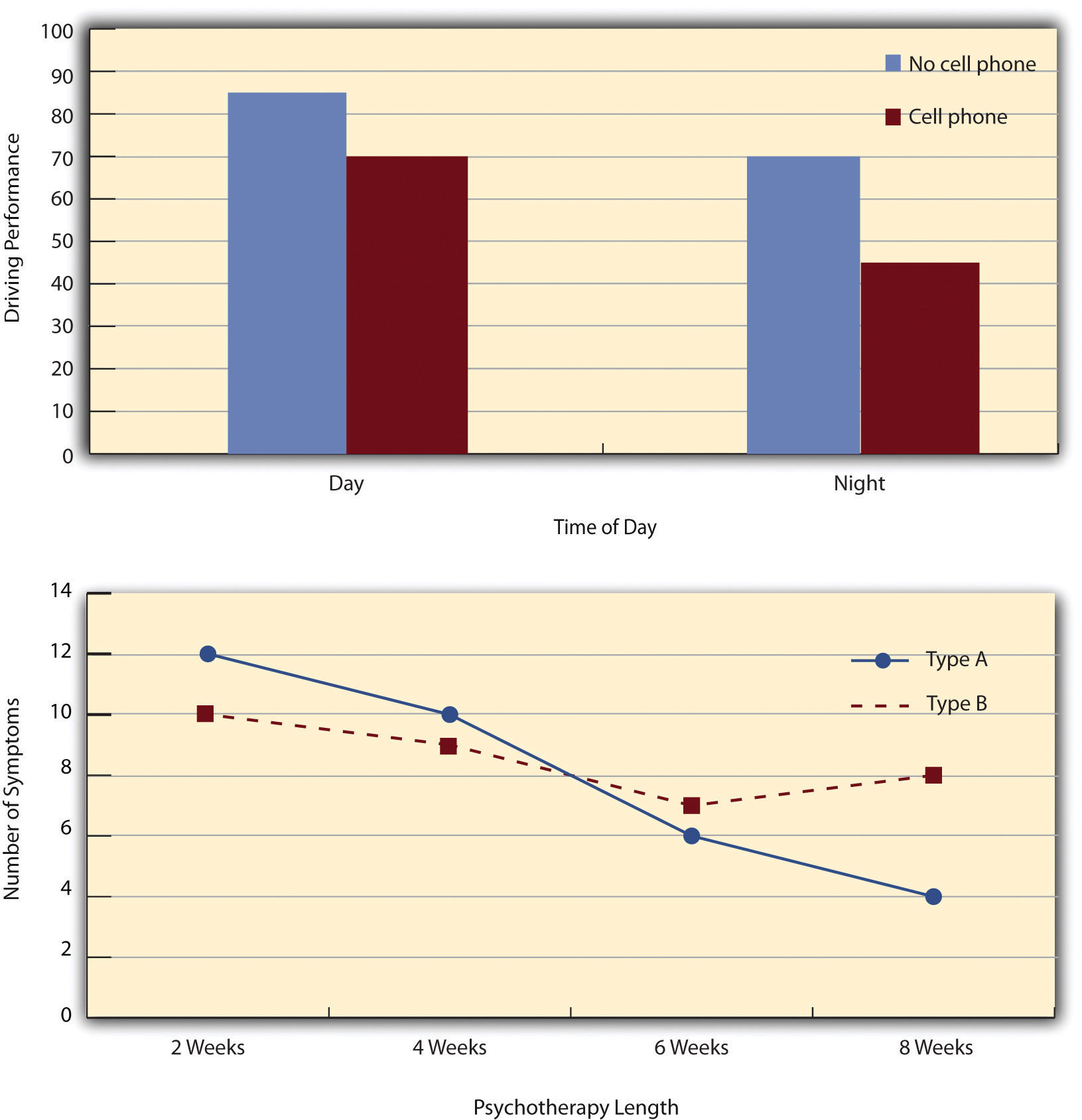

The results of factorial experiments with two independent variables can be graphed by representing one independent variable on the x-axis and representing the other by using different kinds of bars or lines. (The y-axis is always reserved for the dependent variable.) Figure 8.4 “Two Ways to Plot the Results of a Factorial Experiment With Two Independent Variables” shows results for two hypothetical factorial experiments. The top panel shows the results of a 2 × 2 design. Time of day (day vs. night) is represented by different locations on the x-axis, and cell phone use (no vs. yes) is represented by different-colored bars. (It would also be possible to represent cell phone use on the x-axis and time of day as different-colored bars. The choice comes down to which way seems to communicate the results most clearly.) The bottom panel of Figure 8.4 “Two Ways to Plot the Results of a Factorial Experiment With Two Independent Variables” shows the results of a 4 × 2 design in which one of the variables is quantitative. This variable, psychotherapy length, is represented along the x-axis, and the other variable (psychotherapy type) is represented by differently formatted lines. This is a line graph rather than a bar graph because the variable on the x-axis is quantitative with a small number of distinct levels.

Figure 8.4 Two Ways to Plot the Results of a Factorial Experiment With Two Independent Variables

Main Effects and Interactions

In factorial designs, there are two kinds of results that are of interest: main effects and interaction effects (which are also called just “interactions”). A main effect is the statistical relationship between one independent variable and a dependent variable, averaging across the levels of the other independent variable. Thus there is one main effect to consider for each independent variable in the study. The top panel of Figure 8.4 “Two Ways to Plot the Results of a Factorial Experiment With Two Independent Variables” shows a main effect of cell phone use because driving performance was better, on average, when participants were not using cell phones than when they were. The blue bars are, on average, higher than the red bars. It also shows a main effect of time of day because driving performance was better during the day than during the night, both when participants were using cell phones and when they were not. Main effects are independent of each other in the sense that whether or not there is a main effect of one independent variable says nothing about whether or not there is a main effect of the other. The bottom panel of Figure 8.4 “Two Ways to Plot the Results of a Factorial Experiment With Two Independent Variables”, for example, shows a clear main effect of psychotherapy length. The longer the psychotherapy, the better it worked. But it also shows no overall advantage of one type of psychotherapy over the other.
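The idea of averaging across the levels of the other independent variable can be made concrete with a small calculation. The sketch below uses invented cell means for the driving example (they are not data from any study) and a hypothetical helper, `marginal_means`. It assumes equal numbers of participants per cell, so a simple unweighted average of the cell means is appropriate.

```python
# Hypothetical cell means (driving performance scores), invented for illustration.
# Keys are (cell phone use, time of day).
cell_means = {
    ("no phone", "day"): 80.0,
    ("no phone", "night"): 70.0,
    ("phone", "day"): 60.0,
    ("phone", "night"): 50.0,
}


def marginal_means(cell_means, factor_index):
    """Average the cell means over the other factor for each level of one factor."""
    groups = {}
    for levels, value in cell_means.items():
        groups.setdefault(levels[factor_index], []).append(value)
    return {level: sum(values) / len(values) for level, values in groups.items()}


phone_means = marginal_means(cell_means, 0)  # main effect of cell phone use
time_means = marginal_means(cell_means, 1)   # main effect of time of day
print(phone_means)
print(time_means)
```

With these invented numbers, the marginal mean is higher without a phone than with one, and higher during the day than at night, which is the pattern of two main effects described above. Whether such differences are statistically reliable is a separate question that this sketch does not address.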

There is an interaction effect (or just “interaction”) when the effect of one independent variable depends on the level of another. Although this might seem complicated, you have an intuitive understanding of interactions already. It probably would not surprise you, for example, to hear that the effect of receiving psychotherapy is stronger among people who are highly motivated to change than among people who are not motivated to change. This is an interaction because the effect of one independent variable (whether or not one receives psychotherapy) depends on the level of another (motivation to change). Schnall and her colleagues also demonstrated an interaction because the effect of whether the room was clean or messy on participants’ moral judgments depended on whether the participants were low or high in private body consciousness. If they were high in private body consciousness, then those in the messy room made harsher judgments. If they were low in private body consciousness, then whether the room was clean or messy did not matter.
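An interaction can be expressed numerically as a difference between differences: the effect of one variable at one level of the other, minus its effect at the other level. The sketch below uses invented mean harshness ratings that merely mimic the pattern described for the study by Schnall and colleagues; the numbers, the label "PBC" (private body consciousness), and the helper `simple_effect` are all assumptions for illustration.

```python
# Hypothetical mean harshness ratings, invented to mimic the described pattern.
# Keys are (private body consciousness, room).
means = {
    ("high PBC", "clean"): 4.0,
    ("high PBC", "messy"): 6.0,
    ("low PBC", "clean"): 4.0,
    ("low PBC", "messy"): 4.0,
}


def simple_effect(means, pbc_level, room_from="clean", room_to="messy"):
    """Effect of the room manipulation at one level of the other variable."""
    return means[(pbc_level, room_to)] - means[(pbc_level, room_from)]


effect_high = simple_effect(means, "high PBC")
effect_low = simple_effect(means, "low PBC")

# A nonzero difference between the two simple effects indicates an interaction
# in these sample means.
interaction_contrast = effect_high - effect_low
print(effect_high, effect_low, interaction_contrast)
```

If the two simple effects were equal, the contrast would be zero, meaning the effect of the room did not depend on private body consciousness.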

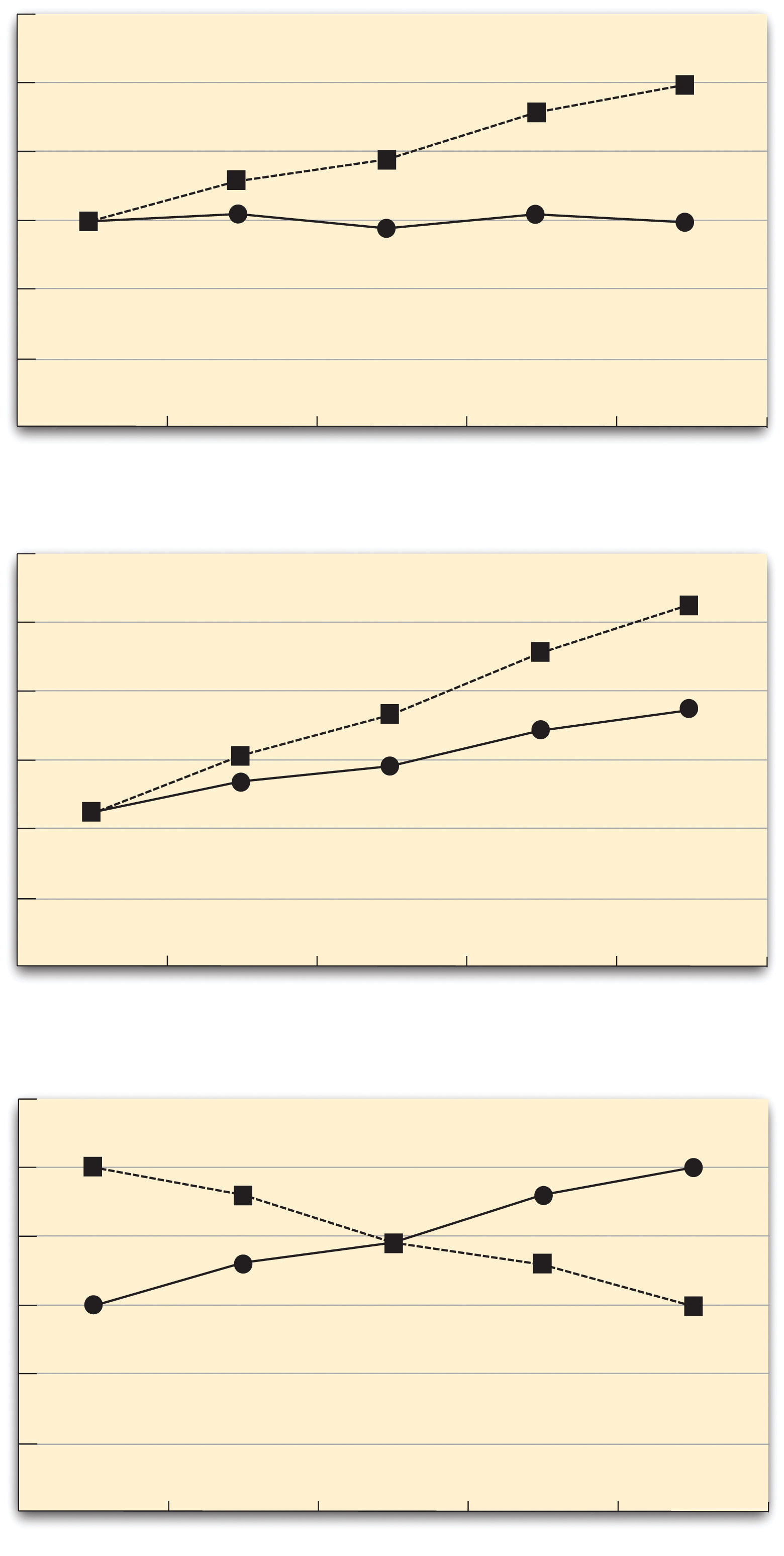

The effect of one independent variable can depend on the level of the other in different ways. This is shown in Figure 8.5 “Bar Graphs Showing Three Types of Interactions”. In the top panel, one independent variable has an effect at one level of the second independent variable but no effect at the other. (This is much like the study of Schnall and her colleagues where there was an effect of disgust for those high in private body consciousness but not for those low in private body consciousness.) In the middle panel, one independent variable has a stronger effect at one level of the second independent variable than at the other level. This is like the hypothetical driving example where there was a stronger effect of using a cell phone at night than during the day. In the bottom panel, one independent variable again has an effect at both levels of the second independent variable, but the effects are in opposite directions. Figure 8.5 “Bar Graphs Showing Three Types of Interactions” shows the strongest form of this kind of interaction, called a crossover interaction. One example of a crossover interaction comes from a study by Kathy Gilliland on the effect of caffeine on the verbal test scores of introverts and extroverts (Gilliland, 1980). Introverts perform better than extroverts when they have not ingested any caffeine. But extroverts perform better than introverts when they have ingested 4 mg of caffeine per kilogram of body weight. Figure 8.6 “Line Graphs Showing Three Types of Interactions” shows examples of these same kinds of interactions when one of the independent variables is quantitative and the results are plotted in a line graph. Note that in a crossover interaction, the two lines literally “cross over” each other.

Figure 8.5 Bar Graphs Showing Three Types of Interactions

In the top panel, one independent variable has an effect at one level of the second independent variable but not at the other. In the middle panel, one independent variable has a stronger effect at one level of the second independent variable than at the other. In the bottom panel, one independent variable has opposite effects at the two levels of the second independent variable.
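The three patterns can be told apart by comparing the effect of one independent variable at each level of the other. The following Python sketch is a hypothetical helper, `describe_interaction`, that labels the pattern in a 2 × 2 table of sample means. It describes the pattern only and is not a significance test; the labels and the `tolerance` parameter are assumptions introduced here.

```python
def describe_interaction(effect_at_level_1, effect_at_level_2, tolerance=0.0):
    """Label the pattern formed by the two simple effects in a 2 x 2 design.

    Each argument is the effect of one independent variable (a difference
    between two means) at one level of the second independent variable.
    Differences no larger than `tolerance` are treated as zero.
    """
    e1, e2 = effect_at_level_1, effect_at_level_2
    if abs(e1 - e2) <= tolerance:
        return "no interaction"
    if abs(e1) <= tolerance or abs(e2) <= tolerance:
        return "effect at one level only"
    if e1 * e2 < 0:
        return "opposite effects"
    return "stronger effect at one level"


print(describe_interaction(2.0, 0.0))   # like the top panel
print(describe_interaction(3.0, 1.0))   # like the middle panel
print(describe_interaction(2.0, -2.0))  # like the bottom panel
```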

Figure 8.6 Line Graphs Showing Three Types of Interactions

In many studies, the primary research question is about an interaction. The study by Brown and her colleagues was inspired by the idea that people with hypochondriasis are especially attentive to any negative health-related information. This led to the hypothesis that people high in hypochondriasis would recall negative health-related words more accurately than people low in hypochondriasis but recall non-health-related words about the same as people low in hypochondriasis. And of course this is exactly what happened in this study.

Key Takeaways

- Researchers often include multiple independent variables in their experiments. The most common approach is the factorial design, in which each level of one independent variable is combined with each level of the others to create all possible conditions.

- In a factorial design, the main effect of an independent variable is its overall effect averaged across the levels of all other independent variables. There is one main effect for each independent variable.

- There is an interaction between two independent variables when the effect of one depends on the level of the other. Some of the most interesting research questions and results in psychology are specifically about interactions.

- Practice: Return to the five article titles presented at the beginning of this section. For each one, identify the independent variables and the dependent variable.

- Practice: Create a factorial design table for an experiment on the effects of room temperature and noise level on performance on the SAT. Be sure to indicate whether each independent variable will be manipulated between subjects or within subjects and explain why.

Brown, H. D., Kosslyn, S. M., Delamater, B., Fama, A., & Barsky, A. J. (1999). Perceptual and memory biases for health-related information in hypochondriacal individuals. Journal of Psychosomatic Research, 47, 67–78.

Gilliland, K. (1980). The interactive effect of introversion-extroversion with caffeine induced arousal on verbal performance. Journal of Research in Personality, 14, 482–492.

Research Methods in Psychology Copyright © 2016 by University of Minnesota is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.

15 Independent and Dependent Variable Examples

Dave Cornell (PhD)

Dr. Cornell has worked in education for more than 20 years. His work has involved designing teacher certification for Trinity College in London and in-service training for state governments in the United States. He has trained kindergarten teachers in 8 countries and helped businessmen and women open baby centers and kindergartens in 3 countries.


Chris Drew (PhD)

This article was peer-reviewed and edited by Chris Drew (PhD). The review process on Helpful Professor involves having a PhD level expert fact check, edit, and contribute to articles. Reviewers ensure all content reflects expert academic consensus and is backed up with reference to academic studies. Dr. Drew has published over 20 academic articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education and holds a PhD in Education from ACU.

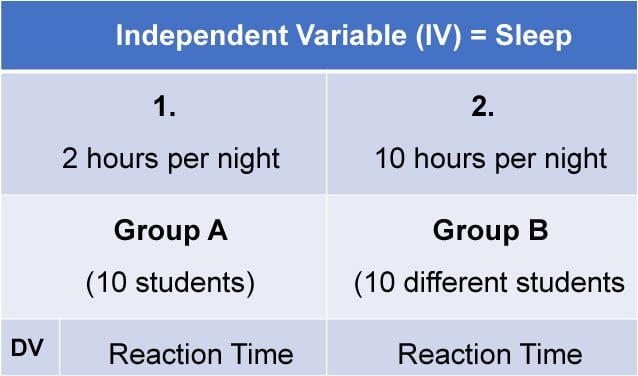

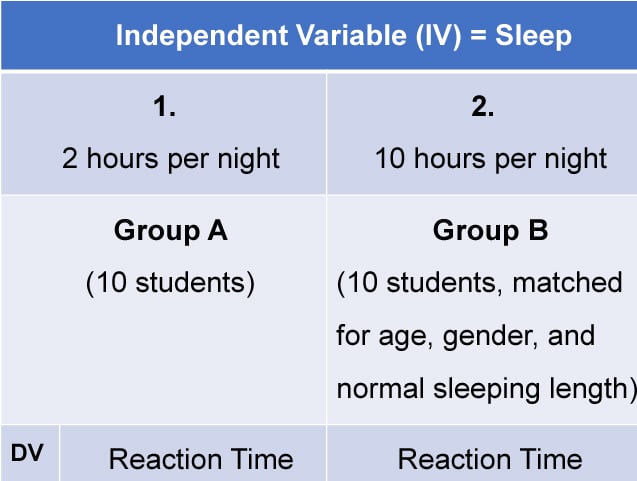

An independent variable (IV) is what is manipulated in a scientific experiment to determine its effect on the dependent variable (DV).

By varying the level of the independent variable and observing associated changes in the dependent variable, a researcher can conclude whether the independent variable affects the dependent variable or not.

This can provide very valuable information when studying just about any subject.

Because the researcher controls the level of the independent variable, it can be determined if the independent variable has a causal effect on the dependent variable.

The term causation is vitally important. Scientists want to know what causes changes in the dependent variable. The only way to do that is to manipulate the independent variable and observe any changes in the dependent variable.

Definition of Independent and Dependent Variables

The independent variable and dependent variable are used in a very specific type of scientific study called the experiment.

Although there are many variations of the experiment, generally speaking, it involves either the presence or absence of the independent variable and the observation of what happens to the dependent variable.

The research participants are randomly assigned to either receive the independent variable (called the treatment condition), or not receive the independent variable (called the control condition).

Other variations of an experiment might include having multiple levels of the independent variable.

If the independent variable affects the dependent variable, then it should be possible to observe changes in the dependent variable based on the presence or absence of the independent variable.

Of course, there are a lot of issues to consider when conducting an experiment, but these are the basic principles.
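These basic principles can be sketched in a few lines of Python: participants are randomly split into a treatment group and a control group, and the dependent variable is then compared between the groups. The function names and all of the scores below are hypothetical and exist only to show the logic.

```python
import random
from statistics import mean


def randomly_assign(participants, seed=None):
    """Shuffle the participants and split them into treatment and control halves."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def mean_difference(treatment_scores, control_scores):
    """Difference between the group means on the dependent variable."""
    return mean(treatment_scores) - mean(control_scores)


# Twenty hypothetical participant IDs.
treatment_group, control_group = randomly_assign(range(1, 21), seed=0)

# Invented dependent-variable scores for four participants per group.
difference = mean_difference([7, 8, 6, 9], [5, 6, 5, 6])
print(len(treatment_group), len(control_group), difference)
```

A difference between group means is only a starting point; deciding whether it reflects a real effect requires a statistical test, which is outside the scope of this sketch.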

These concepts should not be confused with predictor and outcome variables.

Examples of Independent and Dependent Variables

1. Gatorade and Improved Athletic Performance

A sports medicine researcher has been hired by Gatorade to test the effects of its sports drink on athletic performance. The company wants to claim that when an athlete drinks Gatorade, their performance will improve.

If they can back up that claim with hard scientific data, that would be great for sales.

So, the researcher goes to a nearby university and randomly selects both male and female athletes from several sports: track and field, volleyball, basketball, and football. Each athlete will run on a treadmill for one hour while their heart rate is tracked.

All of the athletes are given the exact same amount of liquid to consume 30 minutes before and during their run. Half are given Gatorade, and the other half are given water, but no one knows what they are given because both liquids have been colored.

In this example, the independent variable is Gatorade, and the dependent variable is heart rate.

2. Chemotherapy and Cancer

A hospital is investigating the effectiveness of a new type of chemotherapy on cancer. The researchers identified 120 patients with relatively similar types of cancerous tumors in both size and stage of progression.

The patients are randomly assigned to one of three groups: one group receives no chemotherapy, one group receives a low dose of chemotherapy, and one group receives a high dose of chemotherapy.

Each group receives chemotherapy treatment three times a week for two months, except for the no-treatment group. At the end of two months, the doctors measure the size of each patient’s tumor.

In this study, despite the ethical issues (remember this is just a hypothetical example), the independent variable is chemotherapy, and the dependent variable is tumor size.

3. Interior Design Color and Eating Rate

A well-known fast-food corporation wants to know if the color of the interior of their restaurants will affect how fast people eat. Of course, they would prefer that consumers enter and exit quickly to increase sales volume and profit.

So, they rent space in a large shopping mall and create three different simulated restaurant interiors of different colors. One room is painted mostly white with red trim and seats; one room is painted mostly white with blue trim and seats; and one room is painted mostly white with off-white trim and seats.

Next, they randomly select shoppers on Saturdays and Sundays to eat for free in one of the three rooms. Each shopper is given a box of the same food and drink items and sent to one of the rooms. The researchers record how much time elapses from the moment they enter the room to the moment they leave.

The independent variable is the color of the room, and the dependent variable is the amount of time spent in the room eating.

4. Hair Color and Attraction

A large multinational cosmetics company wants to know if the color of a woman’s hair affects the level of perceived attractiveness in males. So, they use Photoshop to manipulate the same image of a female by altering the color of her hair: blonde, brunette, red, and brown.

Next, they randomly select university males to enter their testing facilities. Each participant sits in front of a computer screen and responds to questions on a survey. At the end of the survey, the screen shows one of the photos of the female.

At the same time, software on the computer that utilizes the computer’s camera is measuring each male’s pupil dilation. The researchers believe that larger dilation indicates greater perceived attractiveness.

The independent variable is hair color, and the dependent variable is pupil dilation.

5. Mozart and Math

After many claims that listening to Mozart will make you smarter, a group of education specialists decides to put it to the test. So, first, they go to a nearby school in a middle-class neighborhood.

During the first three months of the academic year, they randomly select some 5th-grade classrooms to listen to Mozart during their lessons and exams. Other 5th-grade classrooms will not listen to any music during their lessons and exams.

The researchers then compare the scores of the exams between the two groups of classrooms.

Although there are a lot of obvious limitations to this hypothetical, it is the first step.

The independent variable is Mozart, and the dependent variable is exam scores.

6. Essential Oils and Sleep

A company that specializes in essential oils wants to examine the effects of lavender on sleep quality. They hire a sleep research lab to conduct the study. The researchers at the lab have their usual test volunteers sleep in individual rooms every night for one week.

The conditions of each room are all exactly the same, except that half of the rooms have lavender released into the rooms and half do not. While the study participants are sleeping, their heart rates and amount of time spent in deep sleep are recorded with high-tech equipment.

At the end of the study, the researchers compare the total amount of time spent in deep sleep of the lavender-room participants with the no lavender-room participants.

The independent variable in this sleep study is lavender, and the dependent variable is the total amount of time spent in deep sleep.

7. Teaching Style and Learning

A group of teachers is interested in which teaching method will work best for developing critical thinking skills.

So, they train a group of teachers in three different teaching styles: teacher-centered, where the teacher tells the students all about critical thinking; student-centered, where the students practice critical thinking and receive teacher feedback; and AI-assisted teaching, where the teacher uses a special software program to teach critical thinking.

At the end of three months, all the students take the same test that assesses critical thinking skills. The teachers then compare the scores of each of the three groups of students.

The independent variable is the teaching method, and the dependent variable is performance on the critical thinking test.

8. Concrete Mix and Bridge Strength

A chemicals company has developed three different versions of their concrete mix. Each version contains a different blend of specially developed chemicals. The company wants to know which version is the strongest.

So, they create three bridge molds that are identical in every way. They fill each mold with one of the different concrete mixtures. Next, they test the strength of each bridge by placing progressively more weight on its center until the bridge collapses.

In this study, the independent variable is the concrete mixture, and the dependent variable is the amount of weight at collapse.

9. Recipe and Consumer Preferences

People in the pizza business know that the crust is key. Many companies, large and small, will keep their recipe a top secret. Before rolling out a new type of crust, the company decides to conduct some research on consumer preferences.

The company has prepared three versions of their crust that vary in crunchiness: a little crunchy, very crunchy, and super crunchy. They already have a pool of consumers that fit their customer profile, and they often use them for testing.

Each participant sits in a booth and takes a bite of one version of the crust. They then indicate how much they liked it by pressing one of 5 buttons: didn’t like at all, somewhat liked, liked, liked very much, loved it.

The independent variable is the level of crust crunchiness, and the dependent variable is how much it was liked.

10. Protein Supplements and Muscle Mass

A large food company is considering entering the health and nutrition sector. Their R&D food scientists have developed a protein supplement that is designed to help build muscle mass for people that work out regularly.

The company approaches several gyms near its headquarters. They enlist the cooperation of over 120 gym rats that work out 5 days a week. Their muscle mass is measured, and only those with a lower level are selected for the study, leaving a total of 80 study participants.

They randomly assign half of the participants to take the recommended dosage of their supplement every day for three months after each workout. The other half takes the same amount of something that looks the same but actually does nothing to the body.

At the end of three months, the muscle mass of all participants is measured.

The independent variable is the supplement, and the dependent variable is muscle mass.

11. Air Bags and Skull Fractures

In the early days of airbags, automobile companies conducted a great deal of testing. At first, many people in the industry didn’t think airbags would be effective at all. Fortunately, there was a way to test this theory objectively.

In a representative example: Several crash cars were outfitted with an airbag, and an equal number were not. All crash cars were of the same make, year, and model. Then the crash experts rammed each car into a crash wall at the same speed. Sensors on the crash dummy skulls allowed for a scientific analysis of how much damage a human skull would incur.

The amount of skull damage of dummies in cars with airbags was then compared with those without airbags.

The independent variable was the airbag and the dependent variable was the amount of skull damage.

12. Vitamins and Health

Some people take vitamins every day. A group of health scientists decides to conduct a study to determine if taking vitamins improves health.

They randomly select 1,000 people that are relatively similar in terms of their physical health. The key word here is “similar.”

Because the scientists have an unlimited budget (and because this is a hypothetical example), all of the participants have the same meals delivered to their homes (breakfast, lunch, and dinner) every day for one year.

In addition, the scientists randomly assign half of the participants to take a set of vitamins, supplied by the researchers, every day for one year. The other half do not take the vitamins.

At the end of one year, the health of all participants is assessed, using blood pressure and cholesterol level as the key measurements.

In this highly unrealistic study, the independent variable is vitamins, and the dependent variable is health, as measured by blood pressure and cholesterol levels.

13. Meditation and Stress

Does practicing meditation reduce stress? If you have ever wondered if this is true or not, then you are in luck because there is a way to know one way or the other.

All we have to do is find 90 people that are similar in age, stress levels, diet and exercise, and as many other factors as we can think of.

Next, we randomly assign each person to either practice meditation every day, three days a week, or not at all. After three months, we measure the stress levels of each person and compare the groups.

How should we measure stress? Well, there are a lot of ways. We could measure blood pressure or the amount of the stress hormone cortisol in the blood, or we could use a paper-and-pencil measure such as a questionnaire that asks participants how much stress they feel.

In this study, the independent variable is meditation and the dependent variable is the amount of stress (however it is measured).
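The random assignment step in this meditation example can be sketched in a few lines of Python. This is only an illustration: the participant IDs, the condition labels, and the `assign_groups` helper are made up for the example, and a real study would follow its own randomization protocol.

```python
import random

def assign_groups(participants, conditions, seed=None):
    """Shuffle participants, then deal them round-robin into the conditions."""
    rng = random.Random(seed)          # a seed makes the assignment reproducible
    shuffled = list(participants)
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for index, person in enumerate(shuffled):
        groups[conditions[index % len(conditions)]].append(person)
    return groups

# Hypothetical participant IDs and condition labels for the 90-person example.
participants = [f"P{i:02d}" for i in range(1, 91)]
conditions = ["daily", "three_days_per_week", "none"]

groups = assign_groups(participants, conditions, seed=42)
group_sizes = {condition: len(members) for condition, members in groups.items()}
print(group_sizes)
```

Dealing a shuffled list round-robin keeps the three groups the same size (30 each here) while leaving it to chance which person lands in which group.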

14. Video Games and Aggression

When video games started to become increasingly graphic, it was a huge concern in many countries in the world. Educators, social scientists, and parents were shocked at how graphic games were becoming.

Since then, there have been hundreds of studies conducted by psychologists and other researchers. A lot of those studies used an experimental design that involved males of various ages randomly assigned to play a graphic or non-graphic video game.

Afterward, their level of aggression was measured via a wide range of methods, including direct observations of their behavior, their actions when given the opportunity to be aggressive, or a variety of other measures.

Across these studies, researchers have measured aggression in many different ways.

In these experimental studies, the independent variable was graphic video games, and the dependent variable was observed level of aggression.

15. Vehicle Exhaust and Cognitive Performance

Car pollution is a concern for a lot of reasons. In addition to being bad for the environment, car exhaust may cause damage to the brain and impair cognitive performance.

One way to examine this possibility would be to conduct an animal study. The research would look something like this: laboratory rats would be raised in three different rooms that varied in the degree of car exhaust circulating in the room: no exhaust, little exhaust, or a lot of exhaust.

After a certain period of time, perhaps several months, the effects on cognitive performance could be measured.

One common way of assessing cognitive performance in laboratory rats is by measuring the amount of time it takes to run a maze successfully. It would also be possible to examine the physical effects of car exhaust on the brain by conducting an autopsy.

In this animal study, the independent variable would be car exhaust and the dependent variable would be amount of time to run a maze.


The experiment is an incredibly valuable way to answer scientific questions regarding the cause and effect of certain variables. By manipulating the level of an independent variable and observing corresponding changes in a dependent variable, scientists can gain an understanding of many phenomena.

For example, scientists can learn if graphic video games make people more aggressive, if meditation reduces stress, if Gatorade improves athletic performance, and even if certain medical treatments can cure cancer.

The determination of causality is the key benefit of manipulating the independent variable and then observing changes in the dependent variable. Other research methodologies can reveal factors that are related to the dependent variable or associated with the dependent variable, but only when the independent variable is controlled by the researcher can causality be determined.



Design of Experiments with Multiple Independent Variables: A Resource Management Perspective on Complete and Reduced Factorial Designs

Linda M. Collins, John J. Dziak


Correspondence may be sent to Linda M. Collins, The Methodology Center, Penn State, 204 E. Calder Way, Suite 400, State College, PA 16801; [email protected]

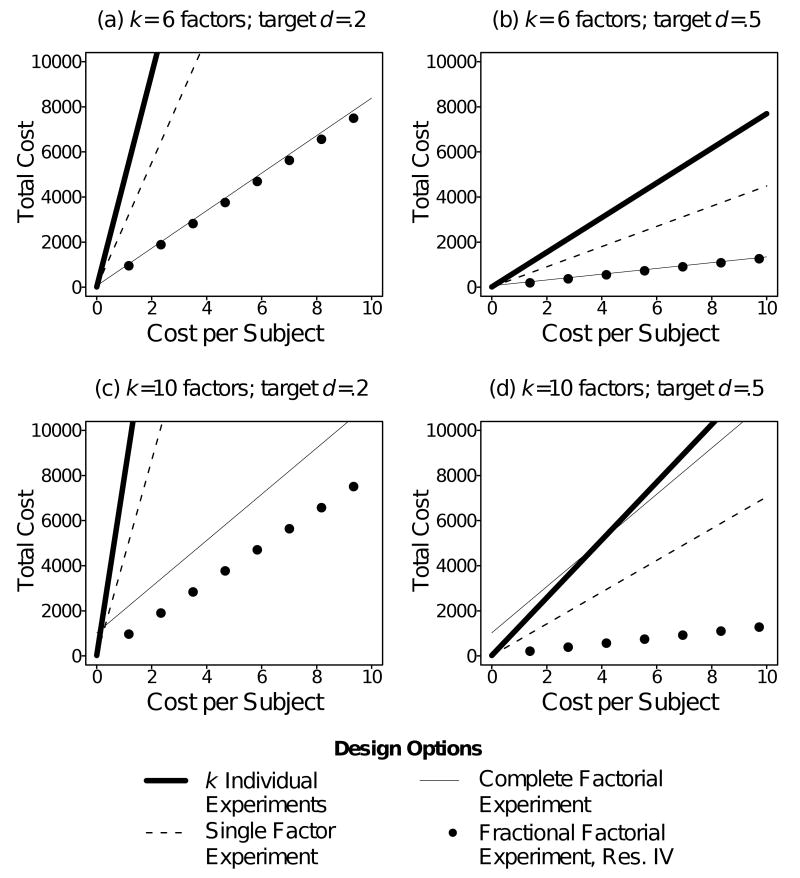

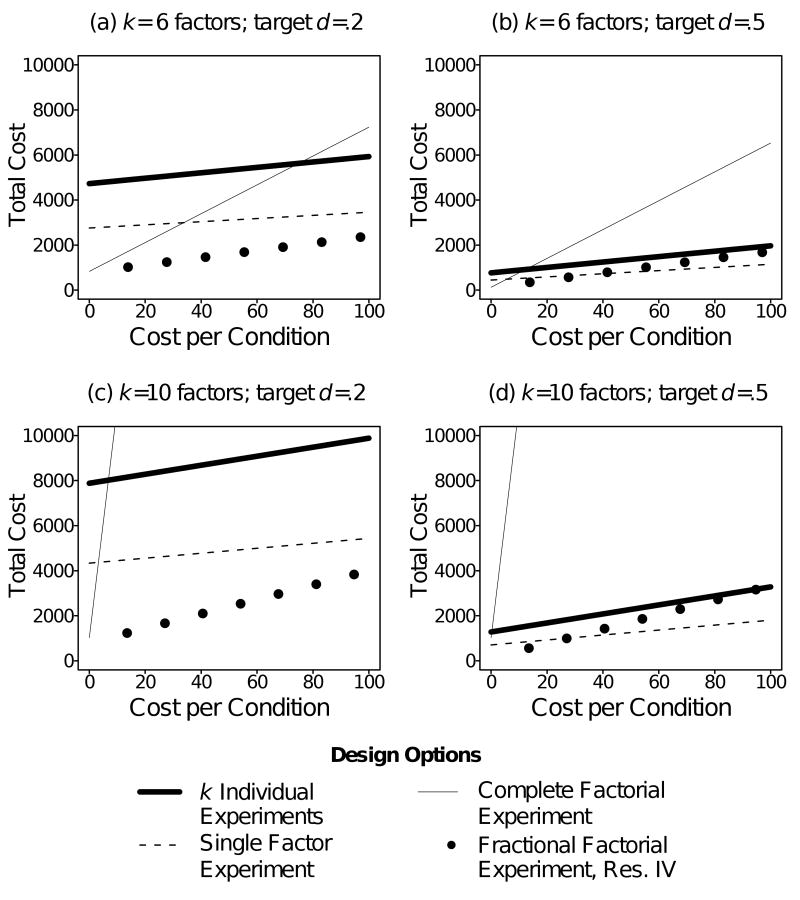

An investigator who plans to conduct experiments with multiple independent variables must decide whether to use a complete or reduced factorial design. This article advocates a resource management perspective on making this decision, in which the investigator seeks a strategic balance between service to scientific objectives and economy. Considerations in making design decisions include whether research questions are framed as main effects or simple effects; whether and which effects are aliased (confounded) in a particular design; the number of experimental conditions that must be implemented in a particular design and the number of experimental subjects the design requires to maintain the desired level of statistical power; and the costs associated with implementing experimental conditions and obtaining experimental subjects. In this article four design options are compared: complete factorial, individual experiments, single factor, and fractional factorial designs. Complete and fractional factorial designs and single factor designs are generally more economical than conducting individual experiments on each factor. Although relatively unfamiliar to behavioral scientists, fractional factorial designs merit serious consideration because of their economy and versatility.

Keywords: experimental design, fractional factorial designs, factorial designs, reduced designs, resource management

Suppose a scientist is interested in investigating the effects of k independent variables, where k > 1. For example, Bolger and Amarel (2007) investigated the hypothesis that the effect of peer social support on performance stress can be positive or negative, depending on whether the way the peer social support is given enhances or degrades self-efficacy. Their experiment could be characterized as involving four factors: support offered (yes or no), nature of support (visible or indirect), message from a confederate that recipient of support is unable to handle the task alone (yes or no), and message that a confederate would be unable to handle the task (yes or no).

One design possibility when k > 1 independent variables are to be examined is a factorial experiment. In factorial research designs, experimental conditions are formed by systematically varying the levels of two or more independent variables, or factors. For example, in the classic two × two factorial design there are two factors each with two levels. The two factors are crossed, that is, all combinations of levels of the two factors are formed, to create a design with four experimental conditions. More generally, factorial designs can include k ≥ 2 factors and can incorporate two or more levels per factor. With four two-level variables, such as in Bolger and Amarel (2007), a complete factorial experiment would involve 2 × 2 × 2 × 2 = 16 experimental conditions. One advantage of factorial designs, as compared to simpler experiments that manipulate only a single factor at a time, is the ability to examine interactions between factors. A second advantage of factorial designs is their efficiency with respect to use of experimental subjects; factorial designs require fewer experimental subjects than comparable alternative designs to maintain the same level of statistical power (e.g. Wu & Hamada, 2000).
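Crossing factors, as described above, amounts to taking the Cartesian product of their levels. The following minimal Python sketch illustrates this; the function name `full_factorial` and the generic factor names are illustrative assumptions, not part of any published design.

```python
from itertools import product

def full_factorial(factors):
    """Return one dict per experimental condition, crossing every level of every factor."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]

# The classic two x two design: two factors, two levels each.
two_by_two = full_factorial({"A": ["low", "high"], "B": ["low", "high"]})

# Four two-level factors (generic placeholder names).
four_factor = full_factorial({f"factor_{i}": ["no", "yes"] for i in range(1, 5)})

print(len(two_by_two), len(four_factor))
```

The condition count is the product of the numbers of levels, which is why it grows so quickly as factors are added.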

However, a complete factorial experiment is not always an option. In some cases there may be combinations of levels of the factors that would create a nonsensical, toxic, logistically impractical or otherwise undesirable experimental condition. For example, Bolger and Amarel (2007) could not have conducted a complete factorial experiment because some of the combinations of levels of the factors would have been illogical (e.g. no support offered but support was direct). But even when all combinations of factors are reasonable, resource limitations may make implementation of a complete factorial experiment impossible. As the number of factors and levels of factors under consideration increases, the number of experimental conditions that must be implemented in a complete factorial design increases rapidly. The accompanying logistical difficulty and expense may exceed available resources, prompting investigators to seek alternative experimental designs that require fewer experimental conditions.

In this article the term “reduced design” will be used to refer generally to any design approach that involves experimental manipulation of all k independent variables, but includes fewer experimental conditions than a complete factorial design with the same k variables. Reduced designs are often necessary to make simultaneous investigation of multiple independent variables feasible. However, any removal of experimental conditions to form a reduced design has important scientific consequences. The number of effects that can be estimated in an experimental design is limited to one fewer than the number of experimental conditions represented in the design. Therefore, when experimental conditions are removed from a design some effects are combined so that their sum only, not the individual effects, can be estimated. Another way to think of this is that two or more interpretational labels (e.g. main effect of Factor A; interaction between Factor A and Factor B) can be applied to the same source of variation. This phenomenon is known as aliasing (sometimes referred to as confounding, or as collinearity in the regression framework).

Any investigator who wants or needs to examine multiple independent variables is faced with deciding whether to use a complete factorial or a reduced experimental design. The best choice is one that strikes a careful and strategic balance between service to scientific objectives and economy. Weighing a variety of considerations to achieve such a balance, including the exact research questions of interest, the potential impact of aliasing on interpretation of results, and the costs associated with each design option, is the topic of this article.

Objectives of this article

This article has two objectives. The first objective is to propose that a resource management perspective may be helpful to investigators who are choosing a design for an experiment that will involve several independent variables. The resource management perspective assumes that an experiment is motivated by a finite set of research questions and that these questions can be prioritized for decision making purposes. Then according to this perspective the preferred experimental design is the one that, in relation to the resource requirements of the design, offers the greatest potential to advance the scientific agenda motivating the experiment. Four general design alternatives will be considered from a resource management perspective: complete factorial designs and three types of reduced designs. One of the reduced designs, the fractional factorial, is used routinely in engineering but currently unfamiliar to many social and behavioral scientists. In our view fractional factorial designs merit consideration by social and behavioral scientists alongside other more commonly used reduced designs. Accordingly, a second objective of this article is to offer a brief introductory tutorial on fractional factorial designs, in the hope of assisting investigators who wish to evaluate whether these designs might be of use in their research.

Overview of four design alternatives

Throughout this article, it is assumed that an investigator is interested in examining the effects of k independent variables, each of which could correspond to a factor in a factorial experiment. It is not necessarily a foregone conclusion that the k independent variables must be examined in a single experiment; they may represent a set of questions comprising a program of research, or a set of features or components comprising a behavioral intervention program. It is assumed that the k factors can be independently manipulated, and that no possible combination of the factors would create an experimental condition that cannot or should not be implemented. For the sake of simplicity, it is also assumed that each of the k factors has only two levels, such as On/Off or Yes/No. Factorial and fractional factorial designs can be done with factors having any number of levels, but two-level factors allow the most straightforward interpretation and largest statistical power, especially for interactions.

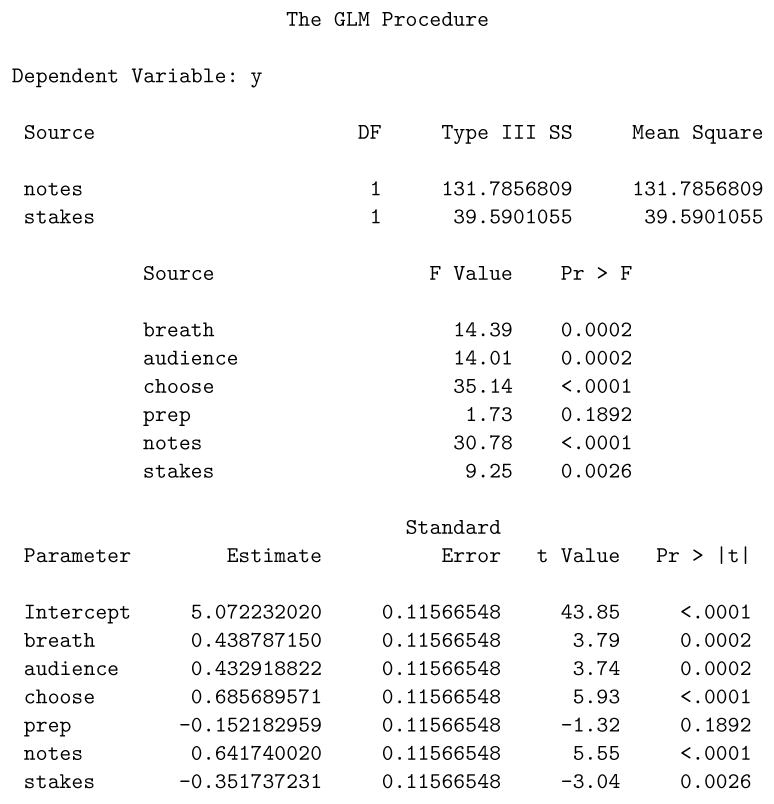

In this section the four different design alternatives considered in this article are introduced using a hypothetical example based on the following scenario: An investigator is to conduct a study on anxiety related to public speaking (this example is modeled very loosely on Bolger and Amarel, 2007). There are three factors of theoretical interest to the investigator, each with two levels, On or Off. The factors are whether or not (1) the subject is allowed to choose a topic for the presentation (choose); (2) the subject is taught a deep-breathing relaxation exercise to perform just before giving the presentation (breath); and (3) the subject is provided with extra time to prepare for the speech (prep). This small hypothetical example will be useful in illustrating some initial key points of comparison among the design alternatives. Later in the article the hypothetical example will be extended to include more factors so that some additional points can be illustrated.

The first alternative considered here is a complete factorial design. The remaining alternatives considered are reduced designs, each of which can be viewed as a subset of the complete factorial.

Complete factorial designs

Factorial designs may be denoted using the exponential notation $2^k$, which compactly expresses that k factors with 2 levels each are crossed, resulting in $2^k$ experimental conditions (sometimes called “cells”). Each experimental condition represents a unique combination of levels of the k factors. In the hypothetical example a complete factorial design would be expressed as $2^3$ (or equivalently, 2 × 2 × 2) and would involve eight experimental conditions. Table 1 shows these eight experimental conditions along with effect coding. The design enables estimation of seven effects: three main effects, three two-way interactions, and a single three-way interaction.

Table 1. Effect Coding for Complete Factorial Design with Three 2-Level Factors.

Table 1 illustrates one feature of complete factorial designs in which an equal number of subjects is assigned to each experimental condition, namely the balance property. A design is balanced if each level of each factor appears in the design the same number of times and is assigned to the same number of subjects (Hays, 1994; Wu & Hamada, 2000). In a balanced design the main effects and interactions are orthogonal, so that each one is estimated and tested as if it were the only one under consideration, with very little loss of efficiency due to the presence of other factors.¹ (Effects may still be orthogonal even in unbalanced designs if certain proportionality conditions are met; see e.g. Hays, 1994, p. 475.) The balance property is evident in Table 1; each level of each factor appears exactly four times.
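The effect coding and the balance property can be checked with a short Python sketch. Two assumptions are made here: Off is coded −1 and On is coded +1, and the conditions are ordered with choose varying slowest and prep fastest, an ordering inferred from the condition numbers cited later in the article rather than copied from Table 1. The function name `effect_coded_design` is illustrative.

```python
from itertools import combinations, product
from math import prod

FACTORS = ("choose", "breath", "prep")

def effect_coded_design(factors=FACTORS):
    """Build one row per condition with a -1/+1 code for every main effect and interaction."""
    effects = [e for size in range(1, len(factors) + 1)
               for e in combinations(factors, size)]
    rows = []
    for levels in product((-1, 1), repeat=len(factors)):   # assumed: Off = -1, On = +1
        main = dict(zip(factors, levels))
        # An interaction code is the product of the codes of the factors involved.
        rows.append({" x ".join(e): prod(main[f] for f in e) for e in effects})
    return rows

design = effect_coded_design()
labels = list(design[0])

# Balance: each level of each factor appears equally often, so each column sums to zero.
balanced = all(sum(row[f] for row in design) == 0 for f in FACTORS)

# Orthogonality: every pair of effect columns has a zero cross-product.
orthogonal = all(sum(row[a] * row[b] for row in design) == 0
                 for a, b in combinations(labels, 2))

print(len(design), len(labels), balanced, orthogonal)
```

With three factors the sketch yields eight conditions and seven effect columns, matching the three main effects, three two-way interactions, and one three-way interaction named in the text.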

Individual experiments

The individual experiments approach requires conducting a two-condition experiment for each independent variable, that is, k separate experiments. In the example this would require conducting three different experiments, involving a total of six experimental conditions. In one experiment, a condition in which subjects are allowed to choose the topic of the presentation would be compared to one in which subjects are assigned a topic; in a second experiment, a condition in which subjects are taught a relaxation exercise would be compared to one in which no relaxation exercise is taught; in a third experiment, a condition in which subjects are given ample time to prepare in advance would be compared to one in which subjects are given little preparation time. The subset of experimental conditions from the complete three-factor factorial experiment in Table 1 that would be implemented in the individual experiments approach is depicted in the first section of Table 2 . This design, considered as a whole, is not balanced. Each of the independent variables is set to On once and set to Off five times.

Table 2. Effect Coding for Reduced Designs That Comprise Subsets of the Design in Table 1 .

Single factor designs in which the factor has many levels.

In the single factor approach a single experiment is performed in which various combinations of levels of the independent variables are selected to form one nominal or ordinal categorical factor with several qualitatively distinct levels. West, Aiken, and Todd (1993; West & Aiken, 1997) reviewed three variations of the single factor design that are used frequently, particularly in research on behavioral interventions for prevention and treatment. In the comparative treatment design there are k + 1 experimental conditions: k experimental conditions in which one independent variable is set to On and all the others to Off, plus a single control condition in which all independent variables are set to Off. This approach is similar to conducting separate individual experiments, except that a shared control group is used for all factors. The second section of Table 2 shows the four experimental conditions that would comprise a comparative treatment design in the hypothetical example. These are the same experimental conditions that appear in the individual experiments design.

By contrast, for the constructive treatment design an intervention is “built” by combining successive features. For example, an investigator interested in developing a treatment to reduce anxiety might want to assess the effect of allowing the subject to choose a topic, then the incremental effect of also teaching a relaxation exercise, then the incremental effect of allowing extra preparation time. The third section of Table 2 shows the subset of experimental conditions from the complete factorial shown in Table 1 that would be implemented in a three-factor constructive treatment experiment in which first choose is added, followed by breath and then prep . The constructive treatment strategy typically has k +1 experimental conditions but may have fewer or more. The dismantling design, in which the objective is to determine the effect of removing one or more features of an intervention, and other single factor designs are based on similar logic.

Table 2 shows that both the comparative treatment design and the constructive treatment design are unbalanced. In the comparative treatment design, each factor is set to On once and set to Off three times. In the constructive treatment design, choose is set to Off once and to On three times, and prep is set to On once and to Off three times. Other single factor designs are similarly unbalanced.
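The On/Off counts quoted for these unbalanced designs can be reproduced with a small tally. In this sketch the condition numbers follow the ones the article cites when discussing aliasing (1, 2, 3, 5 for comparative treatment; 1, 5, 7, 8 for constructive treatment), and the numbering of the eight cells assumes choose varies slowest and prep fastest. Repeating condition 1 three times for the individual experiments reflects the three separate control groups. All names are illustrative.

```python
from itertools import product

FACTORS = ("choose", "breath", "prep")

# Conditions 1..8 of the complete factorial; 0 = Off, 1 = On (assumed ordering).
CONDITIONS = dict(enumerate(product((0, 1), repeat=3), start=1))

DESIGNS = {
    "individual experiments": [1, 5, 1, 3, 1, 2],   # three experiments, each with its own control
    "comparative treatment": [1, 2, 3, 5],
    "constructive treatment": [1, 5, 7, 8],
}

def on_off_counts(condition_ids):
    """Return {factor: (times On, times Off)} across the listed conditions."""
    counts = {}
    for position, factor in enumerate(FACTORS):
        times_on = sum(CONDITIONS[c][position] for c in condition_ids)
        counts[factor] = (times_on, len(condition_ids) - times_on)
    return counts

counts = {name: on_off_counts(ids) for name, ids in DESIGNS.items()}
for name, tally in counts.items():
    print(name, tally)
```

A balanced design would show equal On and Off counts for every factor; none of these three designs does.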

Fractional factorial designs

The fourth alternative considered in this article is to use a design from the family of fractional factorial designs. A fractional factorial design involves a special, carefully chosen subset, or fraction, of the experimental conditions in a complete factorial design. The bottom section of Table 2 shows a subset of experimental conditions from the complete three-factor factorial design that constitute a fractional factorial design. The experimental conditions in fractional factorial designs are selected so as to preserve the balance property.² As Table 2 shows, each level of each factor appears in the design exactly twice.

Fractional factorial designs are represented using an exponential notation based on that used for complete factorial designs. The fractional factorial design in Table 2 would be expressed as $2^{3-1}$. This notation contains the following information: (a) the corresponding complete factorial design is $2^3$, in other words involves 3 factors, each of which has 2 levels, for a total of 8 experimental conditions; (b) the fractional factorial design involves $2^{3-1} = 2^2 = 4$ experimental conditions; and (c) this fractional factorial design is a $2^{-1} = 1/2$ fraction of the complete factorial. Many fractional factorial designs, particularly those with many factors, involve even smaller fractions of the complete factorial.
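The arithmetic behind the notation, and one common way of picking a balanced half fraction, can be sketched as follows. The selection rule used here (keep the conditions whose product of −1/+1 codes is +1) is a standard construction and is an assumption on our part, not necessarily the procedure used to build Table 2. The condition numbering again assumes choose varies slowest and prep fastest.

```python
from fractions import Fraction
from itertools import product

def describe_fractional(k, p):
    """Summarize a design with k two-level factors and fraction 2**-p."""
    return {
        "complete_conditions": 2 ** k,
        "fraction_conditions": 2 ** (k - p),
        "fraction": Fraction(1, 2 ** p),
    }

def half_fraction(k=3):
    """Keep the conditions whose k-way product of effect codes equals +1."""
    kept = {}
    for number, codes in enumerate(product((-1, 1), repeat=k), start=1):
        sign = 1
        for code in codes:
            sign *= code
        if sign == 1:
            kept[number] = codes
    return kept

info = describe_fractional(3, 1)
runs = half_fraction(3)

# Balance check: each factor column sums to zero, i.e. two Off and two On.
column_sums = [sum(column) for column in zip(*runs.values())]

print(info)
print(sorted(runs), column_sums)
```

Under these assumptions the rule retains conditions 2, 3, 5, and 8, with each level of each factor appearing exactly twice.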

Aliasing in the individual experiments, single factor, and fractional factorial designs

It was mentioned above that reduced designs involve aliasing of effects. A design's aliasing is evident in its effect coding. When effects are aliased their effect coding is perfectly correlated (whether positively or negatively). Aliasing in the individual experiments approach can be seen by examining the first section of Table 2. In the experiment examining choose, the effect codes are identical for the main effect of choose and the choose × breath × prep interaction (−1 for experimental condition 1 and 1 for experimental condition 5), and these are perfectly negatively correlated with the effect codes for the choose × breath and choose × prep interactions. Thus these effects are aliased; the effect estimated by this experiment is an aggregate of the main effect of choose and all of the interactions involving choose. (The codes for the remaining effects, namely the main effects of breath and prep and the breath × prep interaction, are constants in this design.) Similarly, in the experiment investigating breath, the main effect and all of the interactions involving breath are aliased, and in the experiment investigating prep, the main effect and all of the interactions involving prep are aliased.
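The aliasing pattern in the individual experiment on choose can be verified by comparing effect-code columns across its two conditions: the control condition (all factors Off) and the condition with only choose On. The sketch below assumes Off = −1 and On = +1; the helper names are illustrative.

```python
from itertools import combinations
from math import prod

FACTORS = ("choose", "breath", "prep")

CONTROL = {"choose": -1, "breath": -1, "prep": -1}     # all factors Off
CHOOSE_ON = {"choose": 1, "breath": -1, "prep": -1}    # only choose On

def effect_codes(condition):
    """Codes for every main effect and interaction in one condition."""
    return {" x ".join(e): prod(condition[f] for f in e)
            for size in range(1, len(FACTORS) + 1)
            for e in combinations(FACTORS, size)}

def classify(conditions, target="choose"):
    """Label each effect column relative to the target column."""
    coded = [effect_codes(c) for c in conditions]
    reference = [row[target] for row in coded]
    labels = {}
    for name in coded[0]:
        column = [row[name] for row in coded]
        if len(set(column)) == 1:
            labels[name] = "constant"
        elif column == reference:
            labels[name] = "identical"
        elif column == [-value for value in reference]:
            labels[name] = "reversed"
        else:
            labels[name] = "not aliased"
    return labels

relations = classify([CONTROL, CHOOSE_ON])
for name, label in relations.items():
    print(f"{name}: {label}")
```

Columns labeled "identical" or "reversed" are perfectly correlated with the main effect of choose, so they cannot be separated from it in this two-condition experiment.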

The aliasing in single factor experiments using the comparative treatment strategy is identical to the aliasing in the individual experiments approach. As shown in the second section of Table 2 , for the hypothetical example a comparative treatment experiment would involve experimental conditions 1, 2, 3, and 5, which are the same conditions as in the individual experiments approach. The effects of each factor are assessed by means of the same comparisons; for example, the effect of choose would be assessed by comparing experimental conditions 1 and 5. The primary difference is that only one control condition would be required in the single factor experiment, whereas in the individual experiments approach three control conditions are required.

The constructive treatment strategy is comprised of a different subset of experimental conditions from the full factorial than the individual experiments and comparative treatment approaches. Nevertheless, the aliasing is similar. As the third section of Table 2 shows, the effect of adding choose would be assessed by comparing experimental conditions 1 and 5, so the aliasing would be the same as that in the individual experiment investigating choose discussed above. The cumulative effect of adding breath would be assessed by comparing experimental conditions 5 and 7. The effect codes in these two experimental conditions for the main effect of breath are perfectly (positively or negatively) correlated with those for all of the interactions involving breath , although here the effect codes for the interactions are reversed as compared to the individual experiments and comparative treatment approaches. The same reasoning applies to the effect of prep , which is assessed by comparing experimental conditions 7 and 8.

As the fourth section of Table 2 illustrates, the aliasing in fractional factorial designs is different from the aliasing seen in the individual experiments and single factor approaches. In this fractional factorial design the effect of choose is estimated by comparing the mean of experimental conditions 2 and 3 with the mean of experimental conditions 5 and 8; the effect of breath is estimated by comparing the mean of experimental conditions 3 and 8 to the mean of experimental conditions 2 and 5; and the effect of prep is estimated by comparing the mean of experimental conditions 2 and 8 to the mean of experimental conditions 3 and 5. The effect codes show that the main effect of choose and the breath × prep interaction are aliased. The remaining effects are either orthogonal to the aliased effect or constant. Similarly, the main effect of breath and the choose × prep interaction are aliased, and the main effect of prep and the choose × breath interaction are aliased.
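The alias pairs in this half fraction can be recovered mechanically by grouping effects whose code columns are identical or exact negatives of one another across conditions 2, 3, 5, and 8. The sketch assumes Off = −1, On = +1, and a condition numbering in which choose varies slowest and prep fastest; the function names are illustrative.

```python
from itertools import combinations, product
from math import prod

FACTORS = ("choose", "breath", "prep")
ALL_RUNS = dict(enumerate(product((-1, 1), repeat=3), start=1))
FRACTION = [2, 3, 5, 8]

def columns(condition_ids):
    """Effect-code column for every main effect and interaction over the given conditions."""
    cols = {}
    for size in range(1, len(FACTORS) + 1):
        for effect in combinations(range(len(FACTORS)), size):
            name = " x ".join(FACTORS[i] for i in effect)
            cols[name] = tuple(prod(ALL_RUNS[c][i] for i in effect)
                               for c in condition_ids)
    return cols

def alias_groups(condition_ids):
    """Group effects whose columns are perfectly (positively or negatively) correlated."""
    groups = {}
    for name, column in columns(condition_ids).items():
        if len(set(column)) == 1:
            continue                      # constant column: not estimable as an effect
        negated = tuple(-value for value in column)
        key = max(column, negated)        # same key for a column and its negative
        groups.setdefault(key, []).append(name)
    return sorted(groups.values())

for group in alias_groups(FRACTION):
    print(" = ".join(group))
```

Running the same function on all eight conditions puts every effect in its own group, which is the sense in which the complete factorial has no aliasing.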

Note that each source of variation in this fractional factorial design has two aliases (e.g. choose and the breath × prep interaction form a single source of variation). This is characteristic of fractional factorial designs that, like this one, are 1/2 fractions. The denominator of the fraction always reveals how many aliases each source of variation has. Thus in a fractional factorial design that is a 1/4 fraction each source of variation has four aliases; in a fractional factorial design that is a 1/8 fraction each source of variation has eight aliases; and so on.
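The relationship between the size of the fraction and the number of aliases can be written compactly. The symbol p below (the number of halvings of the complete design) is our own notation for illustration.

```latex
\[
\underbrace{2^{k-p}}_{\text{conditions in the fraction}}
\;=\; 2^{-p} \times 2^{k},
\qquad
\text{aliases per source of variation} \;=\; 2^{p}.
\]
% k = 3, p = 1 (1/2 fraction): 4 conditions, 2 aliases per source of variation
% k = 5, p = 2 (1/4 fraction): 8 conditions, 4 aliases per source of variation
```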

Aliasing and scientific questions

An investigator who is interested in using a reduced design to estimate the effects of k factors faces several considerations. These include: whether the research questions of primary scientific interest concern simple effects or main effects; whether the design's aliasing means that assumptions must be made in order to address the research questions; and how to use aliasing strategically. Each of these considerations is reviewed in this section.

Simple effects and main effects

In this article we have been discussing a situation in which a finite set of k independent variables is under consideration and the individual effects of each of the k variables are of interest. However, the question “Does a particular factor have an effect?” is incomplete; different research questions may involve different types of effects. Let us examine three different research questions concerning the effect of breath in the hypothetical example, and see how they correspond to effects in a factorial design.

Question 1: “Does the factor breath have an effect on the outcome variable when the factors choose and prep are set to Off?”

Question 2: “Will an intervention consisting of only the factors choose and prep set to On be improved if the factor breath is changed from Off to On?”

Question 3: “Does the factor breath have an effect on the outcome variable on average across levels of the other factors?”

In the language of experimental design, Questions 1 and 2 concern simple effects, and Question 3 concerns a main effect. The distinction between simple effects and main effects is subtle but important. A simple effect of a factor is an effect at a particular combination of levels of the remaining factors. There are as many simple effects for each factor as there are combinations of levels of the remaining factors. For example, the simple effect relevant to Question 1 is the conditional effect of changing breath from Off to On, assuming both prep and choose are set to Off. The simple effect relevant to Question 2 is the conditional effect of changing breath from Off to On, assuming both other factors are set to On. Thus although Questions 1 and 2 both are concerned with simple effects of breath , they are concerned with different simple effects.

A significant main effect for a factor is an effect on average across all combinations of levels of the other factors in the experiment. For example, Question 3 is concerned with the main effect of breath , that is, the effect of breath averaged across all combinations of levels of prep and choose . Given a particular set of k factors, there is only one main effect corresponding to each factor.

Simple effects and main effects are not interchangeable, unless we assume that all interactions are negligible. Thus, neither necessarily tells anything about the other. A positive main effect does not imply that all of the simple effects are nonzero or even nonnegative. It is even possible (due to a large interaction) for one simple effect to be positive, another simple effect for the same factor to be negative, and the main (averaged) effect to be zero. In the public speaking example, the answer to Question 2 does not imply anything about whether an intervention consisting of breath alone would be effective, or whether there would be an incremental effect of breath if it were added to an intervention initially consisting of choose alone.
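A small numerical illustration may help. The cell means below are entirely hypothetical, constructed so that breath raises the outcome by 4 points when choose is Off and lowers it by 4 points when choose is On; they are not data from any study.

```python
from itertools import product
from statistics import mean

# Hypothetical cell means, keyed by (choose, breath, prep) with 0 = Off, 1 = On.
cell_means = {}
for choose, breath, prep in product((0, 1), repeat=3):
    breath_shift = (4 if choose == 0 else -4) * breath   # large breath x choose interaction
    cell_means[(choose, breath, prep)] = 50 + breath_shift - 2 * prep

def simple_effect_of_breath(choose, prep):
    """Effect of switching breath from Off to On at fixed levels of the other factors."""
    return cell_means[(choose, 1, prep)] - cell_means[(choose, 0, prep)]

def main_effect_of_breath():
    """Simple effects of breath averaged over all combinations of choose and prep."""
    return mean(simple_effect_of_breath(c, p) for c, p in product((0, 1), repeat=2))

question_1 = simple_effect_of_breath(choose=0, prep=0)   # other factors Off
question_2 = simple_effect_of_breath(choose=1, prep=1)   # other factors On
question_3 = main_effect_of_breath()

print(question_1, question_2, question_3)
```

In this constructed example the simple effect for Question 1 is positive, the simple effect for Question 2 is negative, and the main effect for Question 3 averages to zero, so no one of the three answers could be inferred from another.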

Research questions, aliasing, and assumptions

Suppose an investigator is interested in addressing Question 1 above. The answer to this research question depends only upon the particular simple effect of breath when both of the other factors are set to Off. The research question does not ask whether any observed differences are attributable to the main effect of breath , the breath × prep interaction, the breath × choose interaction, the breath × prep × choose interaction, or some combination of the aliased effects. The answer to Question 2, which also concerns a simple effect, depends only upon whether changing breath from Off to On has an effect on the outcome variable when prep and choose are set to On; it does not depend on establishing whether any other effects in the model are present or absent. As Kirk (1968) pointed out, simple effects “represent a partition of a treatment sum of squares plus an interaction sum of squares” (p. 380). Thus, although there is aliasing in the individual experiments and comparative treatment strategies, these designs are appropriate for addressing Question 1, because the aliased effects correspond exactly to the effect of interest in Question 1. Similarly, although there is aliasing in the constructive treatment strategy, this design is appropriate for addressing Question 2. In other words, although in our view it is important to be aware of aliasing whenever considering a reduced experimental design, the aliasing ultimately is of little consequence if the aliased effect as a package is of primary scientific interest.

The individual experiments and comparative treatment strategies would not be appropriate for addressing Question 2. The constructive treatment strategy could address Question 1, but only if breath was the first factor set high, with the others low, in the first non-control group. The conclusions drawn from these experiments would be limited to simple effects and cannot be extended to main effects or interactions.

The situation is different if a reduced design is to be used to estimate main effects. Suppose an investigator is interested in addressing Question 3, that is, is interested in the main effect of breath. As was discussed above, in the individual experiments, comparative treatment, and constructive treatment approaches the main effect of breath is aliased with all the interactions involving breath. It is appropriate to use these designs to draw conclusions about the main effect of breath only if it is reasonable to assume that all of the interactions involving breath up to the k-way interaction are negligible. Then any effect of breath observed using an individual experiment or a single factor design is attributable to the main effect.

The difference in the aliasing structure of fractional factorial designs as compared to individual experiments and single factor designs becomes particularly salient when the primary scientific questions that motivate an experiment require estimating main effects as opposed to simple effects, and when larger numbers of factors are involved. However, the small three-factor fractional factorial experiment in Table 2 can be used to demonstrate the logic behind the choice of a particular fractional factorial design. In the design in Table 2 the main effect of breath is aliased with one two-way interaction: prep × choose. If it is reasonable to assume that this two-way interaction is negligible, then it is appropriate to use this fractional factorial design to estimate the main effect of breath. In general, investigators considering using a fractional factorial design seek a design in which main effects and scientifically important interactions are aliased only with effects that can be assumed to be negligible.
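The aliasing of breath with prep × choose in a half fraction of a three-factor design can be checked directly from the contrast columns. The following is a minimal sketch under an assumed defining relation (the product breath × prep × choose equals +1 in every retained run); the fraction shown in Table 2 may use the opposite sign, in which case the two columns would be exact negatives of each other and equally inseparable.

```python
from itertools import product

# Complete 2^3 factorial in -1/+1 coding (Off = -1, On = +1).
# Column order in each run: (breath, prep, choose).
full = list(product((-1, 1), repeat=3))

# Assumed defining relation for this sketch: I = breath x prep x choose,
# i.e. keep only the runs whose three-way product is +1.
half = [run for run in full if run[0] * run[1] * run[2] == 1]

# Contrast column for the main effect of breath.
breath_column = [b for b, p, c in half]

# Contrast column for the prep x choose interaction.
prep_x_choose_column = [p * c for b, p, c in half]

# Identical contrast columns mean the two effects cannot be separated.
aliased = breath_column == prep_x_choose_column
print(half)
print(aliased)
```

Because the two columns coincide in the four retained conditions, any estimate computed from the breath column is equally an estimate of prep × choose, which is why the negligibility assumption is needed.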

Many fractional factorial designs in which there are four or more factors require many fewer and much weaker assumptions for estimation of main effects than those required by the small hypothetical example used here. For these larger problems it is possible to identify a fractional factorial design that uses fewer experimental conditions than the complete design but in which main effects and also two-way interactions are aliased only with interactions involving three or more factors. Many of these designs also enable identification of some three-way interactions that are to be aliased only with interactions involving four or more factors. In general, the appeal of fractional factorial designs increases as the number of factors becomes larger. By contrast, individual experiments and single factor designs always alias main effects and all interactions from the two-way up to the k-way, no matter how many factors are involved.

Strategic aliasing and designating negligible effects

A useful starting point for choosing a reduced design is sorting all of the effects in the complete factorial into three categories: (1) effects that are of primary scientific interest and therefore are to be estimated; (2) effects that are expected to be zero or negligible; and (3) effects that are not of primary scientific interest but may be non-negligible. Strategic aliasing involves ensuring that effects of primary scientific interest are aliased only with negligible effects. There may be non-negligible effects that are not of scientific interest. Resources are not to be devoted to estimating such effects, but care must be taken not to alias them with effects of primary scientific interest.

Considering which, if any, effects to place in the negligible category is likely to be an unfamiliar, and perhaps in some instances uncomfortable, process for some social and behavioral scientists. However, the choice is critically important. On the one hand, when more effects are designated negligible the available options will in general include designs involving smaller numbers of experimental conditions; on the other hand, incorrectly designating effects as negligible can threaten the validity of scientific conclusions. The best bases for making assumptions about negligible effects are theory and prior empirical research. Yet there are few areas in the social and behavioral sciences in which theory makes specific predictions about higher-order interactions, and it appears that to date there has been relatively little empirical investigation of such interactions. Given this lack of guidance, on what basis can an investigator decide on assumptions?

A very cautious approach would be to assume that each and every interaction up to the k-way interaction is likely to be sizeable, unless there is empirical evidence or a compelling theoretical basis for assuming that it is negligible. This is equivalent to leaving the negligible category empty and designating each effect either of primary scientific interest or non-negligible. There are two strategies consistent with this perspective. One is to conduct a complete factorial experiment, being careful to ensure adequate statistical power to detect any interactions of scientific interest. The other strategy consistent with assuming all interactions are likely to be sizeable is to frame research questions only about simple effects that can reasonably be estimated with the individual experiments or single factor approaches. For example, as discussed above the aliasing associated with the comparative treatment design may not be an issue if research questions are framed in terms of simple effects.

If these cautious strategies seem too restrictive, another possibility is to adopt some heuristic guiding principles (see Wu & Hamada, 2000) that are used in engineering research for informing the choice of assumptions and aliasing structure and to help target resources in areas where they are likely to result in the most scientific progress. The guiding principles are intended for use when theory and prior research are unavailable; if guidance from these sources is available it should always be applied first. One guiding principle is called Hierarchical Ordering. This principle states that when resources are limited, the first priority should be estimation of lower order effects. Thus main effects are the first investigative priority, followed by two-way interactions. As Green and Rao (1971) noted, “…in many instances the simpler (additive) model represents a very good approximation of reality” (p. 359), particularly if measurement quality is good and floor and ceiling effects can be avoided. Another guiding principle is called Effect Sparsity (Box & Meyer, 1986), or sometimes the Pareto Principle in Experimental Design (Wu & Hamada, 2000). This principle states that the number of sizeable and important effects in a factorial experiment is small in comparison to the overall number of effects. Taken together, these principles suggest that unless theory and prior research specifically suggest otherwise, there are likely to be relatively few sizeable interactions except for a few two-way interactions and even fewer three-way interactions, and that aliasing the more complex and less interpretable higher-order interactions may well be a good choice.

Resolution of fractional factorial designs

Some general information about aliasing of main effects and two-way interactions is conveyed in a fractional factorial design's resolution (Wu & Hamada, 2000). Resolution is designated by a Roman numeral, usually either III, IV, V or VI. The aliasing of main effects and two-way interactions in these designs is shown in Table 3. As Table 3 shows, as design resolution increases, main effects and two-way interactions become increasingly free of aliasing with lower-order interactions. Importantly, no design that is Resolution III or higher aliases main effects with other main effects.

Table 3. Resolution of Fractional Factorial Designs and Aliasing of Effects.

Table 3 shows only which effects are not aliased with main effects and two-way interactions. Which and how many effects are aliased with main effects and two-way interactions depends on the exact design. For example, consider a 2^(6−2) fractional factorial design. As mentioned previously, this is a 1/4 fraction design, so each source of variance has four aliases; thus each main effect is aliased with three other effects. Suppose this design is Resolution IV. Then none of the three effects aliased with the main effect will be another main effect or a two-way interaction. Instead, they will be three higher-order interactions.
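The alias structure of such a design can be worked out mechanically from its defining relation. The sketch below assumes one common choice of generators for a 2^(6−2) design, E = ABC and F = BCD, with the six factors labeled A through F; these labels and generators are assumptions made for illustration and are not taken from the text. Effects are represented as sets of letters, and multiplying two effects cancels any letter that appears twice.

```python
from itertools import combinations

def multiply(word_a, word_b):
    """Multiply two effect 'words'; letters appearing twice cancel (A*A = I)."""
    return frozenset(word_a) ^ frozenset(word_b)

def label(word):
    """Readable name for an effect, e.g. frozenset('CBE') -> 'BCE'."""
    return "".join(sorted(word)) or "I"

# Assumed generators: E = ABC and F = BCD, giving the words ABCE and BCDF.
generators = [frozenset("ABCE"), frozenset("BCDF")]

# Defining relation: products of every non-empty subset of the generators.
defining_words = []
for size in range(1, len(generators) + 1):
    for subset in combinations(generators, size):
        word = frozenset()
        for g in subset:
            word = multiply(word, g)
        defining_words.append(word)

# Resolution is the length of the shortest word in the defining relation.
resolution = min(len(w) for w in defining_words)

def aliases(effect):
    """Effects aliased with `effect`: multiply it by each defining word."""
    names = (label(multiply(effect, w)) for w in defining_words)
    return sorted(names, key=lambda s: (len(s), s))

aliases_of_A = aliases("A")
print(sorted(label(w) for w in defining_words))
print(resolution, aliases_of_A)
```

Under these assumed generators the shortest defining word has four letters, so the design is Resolution IV, and the main effect of A is aliased with exactly three other effects, each an interaction of three or more factors.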

According to the Hierarchical Ordering and Effect Sparsity principles, in the absence of theory or evidence to the contrary it is reasonable to make the working assumption that higher-order interactions are less likely to be sizeable than lower-order interactions. Thus, all else being equal, higher resolution designs, which alias scientifically important main effects and two-way interactions with higher-order interactions, are preferred to lower resolution designs, which alias these effects with lower-order interactions or with main effects. This concept has been called the maximum resolution criterion by Box and Hunter (1961).

In general higher resolution designs tend to require more experimental conditions, although for a given number of experimental conditions there may be design alternatives with different resolutions.

Relative resource requirements of the four design alternatives

Number of experimental conditions and subjects required.

The four design options considered here can vary widely with respect to the number of experimental conditions that must be implemented and the number of subjects required to achieve a given statistical power. These two resource requirements must be considered separately. In single factor experiments, the number of subjects required to perform the experiment is directly proportional to the number of experimental conditions to be implemented. However, when comparing different designs in a multi-factor framework this is not the case. For instance, a complete factorial may require many more experimental conditions than the corresponding individual experiments or single factor approach, yet require fewer total subjects.

Table 4 shows how to compute the number of experimental conditions required by each of the four design alternatives. As Table 4 indicates, the individual experiments, single factor and fractional factorial approaches are more economical than the complete factorial approach in terms of number of experimental conditions that must be implemented. In general, the single factor approach requires the fewest experimental conditions.

Table 4. Aliasing and Economy of Four Design Approaches with k 2-level Independent Variables.

N = total sample size required to maintain desired level of power in complete factorial design.

Table 4 also provides a comparison of the minimum number of subjects required to maintain the same level of statistical power. Suppose a total of k factors are to be investigated, with the smallest effect size among them equal to d, and that a total minimum sample size of N is required in order to maintain a desired level of statistical power at a particular Type I error rate. The effect size d might be the expected normalized difference between two means, or it might be the smallest normalized difference considered clinically or practically significant. (Note that in practice there must be at least one subject per experimental condition, so N must at least equal the number of experimental conditions. This may require additional subjects beyond the number needed to achieve a given level of power when implementing complete factorial designs with large k.) Table 4 shows that the complete factorial and fractional factorial designs are most economical in terms of sample size requirements. In any balanced factorial design each main effect is estimated using all subjects, averaging across the levels of the other factors. In the hypothetical three-factor example, the main effects of choose, breath and prep are each based on all N subjects, with the subjects sorted differently into treatment and control groups for each main effect estimate. For example, Table 2 shows that in both the complete and fractional factorial designs a subject assigned to experimental condition 3 is in the Off group for the purpose of estimating the main effects of choose and prep but in the On group for the purpose of estimating the main effect of breath.
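The contrast between number of conditions and number of subjects can be made concrete with a rough calculation. The formulas in the sketch below are not copied from Table 4; they are this sketch's reading of the design descriptions, under the assumption that any two-group comparison needs N subjects in total (N/2 per group) to reach the desired power. The function name, the parameter p (the fraction exponent of a 2^(k−p) design) and the example values k = 3 and N = 120 are all hypothetical, and the sketch ignores the requirement of at least one subject per condition.

```python
def resource_requirements(k, n_total, p=1):
    """Return {design: (conditions, subjects)} for k two-level factors.

    n_total: subjects needed for one two-group comparison at the desired
             power (n_total / 2 per group); assumed, not taken from Table 4.
    p:       fraction exponent for a 2^(k-p) fractional factorial (assumed).
    """
    per_group = n_total / 2
    return {
        # k separate two-condition experiments, each needing n_total subjects
        "individual experiments": (2 * k, k * n_total),
        # one control group plus k treatment groups, per_group subjects each
        "single factor": (k + 1, (k + 1) * per_group),
        # each main effect uses all subjects: half in On, half in Off
        "complete factorial": (2 ** k, n_total),
        "fractional factorial": (2 ** (k - p), n_total),
    }

requirements = resource_requirements(k=3, n_total=120)
for design, (conditions, subjects) in requirements.items():
    print(f"{design}: {conditions} conditions, {subjects:g} subjects")
```

With these illustrative inputs the complete factorial needs the most conditions but, like the fractional factorial, the fewest subjects, because every subject contributes to every main effect estimate.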